Archive for the ‘T-SQL’ Category

SQL Server XQuery

I promised my students an example of writing xquery statements in Microsoft SQL Server. This post builds on two earlier posts. The first shows how to build a marvel table with source data, and the second shows how you can create an XML Schema Collection and insert relational data into an XML structure.

You can query a sequence with xquery as follows:

DECLARE @x xml;
SET @x = N'';
SELECT @x.query('(1,2,(10,11,12,13,14,15)),-6');

It returns:

1 2 10 11 12 13 14 15 -6

You can query a sequence with an xquery FLWOR statement. FLWOR stands for: FOR, LET, WHERE, ORDER BY, and RETURN. A sample xquery with a FLWOR statement is:

DECLARE @x xml;
SET @x = N'';
SELECT @x.query('for $i in ((1,2,(10,11,12,13,14,15)),-6)
                 order by $i
                 return $i');

It returns:

-6 1 2 10 11 12 13 14 15
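The example above only uses the for, order by, and return parts of a FLWOR statement. Here's a small sketch that adds a where clause and sorts in descending order. The flattened sequence and the cutoff value of 2 are my own illustration, not part of the original example.

```sql
DECLARE @x xml;
SET @x = N'';

-- Keep only the values greater than 2, then sort them from high to low.
SELECT @x.query('for $i in (1,2,10,11,12,13,14,15,-6)
                 where $i > 2
                 order by $i descending
                 return $i');
```

It should return only the six values from 10 through 15, listed from highest to lowest.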

You can query the entire node tree with the following xquery statement because it looks for the occurrence of any node with the /* search string:

DECLARE @x xml;
SET @x = N'<marvel>
             <avenger_name>Captain America</avenger_name>
           </marvel>';
SELECT @x.query('/*');

You can query the avenger_name elements from the marvel_xml table with the following syntax:

SELECT xml_table.query('/marvel/avenger_name') FROM marvel_xml;

It returns the following set of avenger_name elements:

<avenger_name>Hulk</avenger_name>
<avenger_name>Iron Man</avenger_name>
<avenger_name>Black Widow</avenger_name>
<avenger_name>Thor</avenger_name>
<avenger_name>Captain America</avenger_name>
<avenger_name>Hawkeye</avenger_name>
<avenger_name>Winter Soldier</avenger_name>
<avenger_name>Iron Patriot</avenger_name>

You can query the fourth avenger_name element from the marvel_xml table with the following xquery statement:

SELECT xml_table.query('/marvel[4]/avenger_name') FROM marvel_xml;

It returns the following avenger_name element:

<avenger_name>Thor</avenger_name>

You can use the value() function to verify an avenger_name element exists:

SELECT CASE
         WHEN xml_table.value('(/marvel[4]/avenger_name)[1]','nvarchar(1)') = 'T'
         THEN 'Exists'
       END AS "Verified"
FROM   marvel_xml
WHERE  id = 3;

The query returns the Exists string when it finds a valid avenger_name element. You have a number of other tools to query result sets from the XML node tree.
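One of those other tools is the exist() method of the xml data type, which tests for a node directly instead of comparing a truncated string. Here's a minimal sketch against an untyped xml variable. The variable and the 'Missing' fallback are my own additions for illustration.

```sql
DECLARE @x xml;
SET @x = N'<marvel><avenger_name>Thor</avenger_name></marvel>';

-- exist() returns 1 when the path expression finds at least one node.
SELECT CASE
         WHEN @x.exist('/marvel/avenger_name[text() = "Thor"]') = 1
         THEN 'Exists'
         ELSE 'Missing'
       END AS "Verified";
```

The comparison sits inside a predicate on purpose. A bare Boolean expression always yields a value, so exist() would report 1 even when the comparison is false.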

I hope this helps my students and anybody interested in writing xquery-enabled queries.

Functions disallow NDS

My students asked if you could embed an OFFSET x ROWS FETCH NEXT y ROWS ONLY clause in a SQL Server T-SQL user-defined function the way you can in Oracle Database 12c with an NDS statement. The answer is no, not in the same way: T-SQL only accepts OFFSET and FETCH as part of an ORDER BY clause, and it disallows dynamic SQL inside a function. There’s an example of the Oracle approach in Chapter 2 of my Oracle Database 12c PL/SQL Programming book if you’re interested. I also demonstrate a different approach to SQL Server T-SQL table functions in this older post. However, an attempt to add the clause without an ORDER BY clause to a SQL Server T-SQL function, like this:

CREATE FUNCTION studentdb.getBatch
(@rows   AS INT
,@offset AS INT) RETURNS
@output TABLE
( marvel_id    INT
, avenger_name VARCHAR(30)
, first_name   VARCHAR(20)
, last_name    VARCHAR(20))
AS
BEGIN
  /* Insert the results into the table variable. */
  INSERT @output
  SELECT marvel_id
  ,      avenger_name
  ,      first_name
  ,      last_name
  FROM   studentdb.marvel
  OFFSET (@offset - 1) ROWS FETCH NEXT @rows ROWS ONLY;

  /* Return the table variable from the function. */
  RETURN;
END;

Throws the following errors trying to compile the function:

Msg 102, Level 15, State 1, Procedure getBatch, Line 16
Incorrect syntax near '@offset'.
Msg 153, Level 15, State 2, Procedure getBatch, Line 16
Invalid usage of the option NEXT in the FETCH statement.
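T-SQL documents OFFSET and FETCH as arguments of the ORDER BY clause, and the function above has no ORDER BY. Here's a sketch of the same function with one added. It assumes SQL Server 2012 or later, and the getBatchOrdered name and the choice of marvel_id as the sort key are mine, not part of the original test case.

```sql
CREATE FUNCTION studentdb.getBatchOrdered
(@rows   AS INT
,@offset AS INT) RETURNS
@output TABLE
( marvel_id    INT
, avenger_name VARCHAR(30)
, first_name   VARCHAR(20)
, last_name    VARCHAR(20))
AS
BEGIN
  /* Insert the results into the table variable. */
  INSERT @output
  SELECT marvel_id
  ,      avenger_name
  ,      first_name
  ,      last_name
  FROM   studentdb.marvel
  ORDER BY marvel_id  /* OFFSET and FETCH belong to the ORDER BY clause. */
  OFFSET (@offset - 1) ROWS FETCH NEXT @rows ROWS ONLY;

  /* Return the table variable from the function. */
  RETURN;
END;
```

A call like SELECT * FROM studentdb.getBatchOrdered(2,2); should skip one row and fetch the next two.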

If you have a strong background in Oracle and can sort through the dynamic SQL syntax for T-SQL, you might try rewriting the function to use the EXEC sp_executesql @var; command. A rewrite that attempts to use NDS (Native Dynamic SQL) would look like this:

CREATE FUNCTION studentdb.getBatch
(@rows   AS INT
,@offset AS INT) RETURNS
@output TABLE
( marvel_id    INT
, avenger_name VARCHAR(30)
, first_name   VARCHAR(20)
, last_name    VARCHAR(20))
AS
BEGIN
  DECLARE
    /* Declare a variable for a dynamic SQL statement. */
    @stmt VARCHAR(400);

  /* Assign the SQL statement to a variable. */
  SET @stmt = N'SELECT marvel_id '
            + N',      avenger_name '
            + N',      first_name '
            + N',      last_name '
            + N'FROM   studentdb.marvel '
            + N'OFFSET ' + (@offset - 1) + N' '
            + N'ROWS FETCH NEXT ' + @rows + N' ROWS ONLY;';

  BEGIN
    /* Insert the results into the table variable. */
    INSERT @output
    EXEC sp_executesql @stmt;
  END;

  /* Return the table variable from the function. */
  RETURN;
END;

Throws the following exception because you can’t use dynamic SQL inside a T-SQL function:

Msg 443, Level 16, State 14, Procedure getBatch, Line 23
Invalid use of a side-effecting operator 'INSERT EXEC' within a function.
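The restriction applies to functions, not to stored procedures. If you really want the dynamic statement, here's a hedged sketch of it as a procedure. The getBatchProc name and the @skip_rows and @fetch_rows parameter names are hypothetical, and it assumes SQL Server 2012 or later for the OFFSET and FETCH syntax.

```sql
CREATE PROCEDURE studentdb.getBatchProc
  @rows   INT
, @offset INT
AS
BEGIN
  /* sp_executesql expects an NVARCHAR statement and parameter list. */
  DECLARE @stmt NVARCHAR(400);
  DECLARE @skip INT;

  /* EXEC arguments can't be expressions, so compute the offset first. */
  SET @skip = @offset - 1;

  /* Bind the values as parameters rather than concatenating them. */
  SET @stmt = N'SELECT marvel_id, avenger_name, first_name, last_name '
            + N'FROM   studentdb.marvel '
            + N'ORDER BY marvel_id '
            + N'OFFSET @skip_rows ROWS FETCH NEXT @fetch_rows ROWS ONLY;';

  EXEC sp_executesql @stmt
  ,    N'@skip_rows INT, @fetch_rows INT'
  ,    @skip_rows  = @skip
  ,    @fetch_rows = @rows;
END;
```

Passing the numbers as parameters also sidesteps the conversion problem you get when you concatenate an INT onto a string.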

On the other hand you can rewrite the statement with a BETWEEN operator and it works somewhat like an OFFSET and FETCH operation. That refactored function would be written as follows:

CREATE FUNCTION studentdb.getBatch
(@rowa AS INT
,@rowb AS INT) RETURNS
@output TABLE
( marvel_id    INT
, avenger_name VARCHAR(30)
, first_name   VARCHAR(20)
, last_name    VARCHAR(20))
AS
BEGIN
  /* Insert the results into the table variable. */
  INSERT @output
  SELECT marvel_id
  ,      avenger_name
  ,      first_name
  ,      last_name
  FROM   studentdb.marvel
  WHERE  marvel_id BETWEEN @rowa AND @rowb;

  /* Return the table variable from the function. */
  RETURN;
END;

It doesn’t raise an exception. You can call the table function like this:

SELECT * FROM getBatch(2,3);

It returns the two rows for Iron Man and Black Widow. As always, I hope this helps.
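The BETWEEN approach depends on the marvel_id values having no gaps. Once rows are deleted, the key range and the row positions drift apart. A sketch that numbers the rows first avoids that assumption. The row_no alias and the numbered derived table name are mine.

```sql
SELECT marvel_id
,      avenger_name
,      first_name
,      last_name
FROM  (SELECT marvel_id
       ,      avenger_name
       ,      first_name
       ,      last_name
       ,      ROW_NUMBER() OVER (ORDER BY marvel_id) AS row_no
       FROM   studentdb.marvel) AS numbered
WHERE  row_no BETWEEN 2 AND 3;  /* Second and third rows by position. */
```

You could put the same query inside the function and pass @rowa and @rowb in place of the literals.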

If you want to create the test case, here’s the script you need:

SELECT 'Conditionally drop studentdb.marvel table.' AS "Statement";
IF OBJECT_ID('studentdb.marvel','U') IS NOT NULL
  DROP TABLE studentdb.marvel;

SELECT 'Create studentdb.marvel table.' AS "Statement";
CREATE TABLE studentdb.marvel
( marvel_id     INT          NOT NULL IDENTITY(1,1) CONSTRAINT marvel_pk PRIMARY KEY
, avenger_name  VARCHAR(30)  NOT NULL
, first_name    VARCHAR(20)  NOT NULL
, last_name     VARCHAR(20)  NOT NULL);

/* Insert the rows. */
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Hulk','Bruce','Banner');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Iron Man','Tony','Stark');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Black Widow','Natasha','Romanoff');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Thor','Thor','Odinsson');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Captain America','Steve','Rogers');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Hawkeye','Clint','Barton');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Winter Soldier','Bucky','Barnes');
INSERT INTO studentdb.marvel (avenger_name, first_name, last_name) VALUES ('Iron Patriot','James','Rhodey');

/* Query the contents of the MARVEL table. */
SELECT * FROM studentdb.marvel;

Convert to SQL Server?

I’m always amazed at the questions that pop up for me. For example, how do you convert an Oracle script that creates my Video Store model to a Microsoft SQL Server script? It’s not very hard but there’s one big caveat, and that’s the fact that system_user is a reserved word in SQL Server. That means you can’t create the Access Control List (ACL) table with a system_user name. The alternative is to convert the system_user table name to database_user. That’s what I’ve done in this example.

It’s also important to note that this example uses Microsoft SQL Server’s sqlcmd in batch mode. Naturally, it presumes that you’ve created a student user with a trivial password of student, and a studentdb schema. It also presumes that you’ve granted privileges so everything works (if you need help on that, check my earlier post on how to set up a studentdb schema).

The following is an example of conditionally dropping and then creating the system_user and common_lookup tables in an Oracle schema. It uses a CASCADE CONSTRAINTS clause to eliminate dependencies with foreign key values.

-- Conditionally drop the table and sequence.
BEGIN
  FOR i IN (SELECT NULL
            FROM   user_tables
            WHERE  table_name = 'SYSTEM_USER') LOOP
    EXECUTE IMMEDIATE 'DROP TABLE system_user CASCADE CONSTRAINTS';
  END LOOP;
  FOR i IN (SELECT NULL
            FROM   user_sequences
            WHERE  sequence_name = 'SYSTEM_USER_S1') LOOP
    EXECUTE IMMEDIATE 'DROP SEQUENCE system_user_s1';
  END LOOP;
END;
/

-- Create SYSTEM_USER table.
CREATE TABLE system_user
( system_user_id        NUMBER        CONSTRAINT system_user_pk PRIMARY KEY
, system_user_name      VARCHAR2(20)  CONSTRAINT system_user_nn1 NOT NULL
, system_user_group_id  NUMBER        CONSTRAINT system_user_nn2 NOT NULL
, system_user_type      NUMBER        CONSTRAINT system_user_nn3 NOT NULL
, first_name            VARCHAR2(20)
, middle_name           VARCHAR2(20)
, last_name             VARCHAR2(20)
, created_by            NUMBER        CONSTRAINT system_user_nn4 NOT NULL
, creation_date         DATE          CONSTRAINT system_user_nn5 NOT NULL
, last_updated_by       NUMBER        CONSTRAINT system_user_nn6 NOT NULL
, last_update_date      DATE          CONSTRAINT system_user_nn7 NOT NULL
, CONSTRAINT system_user_fk1 FOREIGN KEY (created_by)
  REFERENCES system_user (system_user_id)
, CONSTRAINT system_user_fk2 FOREIGN KEY (last_updated_by)
  REFERENCES system_user (system_user_id));

-- Create SYSTEM_USER_S1 sequence with a start value of 1001.
CREATE SEQUENCE system_user_s1 START WITH 1001;

-- Conditionally drop the table and sequence.
BEGIN
  FOR i IN (SELECT NULL
            FROM   user_tables
            WHERE  table_name = 'COMMON_LOOKUP') LOOP
    EXECUTE IMMEDIATE 'DROP TABLE common_lookup CASCADE CONSTRAINTS';
  END LOOP;
  FOR i IN (SELECT NULL
            FROM   user_sequences
            WHERE  sequence_name = 'COMMON_LOOKUP_S1') LOOP
    EXECUTE IMMEDIATE 'DROP SEQUENCE common_lookup_s1';
  END LOOP;
END;
/

-- Create COMMON_LOOKUP table.
CREATE TABLE common_lookup
( common_lookup_id       NUMBER
, common_lookup_context  VARCHAR2(30)  CONSTRAINT nn_clookup_1 NOT NULL
, common_lookup_type     VARCHAR2(30)  CONSTRAINT nn_clookup_2 NOT NULL
, common_lookup_meaning  VARCHAR2(30)  CONSTRAINT nn_clookup_3 NOT NULL
, created_by             NUMBER        CONSTRAINT nn_clookup_4 NOT NULL
, creation_date          DATE          CONSTRAINT nn_clookup_5 NOT NULL
, last_updated_by        NUMBER        CONSTRAINT nn_clookup_6 NOT NULL
, last_update_date       DATE          CONSTRAINT nn_clookup_7 NOT NULL
, CONSTRAINT pk_c_lookup_1 PRIMARY KEY(common_lookup_id)
, CONSTRAINT fk_c_lookup_1 FOREIGN KEY(created_by)
  REFERENCES system_user(system_user_id)
, CONSTRAINT fk_c_lookup_2 FOREIGN KEY(last_updated_by)
  REFERENCES system_user(system_user_id));

-- Create a non-unique index on a single column.
CREATE INDEX common_lookup_n1
  ON common_lookup(common_lookup_context);

-- Create a unique index based on two columns.
CREATE UNIQUE INDEX common_lookup_u2
  ON common_lookup(common_lookup_context,common_lookup_type);

-- Create COMMON_LOOKUP_S1 sequence with a start value of 1001.
CREATE SEQUENCE common_lookup_s1 START WITH 1001;

You can do the same thing for a database_user table in Microsoft SQL Server with the following syntax. Unfortunately, there isn’t a CASCADE CONSTRAINTS clause that we can append in Microsoft SQL Server. The script uses a dynamic SQL statement with a Common Table Expression (CTE) to generate a list of ALTER statements that drop foreign key constraints in the schema.

/* Drop all foreign keys. */
USE studentdb;

/* Create a session variable to hold a command list. */
SELECT 'Create a session variable.' AS "Statement";
DECLARE @sql NVARCHAR(MAX) = N'';

/* Generate the command list to drop foreign key constraints. */
SELECT 'Generate dynamic SQL statements.' AS "Statement";
;WITH x AS
 (SELECT N'ALTER TABLE ' +
         OBJECT_SCHEMA_NAME(parent_object_id) + N'.' +
         OBJECT_NAME(parent_object_id) + N' ' +
         N'DROP CONSTRAINT ' + name + N';' AS sqlstmt
  FROM   sys.foreign_keys)
SELECT @sql += sqlstmt FROM x;

/* Call the dynamically generated statements. */
SELECT 'Execute dynamic SQL statements.' AS "Statement";
EXEC sp_executesql @sql;

/* Conditionally drop database_user table. */
SELECT 'Conditionally drop studentdb.database_user table.' AS "Statement";
IF OBJECT_ID('studentdb.database_user','U') IS NOT NULL
  DROP TABLE studentdb.database_user;

/* Create a table with self-referencing foreign key constraints. */
SELECT 'Create studentdb.database_user table.' AS "Statement";
CREATE TABLE studentdb.database_user
( database_user_id        INT          NOT NULL IDENTITY(1,1) CONSTRAINT database_user_pk PRIMARY KEY
, database_user_name      VARCHAR(20)  NOT NULL
, database_user_group_id  INT          NOT NULL
, database_user_type      INT          NOT NULL
, first_name              VARCHAR(20)
, middle_name             VARCHAR(20)
, last_name               VARCHAR(20)
, created_by              INT          NOT NULL
, creation_date           DATE         NOT NULL
, last_updated_by         INT          NOT NULL
, last_update_date        DATE         NOT NULL
, CONSTRAINT database_user_fk1 FOREIGN KEY (created_by)
  REFERENCES studentdb.database_user (database_user_id)
, CONSTRAINT database_user_fk2 FOREIGN KEY (last_updated_by)
  REFERENCES studentdb.database_user (database_user_id));

/* Conditionally drop common_lookup table. */
SELECT 'Conditionally drop studentdb.common_lookup table.' AS "Statement";
IF OBJECT_ID('studentdb.common_lookup','U') IS NOT NULL
  DROP TABLE studentdb.common_lookup;

/* Create a table with external referencing foreign key constraints. */
SELECT 'Create studentdb.common_lookup table.' AS "Statement";
CREATE TABLE studentdb.common_lookup
( common_lookup_id       INT          NOT NULL IDENTITY(1,1) CONSTRAINT common_lookup_pk PRIMARY KEY
, common_lookup_context  VARCHAR(30)  CONSTRAINT nn_clookup_1 NOT NULL
, common_lookup_type     VARCHAR(30)  CONSTRAINT nn_clookup_2 NOT NULL
, common_lookup_meaning  VARCHAR(30)  CONSTRAINT nn_clookup_3 NOT NULL
, created_by             INT          CONSTRAINT nn_clookup_4 NOT NULL
, creation_date          DATE         CONSTRAINT nn_clookup_5 NOT NULL
, last_updated_by        INT          CONSTRAINT nn_clookup_6 NOT NULL
, last_update_date       DATE         CONSTRAINT nn_clookup_7 NOT NULL
, CONSTRAINT common_lookup_fk1 FOREIGN KEY(created_by)
  REFERENCES studentdb.database_user (database_user_id)
, CONSTRAINT common_lookup_fk2 FOREIGN KEY(last_updated_by)
  REFERENCES studentdb.database_user (database_user_id));
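The self-referencing foreign keys mean the first row in database_user has to point at itself. Here's a minimal sketch of seeding that row. The SYSADMIN name and the group and type values are placeholders of mine, and the literal 1 assumes a freshly created table whose identity column starts at 1.

```sql
/* Seed the first user; created_by and last_updated_by reference
   the row's own identity value, assumed here to be 1. */
INSERT INTO studentdb.database_user
( database_user_name
, database_user_group_id
, database_user_type
, created_by
, creation_date
, last_updated_by
, last_update_date )
VALUES
('SYSADMIN', 1, 1, 1, GETDATE(), 1, GETDATE());
```

If the table has already handed out identity values, the literal 1 won't match the new row and the foreign key check should reject the insert.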

You can run it from a file by calling the sqlcmd utility. You’ll need to know several things to run it. First, you need to know your database instance. You can capture that from a query against the data dictionary or catalog. Just run the following from inside the Microsoft SQL Server Management Studio (SSMS):

SELECT @@SERVERNAME;

In my case, it shows the following, which is the machine’s hostname, a backslash, and SQLEXPRESS:

MCLAUGHLINSQL\SQLEXPRESS

The script uses sqltest.sql as a file name, and you can call it from the Windows shell environment like this:

sqlcmd -S MCLAUGHLINSQL\SQLEXPRESS -U student -P student -i C:\Data\MicrosoftSQL\sqltest.sql -o C:\Data\Microsoft\sqltest.out

As always, I hope this helps.

A T-SQL Table Function

I had an interesting conversation about table functions in Oracle’s PL/SQL, and the fact that they’re not available in MySQL. When I explained they’re available in Microsoft T-SQL User-Defined Functions (UDFs), my students wanted a small example. One of them said they’d tried to do it but couldn’t get it to work because they found the Microsoft web pages difficult to read and use. Specifically, they didn’t like the sparseness of this one on how to create a function.

Here’s a quick definition of a UDF table function that runs in the studentdb schema (created in this post for migrating SQL Server into a MySQL database). The following getConquistador function takes a single string, which filters the result set of the query that serves as the function’s return value. You should note that this is an inline table-valued function written in ordinary T-SQL, not a Common Language Runtime (CLR) function.

CREATE FUNCTION studentdb.getConquistador
(@nationality AS VARCHAR(30))
RETURNS TABLE
RETURN SELECT * FROM studentdb.conquistador WHERE nationality = @nationality;

Unlike Oracle SQL, where you need to use the TABLE function to read the content of a table result from a function, you don’t need anything other than the function call in the FROM clause of a T-SQL query. Here’s an example of calling the table function:

SELECT * FROM studentdb.getConquistador('German');

The complete result from the query would produce these results when run from the sqlcmd command-line interface:

conquistador_id conquistador          actual_name          nationality
--------------- --------------------- -------------------- ------------
             11 Nicolas de Federman   Nikolaus Federmann   German
             13 Jorge de la Espira    George von Speyer    German

(2 rows affected)

However, you also have the ability to query only rows of interest without any specialized syntax, like this:

1> USE studentdb;
2> SELECT conquistador AS "Conquistador"
3> ,      actual_name  AS "Name"
4> FROM   studentdb.getConquistador('German');
5> GO

This produces the following two-column result set:

Conquistador          Name
--------------------- --------------------
Nicolas de Federman   Nikolaus Federmann
Jorge de la Espira    George von Speyer

(2 rows affected)
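Because the function call sits in the FROM clause like a table, you can also drive it from another row source with CROSS APPLY. This is only a sketch: the list of nationalities is made up for illustration, and a value with no matching rows simply contributes nothing to the result.

```sql
SELECT n.nationality  AS "Nationality"
,      c.conquistador AS "Conquistador"
,      c.actual_name  AS "Name"
FROM  (VALUES ('German'),('Spanish')) AS n (nationality)
CROSS APPLY studentdb.getConquistador(n.nationality) AS c;
```

The function runs once for each row of the n derived table, and the results are stitched together into one result set.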

Hope this helps those interested in T-SQL UDFs.

Why Stored Programs?

Why should you use stored programs? It’s a great question, and here’s my little insight into a situation that I heard about in a large organization.

A very large organization is having a technology argument. In some ways, like politics, half-truths drive this type of discussion. This company has hundreds of databases, and they’re split about evenly between SQL Server and Oracle. The argument (half-truth) states that using T-SQL or PL/SQL yields “spaghetti” code!

It seems like an old argument from my perspective. After all, I’ve been working with T-SQL and PL/SQL for a long time. Spaghetti code exists in every language when unskilled programmers solve problems but the point here is one of software architecture, and an attempt to malign stored programming in general. Let’s examine the merit of the argument against stored programs.

First of all, the argument against stored programs is simply not true. SQL DML statements, like the INSERT, UPDATE, and DELETE statements should maintain ACID compliant interactions with a single table in a database. Unfortunately, the same statements create anomalies (errors) in a poorly designed database.

Stored programs provide the ability to perform ACID compliant interactions across a series of tables in a database. They may also hide database design errors and protect the data from corruption. The same can’t be said for Java or C# developers. Java and C# developers frequently fail to see database design errors or they overlook them as inconsequential. This type of behavior results in corrupt data.

It typically raises cost, errors, and overall application complexity when key logic migrates outside the database. If you’re asking why, that’s great. Here are my thoughts on why:

- Making a Java or C# programmer responsible for managing the transaction scope across multiple tables in a database is not trivial. It requires a programmer who has truly mastered SQL. As a rule, it means a programmer writes many more lines of logic in their code because they don’t understand how to use SQL. It often eliminates joins from being performed in the database, where they would considerably outperform external language operations.

- Identifying bottlenecks and poor usage of data becomes much more complex for DBAs because small queries that avoid joins don’t appear problematic inside the database. DBAs don’t look at the execution or scope of transactions running outside of the database and you generally are left with anecdotal customer complaints about the inefficiency of the application. Therefore, you have diminished accountability.

- Developing a library of stored procedures (and functions) ensures the integrity of transaction management. It also provides a series of published interfaces to developers writing the application logic. The published interface provides a modular interface, and lets developers focus on delivering quality applications without worrying about the database design. It lowers costs and increases quality by focusing developers on their strengths rather than trying to make them generalists. That having been said, it should never mask a poorly designed database!

- Service level agreements are critical metrics in any organization because they compel efficiency. If you mix the logic of the database and the application layer together, you can’t hold the development team responsible for the interface or batch processing metrics because they’ll always “blame” the database. Likewise, you can’t hold the database team responsible for performance when their metrics will only show trivial DML statement processing. Moreover, the DBA team will always show you that it’s not their fault because they’ve got metrics!

- Removing transaction controls from the database server generally means you increase the analysis and design costs. That’s because few developers have deep understanding of a non-database programming language and the database. Likewise, input from DBAs is marginalized because the solution that makes sense is disallowed by design fiat. Systems designed in this type of disparate way often evolve into extremely awkward application models.

Interestingly, the effective use of T-SQL or PL/SQL often identifies, isolates, and manages issues in poorly designed database models. That’s because they focus on the integrity of transactions across tables and leverage native database features. They also act like CSS files, effectively avoiding the use of inline style or embedded SQL and transaction control statements.

Let’s face this fact; any person who writes something like “spaghetti” code in the original context is poorly informed. They’re typically trying to sidestep blame for an existing bad application design or drive a change of platform without cost justification.

My take on this argument is twofold. Technologists in the organization may want to dump what they have and play with something else; or business and IT management may want to sidestep the wrath of angry users by blaming their failure on technology instead of how they didn’t design, manage, or deliver it.

Oh, wait … isn’t that last paragraph the reason for the existence of prepackaged software? 😉 Don’t hesitate to chime in, after all it’s just my off-the-cuff opinion.

SQL Automated Numbers

I’ve begun putting together an online database tutorial, and I’ve expanded this entry and added horizontal scrolling to it. You can find the improved version of the blog post as a blog page here.

Surrogate keys are interesting structures in databases. They’re essential if you want to make sure you optimize your design. They’re also very useful when you want to capture the automatic numbering value for a prior INSERT statement and reuse the automatic numbering value as the foreign key value in a subsequent statement. It was interesting to see how they’re implemented differently across Oracle, MySQL, and SQL Server while providing the same utility.

Below is a synopsis of how you implement these in Oracle, MySQL, and SQL Server.

Oracle

The first thing to qualify is that Oracle is generally always in a transactional mode. That means you don’t need to do anything special to set this example up.

Oracle doesn’t support automated numbering in tables prior to Oracle 12c. Oracle 12c introduces identity columns, and the mechanics change. However, you can use sequences to mimic automated numbering prior to Oracle 12c and without identity columns in Oracle 12c. A sequence is a structure in the database that holds a current value and increments it by a fixed value, typically 1. Sequences are available in SQL and PL/SQL scopes through two pseudo columns. The pseudo columns are .nextval and .currval (note the two r’s because it’s not a stray dog).

The sequence_name.nextval call in any session places the next number from the sequence into your Program Global Area (PGA), which is a memory context. After you’ve called the sequence into memory, you can access it again by using sequence_name.currval. The sequence only changes when you call it again with the .nextval pseudo column.

-- Conditionally drop data structures - tables and sequences.
BEGIN
  FOR i IN (SELECT table_name
            FROM   user_tables
            WHERE  table_name IN ('ONE','TWO')) LOOP
    EXECUTE IMMEDIATE 'DROP TABLE '||i.table_name||' CASCADE CONSTRAINTS';
  END LOOP;
  FOR i IN (SELECT sequence_name
            FROM   user_sequences
            WHERE  sequence_name IN ('ONE_S1','TWO_S1')) LOOP
    EXECUTE IMMEDIATE 'DROP SEQUENCE '||i.sequence_name;
  END LOOP;
END;
/

-- Create base table and sequence.
CREATE TABLE one
( one_id    INT          NOT NULL CONSTRAINT pk_one PRIMARY KEY
, one_text  VARCHAR(10)  NOT NULL );

CREATE SEQUENCE one_s1;

-- Create dependent table and sequence.
CREATE TABLE two
( two_id    INT          NOT NULL CONSTRAINT pk_two PRIMARY KEY
, one_id    INT          NOT NULL
, two_text  VARCHAR(10)  NOT NULL );

CREATE SEQUENCE two_s1;

-- Insert rows into the tables with sequence values.
INSERT INTO one VALUES (one_s1.nextval,'One!');
INSERT INTO one VALUES (one_s1.nextval,'Two!');
INSERT INTO two VALUES (two_s1.nextval, one_s1.currval,'Other Two!');

-- Display the values inserted with sequences.
SELECT   o.one_id
,        o.one_text
,        t.two_id
,        t.two_text
FROM     one o JOIN two t ON o.one_id = t.one_id;

If you mimic automatic numbering with database triggers, you may not have access to the .currval value for the second INSERT statement. This occurs when you provide a NULL value expecting the trigger to manage .NEXTVAL call for you.

Transactions require that you keep the primary key value for the first table in a locally scoped variable for reuse. Then, you can pass it to the next INSERT statement. You do that with the .CURRVAL value.

You can make a potentially erroneous assumption that you’re the only user updating the table. Operating under that assumption, you can query the highest sequence number from the table before an insert, add one to it, and then attempt the INSERT statement. In a multi-user system, it’s possible that somebody beats you to the finish line with their INSERT statement. Your insert would then have a duplicate surrogate key value for the one_id column, and fail on an ORA-00001 error for a uniqueness violation on a primary key column.

A database trigger can help you avoid a race condition. The trigger would ensure sequence values are unique but it may also introduce problems. A common Oracle trigger with a pseudo automatic numbering paradigm is represented by the following trigger (found in APEX generated code).

CREATE OR REPLACE TRIGGER one_t1
BEFORE INSERT ON one
FOR EACH ROW
BEGIN
  :NEW.one_id := one_s1.nextval;
END;
/

Caution is required on this type of automated sequence trigger. There are two problems with this type of trigger.

One scenario is where you include a call to sequence_name.NEXTVAL in your INSERT statement. It then increments the sequence, and attempts to insert the value whereupon the trigger fires and repeats the behavior. Effectively, this type of logic creates a sequence that increments by one when you submit a null value in the values clause and by two when you submit a sequence_name.NEXTVAL value.

Another scenario occurs when you attempt a bulk INSERT operation on the table. The sequence call and substitution occurs on each row of the bulk insert.

You face another problem when you rewrite the trigger to only fire when a surrogate primary key isn’t provided, like this:

CREATE OR REPLACE TRIGGER one_t1
BEFORE INSERT ON one
FOR EACH ROW
WHEN (NEW.one_id IS NULL) -- Asynchronous with bulk insert operations when a value is provided by the bulk operation to the surrogate key column.
BEGIN
  :NEW.one_id := one_s1.nextval;
END;
/

This trigger design causes a problem only with bulk INSERT statements. Effectively, the sequence remains unaltered when you provide surrogate key values as part of inserting an array of values. The next non-bulk INSERT statement would then grab the .NEXTVAL value, attempt to use it, and raise a unique constraint violation because the bulk operation probably already used the value from the sequence.

The fix to bulk operations requires that you lock the table, disable a trigger like this, and get the .NEXTVAL value. Then, you assign the .NEXTVAL value to two local variables. One of these remains unchanged while the other increments as you populate the array for the bulk insert operation. After assigning the result from the .NEXTVAL, you drop the sequence and find the highest key value for the bulk insertion operation, add one to the highest key value, and store it in another locally scoped variable. You perform the bulk insert operation and then recreate the sequence with a value one greater than the highest value in the table, which should already be in a locally scoped variable. Don’t forget that you’d locked the table, so unlock it now.

You should note that database triggers run in a subshell with access only to the immediate shell that fired them. Therefore, you can’t set a bind variable in a SQL*Plus session and subsequently reference it inside the trigger body because it doesn’t have access to the variable.

MySQL

MySQL supports automatic numbering but not a default transactional mode like Oracle. You need to disable auto commit and start a transaction. You also need to assign the last automatic numbering value to a variable before using it in a subsequent INSERT statement. You must also provide an overriding list of mandatory columns when you opt to exclude the automated numbering column value. The one thing that we should all appreciate about MySQL is their desire to stay close to and comply with ANSI standards.

-- Conditionally drop the tables.
DROP TABLE IF EXISTS one;
DROP TABLE IF EXISTS two;

-- Create the tables with a surrogate key that automatically increments.
CREATE TABLE one
( one_id    INT PRIMARY KEY AUTO_INCREMENT
, one_text  VARCHAR(20));

CREATE TABLE two
( two_id    INT PRIMARY KEY AUTO_INCREMENT
, one_id    INT
, two_text  VARCHAR(20));

-- Start transaction cycle.
START TRANSACTION;

-- Insert first row, transfer auto increment to memory.
INSERT INTO one (one_text) VALUES ('One');

-- Assign last auto increment to local scope variable, the = works too.
SET @one_fk := last_insert_id();

-- Insert second row with auto increment and local scope variable.
INSERT INTO two (one_id, two_text) VALUES (@one_fk,'Two');

COMMIT;

-- Display the values inserted with auto incremented values.
SELECT   o.one_id
,        o.one_text
,        t.two_id
,        t.two_text
FROM     one o JOIN two t ON o.one_id = t.one_id;

SQL Server

SQL Server supports automatic numbering but they call it the identity value. There are two ways to use it but the one I’m showing is for SQL Server 2005 or newer. You can substitute the older @@identity for the SCOPE_IDENTITY() function call, but @@identity isn’t limited to the current scope, so an insert fired by a trigger can change the value it returns. Microsoft has also already removed first level support from SQL Server 2000. While they’ve not said @@identity is deprecated, it sure appears that’s possible in a future release.

USE student;

BEGIN TRAN;

-- Conditionally drop tables when they exist.
IF OBJECT_ID('dbo.one','U') IS NOT NULL DROP TABLE dbo.one;
IF OBJECT_ID('dbo.two','U') IS NOT NULL DROP TABLE dbo.two;

-- Create auto incrementing tables.
CREATE TABLE one
( one_id    INT          NOT NULL IDENTITY(1,1) CONSTRAINT pk_one PRIMARY KEY
, one_text  VARCHAR(10)  NOT NULL );

CREATE TABLE two
( two_id    INT          NOT NULL IDENTITY(1,1) CONSTRAINT pk_two PRIMARY KEY
, one_id    INT          NOT NULL
, two_text  VARCHAR(10)  NOT NULL );

-- Insert the values, and magically no override signature required.
INSERT INTO one VALUES ('One!');
INSERT INTO one VALUES ('Two!');
INSERT INTO two VALUES (SCOPE_IDENTITY(),'Other Two!');

-- Query the results.
SELECT   o.one_id
,        o.one_text
,        t.two_id
,        t.two_text
FROM     one o JOIN two t ON o.one_id = t.one_id;

COMMIT TRAN;

You should note that T-SQL doesn’t require an override signature (a column list that skips the identity column) when you insert into a table with an automatic numbering column. This is different, isn’t it?

While the prior example works with two tables, it doesn’t scale to a series of tables. You should consider the following assignment pattern when you have several last identity values to track in a single transaction scope.

    DECLARE @one_pk AS INT;
    SET @one_pk = SCOPE_IDENTITY();

As mentioned, this style is important when you’ve got a series of primary and foreign keys to map in the scope of a single transaction. Also, I’d suggest that you put all the declarations at the beginning of the transaction’s scope.
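Here’s a minimal sketch of that pattern across three tables. It assumes the one and two tables from the script above already exist; the three table and its columns are hypothetical and only there to show a second captured key in use.

```sql
-- Hypothetical third table (not part of the original script).
IF OBJECT_ID('dbo.three','U') IS NOT NULL DROP TABLE dbo.three;

CREATE TABLE three
( three_id   INT         NOT NULL IDENTITY(1,1) CONSTRAINT pk_three PRIMARY KEY
, one_id     INT         NOT NULL
, two_id     INT         NOT NULL
, three_text VARCHAR(10) NOT NULL );

-- Declare every key holder before the transaction starts.
DECLARE @one_pk AS INT;
DECLARE @two_pk AS INT;

BEGIN TRAN;

-- Capture each identity value immediately after its insert,
-- before another insert in the same scope replaces it.
INSERT INTO one (one_text) VALUES ('One!');
SET @one_pk = SCOPE_IDENTITY();

INSERT INTO two (one_id, two_text) VALUES (@one_pk,'Two!');
SET @two_pk = SCOPE_IDENTITY();

-- Both captured keys remain available for later foreign keys.
INSERT INTO three (one_id, two_id, three_text) VALUES (@one_pk, @two_pk,'Three!');

COMMIT TRAN;
```

The point of the variables is that SCOPE_IDENTITY() only remembers the most recent insert in the scope, so an inline call can never reach back to the key from two inserts ago.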

As always, I hope this helps some folks.

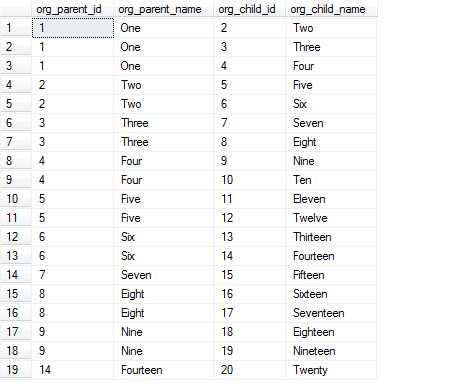

T-SQL Hierarchical Query

Playing around with Microsoft SQL Server 2008 Express edition, I’ve sorted through a bunch of tidbits. One that I thought was interesting is how to perform a recursive or hierarchical query. This post describes how you can perform the magic.

The official name of the WITH clause in Oracle’s lexicon (otherwise known as Oraclese) is a subquery factoring clause. You can find more on that in this earlier blog post. Microsoft has a different name for the WITH clause. They call it a Common Table Expression or CTE.

You perform recursive queries in Microsoft SQL Server 2008 by leveraging CTEs. I’ve modified the setup code from that earlier blog post to run in SQL Server 2008. You’ll find it at the bottom of this blog post.

Unless you want to write your own C# (.NET is the politically correct lingo) equivalent to Oracle’s SQL*Plus, you’ll need to run this script in the SQL Server Management Studio. Actually, you can use Microsoft SQL Server 2008’s command-line utility, which is called sqlcmd.exe but it is much less robust than SQL*Plus. In the Management Studio, you click File, then Open, and File… to load the file for execution, and then click the Execute button. You need to be careful you don’t click the Debug button, which is the green arrow to the right of the Execute button.

![]()

This is the magic query in the illustration. You can also find it in the source code. At the end of the day, I’m hard pressed to understand why they’d use a UNION ALL to support recursion.

The top-most CTE, or subquery factoring clause, simply joins the ORGANIZATION_NAME to the ORG_PARENT_ID and ORG_CHILD_ID columns to provide a single working source. The second CTE performs the recursion. Its first query, above the UNION ALL, sets the starting rows, and its second query, below the UNION ALL, recursively navigates the tree. The first query runs once, and the second query then runs repeatedly against the rows found in the prior pass, picking up their children, until a pass returns no rows.

You should note that the second CTE references itself from inside its second query. Then, the external query (the non-CTE query) returns the results by querying the same CTE.

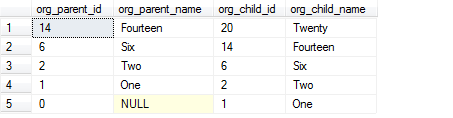

This logic behaves more like a nested loop, and actually fails to move down branches of the tree like a recursive program. Otherwise line 19 would be line 14 in the output. You could write another CTE to fix this shortfall, thereby mirroring a true recursive behavior, or you can write a stored procedure.
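One way to get the depth-first ordering is to carry a sortable path through the recursion and order by it. What follows is only a sketch under a few assumptions: it reads dbo.org_structure from the setup script directly, it starts at the root row (the one with a parent of 0), the sort_path column is a name I made up, and the three-digit zero padding assumes no ID runs past 999.

```sql
WITH jn AS
(SELECT os.org_parent_id
 ,      os.org_child_id
        -- Zero pad the ID so a text sort matches a numeric sort.
 ,      CAST(RIGHT('000' + CAST(os.org_child_id AS VARCHAR(3)), 3) AS VARCHAR(900)) AS sort_path
 FROM   dbo.org_structure os
 WHERE  os.org_parent_id = 0
 UNION ALL
 SELECT c.org_parent_id
 ,      c.org_child_id
        -- Append the child's padded ID to the parent's path.
 ,      CAST(p.sort_path + '/' + RIGHT('000' + CAST(c.org_child_id AS VARCHAR(3)), 3) AS VARCHAR(900))
 FROM   jn AS p JOIN dbo.org_structure AS c
 ON     c.org_parent_id = p.org_child_id)
SELECT   jn.org_parent_id
,        jn.org_child_id
,        jn.sort_path
FROM     jn
ORDER BY jn.sort_path;
```

Both halves of the UNION ALL cast the path to the same VARCHAR(900) because a recursive CTE requires the column types of the two queries to match exactly. Ordering by the path puts each child directly under its parent, which is the order a recursive program would visit the nodes.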

The illustrated query outputs the following hierarchical relationship, which navigates down the hierarchical tree:

You can also go up any branch of the tree by changing some of the logic. You’ll find the query to navigate up the tree as the second query in the setup script at the end of the blog. It renders the following output:

The blog will be updated if I discover the equivalent to the LEVEL in Oracle’s self-referencing semantics. If you know it, please share it with everybody.
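In the meantime, a common workaround, offered as a sketch rather than a built-in equivalent, is to carry a counter through the recursive CTE. The org_level column name is my own, and the query assumes the dbo.org_structure table from the setup script, starting at the root row whose parent is 0.

```sql
WITH jn AS
(SELECT os.org_parent_id
 ,      os.org_child_id
        -- The starting row is level 1.
 ,      1 AS org_level
 FROM   dbo.org_structure os
 WHERE  os.org_parent_id = 0
 UNION ALL
 SELECT c.org_parent_id
 ,      c.org_child_id
        -- Each pass down the tree adds one to the parent's level.
 ,      p.org_level + 1
 FROM   jn AS p JOIN dbo.org_structure AS c
 ON     c.org_parent_id = p.org_child_id)
SELECT   jn.org_level
,        jn.org_parent_id
,        jn.org_child_id
FROM     jn
ORDER BY jn.org_level
,        jn.org_child_id;
```

The counter behaves like LEVEL only in the sense that it reports the depth of each row relative to the starting row; it is an ordinary column, so you have to compute it in every recursive CTE that needs it.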

Setup Script

Microsoft SQL Server 2008 Join Script

    USE student;

    BEGIN TRAN;

    -- Conditionally drop tables when they exist.
    IF OBJECT_ID('dbo.ORGANIZATION','U') IS NOT NULL DROP TABLE dbo.ORGANIZATION;
    IF OBJECT_ID('dbo.ORG_STRUCTURE','U') IS NOT NULL DROP TABLE dbo.ORG_STRUCTURE;

    -- Create the organization table.
    CREATE TABLE ORGANIZATION
    ( organization_id   INT
    , organization_name VARCHAR(10));

    -- Seed the organizations.
    INSERT INTO dbo.ORGANIZATION VALUES
     (1,'One'), (2,'Two'), (3,'Three'), (4,'Four'), (5,'Five')
    ,(6,'Six'), (7,'Seven'), (8,'Eight'), (9,'Nine'), (10,'Ten')
    ,(11,'Eleven'), (12,'Twelve'), (13,'Thirteen'), (14,'Fourteen'), (15,'Fifteen')
    ,(16,'Sixteen'), (17,'Seventeen'), (18,'Eighteen'), (19,'Nineteen'), (20,'Twenty');

    -- Create the organization structure table that holds the recursive key.
    CREATE TABLE org_structure
    ( org_structure_id INT
    , org_parent_id    INT
    , org_child_id     INT );

    -- Seed the organization structures.
    INSERT INTO org_structure VALUES
     ( 1, 0, 1),( 1, 1, 2),( 1, 1, 3),( 1, 1, 4),( 1, 2, 5)
    ,( 1, 2, 6),( 1, 3, 7),( 1, 3, 8),( 1, 4, 9),( 1, 4,10)
    ,( 1, 5,11),( 1, 5,12),( 1, 6,13),( 1, 6,14),( 1, 7,15)
    ,( 1, 8,16),( 1, 8,17),( 1, 9,18),( 1, 9,19),( 1,14,20);

    COMMIT TRAN;

    -- Navigating down the tree from the root node.
    WITH org_name AS
    (SELECT   os.org_parent_id AS org_parent_id
     ,        o1.organization_name AS org_parent_name
     ,        os.org_child_id AS org_child_id
     ,        o2.organization_name AS org_child_name
     FROM     dbo.organization o1 RIGHT JOIN dbo.org_structure os
     ON       o1.organization_id = os.org_parent_id RIGHT JOIN dbo.organization o2
     ON       o2.organization_id = os.org_child_id)
    , jn AS
    (SELECT   org_parent_id, org_parent_name
     ,        org_child_id, org_child_name
     FROM     org_name
     WHERE    org_parent_id = 1
     UNION ALL
     SELECT   c.org_parent_id, c.org_parent_name
     ,        c.org_child_id, c.org_child_name
     FROM     jn AS p JOIN org_name AS c
     ON       c.org_parent_id = p.org_child_id)
    SELECT   jn.org_parent_id, jn.org_parent_name
    ,        jn.org_child_id, jn.org_child_name
    FROM     jn
    ORDER BY 1;

    -- Navigating up the tree from the 20th leaf-node child.
    WITH org_name AS
    (SELECT   os.org_parent_id AS org_parent_id
     ,        o1.organization_name AS org_parent_name
     ,        os.org_child_id AS org_child_id
     ,        o2.organization_name AS org_child_name
     FROM     dbo.organization o1 RIGHT JOIN dbo.org_structure os
     ON       o1.organization_id = os.org_parent_id RIGHT JOIN dbo.organization o2
     ON       o2.organization_id = os.org_child_id)
    , jn AS
    (SELECT   org_parent_id, org_parent_name
     ,        org_child_id, org_child_name
     FROM     org_name
     WHERE    org_child_id = 20
     UNION ALL
     SELECT   c.org_parent_id, c.org_parent_name
     ,        c.org_child_id, c.org_child_name
     FROM     jn AS p JOIN org_name AS c
     ON       c.org_child_id = p.org_parent_id)
    SELECT   jn.org_parent_id, jn.org_parent_name
    ,        jn.org_child_id, jn.org_child_name
    FROM     jn
    ORDER BY 1 DESC;