Put MySQL in PATH

After downloading and installing MySQL 8.0.24 yesterday, I opened a command shell. In the command shell, I could access the MySQL Shell (mysqlsh.exe) but not the MySQL Client (mysql.exe). Typing in the following:

    C:\WINDOWS\system32>mysql

It returned:

    'mysql' is not recognized as an internal or external command,
    operable program or batch file.

The MySQL Client (mysql.exe) was installed because MySQL Workbench relies on it. However, the MySQL Microsoft Software Installer (MSI) does not put the mysql.exe file’s directory in the common Windows %PATH% environment variable. You can find the directory that %PATH% needs by opening File Explorer and searching for the mysql.exe file.

The search should return several directories and programs, but the directory you want is:

    C:\Program Files\MySQL\MySQL Server 8.0\bin

You can test it by using the SET command in the Windows command shell, like this:

    SET PATH=C:\Program Files\MySQL\MySQL Server 8.0\bin;%PATH%

You can now call the mysql.exe program in your current shell session with the following syntax:

    mysql -uroot -p

You will be prompted for the password and then connected to the database as follows:

    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 19
    Server version: 8.0.24 MySQL Community Server - GPL

    Copyright (c) 2000, 2021, Oracle and/or its affiliates.

    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    mysql>

Unfortunately, the SET command only sets the %PATH% environment variable in the current session. You can set the system %PATH% environment variable globally by following these steps:

- In Search, search for and then select: System (Control Panel)

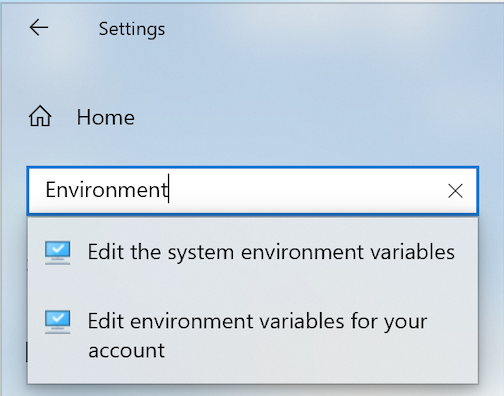

- In Settings dialog enter “Environment” in the search box and it will display:

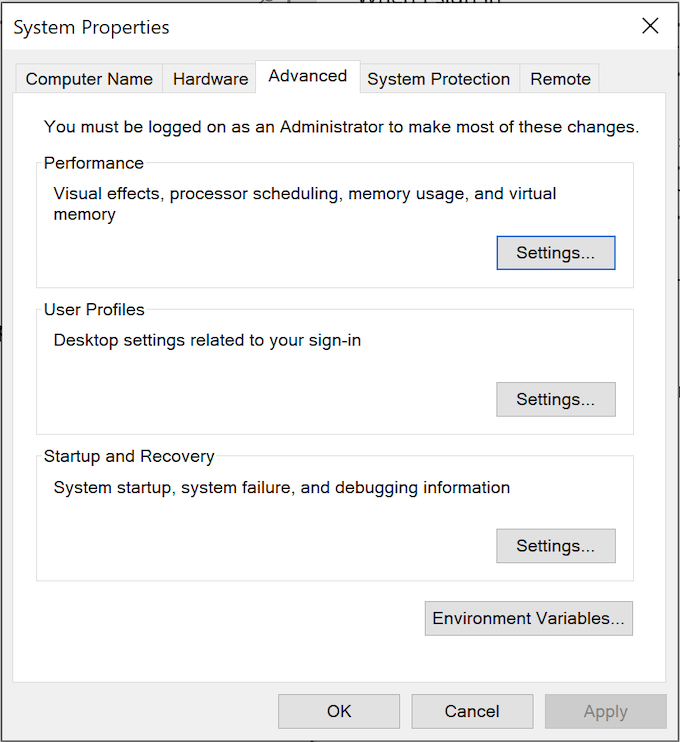

- Chose “Edit the system environment variables” option. You will see the following “System Properties” dialog:

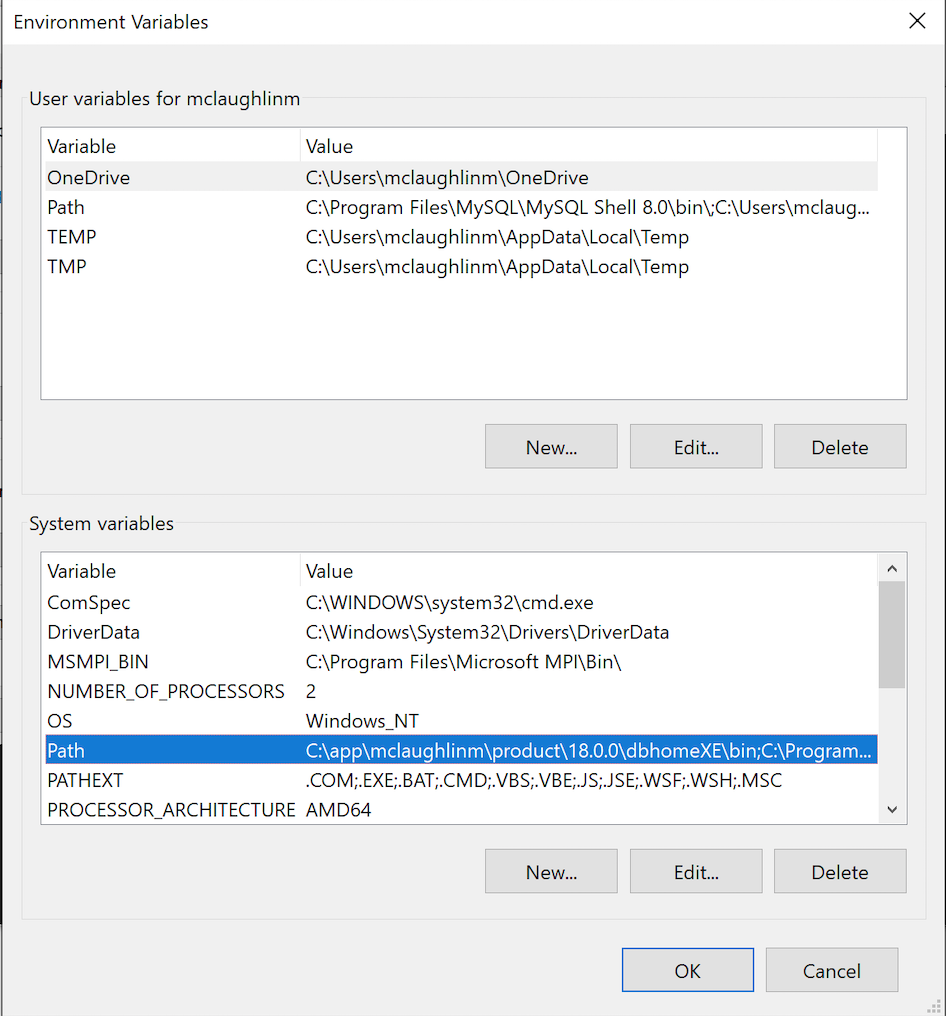

- Click the “Environment Variable” button to display the “Environment Variables” dialog. Click on the Path system variable before clicking the Edit button beneath.

- Click the “New” button and enter “

C:\Program Files\MySQL\MySQL Server 8.0\bin, and click the “OK” button. The next time you query the computer system’s%PATH%environment variable, it will show you the list of path locations that the operating system looks at for command files. It’s actually stored as a semicolon-delimited list in Windows 10 (and, as a colon-delimited list in Linux or Unix).
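Since the path is only a delimited list of directories, you can check which entry (if any) holds a program. The following is a minimal Python sketch of that lookup, not how Windows itself resolves commands; the find_on_path name is hypothetical, and os.pathsep is Python's name for the platform delimiter (a semicolon on Windows, a colon on Linux or Unix).

```python
import os


def find_on_path(program, path=None, sep=os.pathsep):
    """Return the first directory in a PATH-style string that holds program.

    find_on_path is a hypothetical helper written for illustration only.
    """
    if path is None:
        path = os.environ.get("PATH", "")
    # Split the delimited list and test each directory in order.
    for directory in path.split(sep):
        if directory and os.path.isfile(os.path.join(directory, program)):
            return directory
    return None
```

On Windows, calling find_on_path("mysql.exe") in a new command shell should return the MySQL bin directory once the system variable is set, and None before that. Python's standard library also offers shutil.which() for the same kind of check.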

The next time you open a command shell, the %PATH% environment variable will find the mysql.exe program. As always, I hope these instructions help the reader.

MongoDB Two Step

Sometimes a bit of humor helps with a new topic. Creating a database in MongoDB is a two-step process, like the Texas Two-Step (a nickname for a country/western two-step danced in common time). A Texas Two-Step is a one-two, one-two shuffle, which is like the two-step process for creating a MongoDB database.

While databases in MongoDB are a multiuser sandbox like a relational database, you can’t simply create them and grant them privileges. You must first USE the database and then put a collection (the equivalent of a table in MySQL) in it. This blog post shows you how to create a MongoDB play database with an actors collection. It shows you the commands to create a database with one collection in it and how to verify its existence.

You connect through the mongo shell, like:

    mongo

The first step requires you to connect to the play database even though it doesn’t exist. You do that by typing:

    > use play

You can verify you’re in the play database by typing the db command, which the mongo shell treats as an expression. It returns play, as the name of the current database.

However, when you call the shell show dbs helper:

    > show dbs

It displays only the default databases:

    admin   0.000GB
    config  0.000GB
    local   0.000GB

You should also note that the play database still doesn’t exist when you run the JavaScript equivalent to the shell show dbs helper:

    > db.getMongo().getDBs()

It returns the following list of databases, which excludes the not-yet-created play database:

    {
      "databases" : [
        {
          "name" : "admin",
          "sizeOnDisk" : 32768,
          "empty" : false
        },
        {
          "name" : "config",
          "sizeOnDisk" : 61440,
          "empty" : false
        },
        {
          "name" : "local",
          "sizeOnDisk" : 81920,
          "empty" : false
        }
      ],
      "totalSize" : 176128,
      "ok" : 1
    }

You create an actors collection (the equivalent of a relational table) with the following syntax, where db maps to the play database that you’re using. Note that you must insert at least one document to begin a document collection. While you can use the insert method, you should use either the newer insertOne() or insertMany() methods.

    > db.actors.insertOne({"actor" : {"first_name" : "Chris", "last_name" : "Pine"}, "age" : 40 })

A new call to the shell show dbs helper:

    > show dbs

displays your new play database:

    admin   0.000GB
    config  0.000GB
    local   0.000GB
    play    0.000GB

You can add two more documents (the equivalent of rows in a relational database) with the insertMany() method:

    > db.actors.insertMany([{"actor" : {"first_name" : "Chris", "last_name" : "Evans"}, "age" : 39 }
                           ,{"actor" : {"first_name" : "Chris", "last_name" : "Pratt"}, "age" : 41 }])

A quick word to the JavaScript novices out there. Don’t forget the square brackets ([{},{},...]) in the insertMany() method call or you’ll get an error like this:

    2021-04-12T16:20:13.237-0600 E QUERY    [js] TypeError: documents.map is not a function :
    DBCollection.prototype.insertMany@src/mongo/shell/crud_api.js:295:1
    @(shell):1:1

You call the shell show collections helper or the db.getCollectionNames() JavaScript function to display the collections in the play database. The show collections helper displays a list of collections, and db.getCollectionNames() returns a JavaScript array of collection names.

If you’re like me, Mongo’s db convention feels a bit risky because I could do something in the wrong database. So, I put the following function into my .mongorc.js (the MongoDB resource file):

    prompt = function() {
      var dbName = db;
      return dbName + "> ";
    }

It ensures you will see the current database name to the left of the prompt (“>”), like:

    play>

You can query the documents from the actors collection with the following:

    play> db.actors.find().pretty()

It returns:

    {
      "_id" : ObjectId("6074c692813c5a85db9cc9df"),
      "actor" : {
        "first_name" : "Chris",
        "last_name" : "Pine"
      },
      "age" : 40
    }
    {
      "_id" : ObjectId("6074c7ea813c5a85db9cc9e0"),
      "actor" : {
        "first_name" : "Chris",
        "last_name" : "Evans"
      },
      "age" : 39
    }
    {
      "_id" : ObjectId("6074c7ea813c5a85db9cc9e1"),
      "actor" : {
        "first_name" : "Chris",
        "last_name" : "Pratt"
      },
      "age" : 41
    }

As always, I hope this helps those looking for a solution.

MongoDB Script Test

There are many ways to test and edit files. A lot of developers only use their favorite Integrated Development Environment (IDE), but I find testing script files within the scope of a pipelined set of scripts much faster.

The ability to edit a JavaScript file from within the mongo shell would be nice but, unfortunately, it doesn’t exist. You can, however, edit a complex variable with a mechanism quite like the one in the Oracle Database. Rather than leave you hanging on how to edit a complex variable in the mongo shell, here are the steps before launching into how to test your JavaScript files:

- Set the EDITOR variable. You can enter it manually during any connection to the mongo shell or put it in your .mongorc.js configuration file, like this in Fedora with the fully qualified filename:

      EDITOR="/usr/bin/vim"

- Let’s say you have a stooges array variable that contains “Moe”, “Curly”, and “Larry” and you want to add “Shemp” to the variable. You can edit the stooges array variable with vi and add “Shemp” to it by typing edit and the stooges variable name.

      edit stooges

- Then, you can test the stooges array variable’s new values:

      stooges

- It returns the following:

      [ "Moe", "Curly", "Shemp", "Larry" ]

Unfortunately, these changes to the demo array variable will be lost after you break the connection. While the edit command does afford a quick test case, you should make lasting changes in the external JavaScript file. Then, the change is there the next time you access the resource file.

Here’s my quick edit and test script technique for MongoDB from your present working directory:

- Assume you create a compliment.js test file, like:

      /* Declare an array variable and random index value. */
      var compliment = ["Bashful","Doc","Dopey","Grumpy","Happy","Sleepy","Sneezy"];
      var index = Math.floor(Math.random() * compliment.length);

      /* Print a welcome message. */
      print("Hello, " + compliment[index] + ".");

- You can edit the compliment.js file with vi and test the script interactively from the present working directory.

  - You can edit the file with the following syntax:

        vi compliment.js

  - Then, you can test the compliment.js file:

        mongo --nodb --norc < compliment.js

  - The --nodb option instructs MongoDB to not connect to a database and the --norc option instructs MongoDB to not load your .mongorc.js file. Effectively, disabling the database connection and the loading of the MongoDB resource file (.mongorc.js) lets you test your code in MongoDB’s JavaScript shell unencumbered by any overhead from the MongoDB server. The foregoing script returns the following:

        MongoDB shell version v4.0.19
        Hello, Sneezy.
        bye

  - If you have the desired outcome, you’re done. However, if you need further changes, you repeat the process.

As always, I hope this helps those looking for a quick way to accomplish a task.

MySQL Script Test

There are many ways to test and edit files. A lot of developers only use their favorite Integrated Development Environment (IDE), but I find testing script files within the scope of a pipelined set of scripts much faster.

The ability to edit a script from within the MySQL Command-Line Interface (CLI) or MySQL Shell would be nice but, unfortunately, it doesn’t exist. You can always use a subshell to edit a file or list files in the present working directory, like:

    mysql> \! vi task.sql

I prefer to test at the OS level while leveraging the up-arrow key for command history. Here’s my quick edit and test script technique from your present working directory:

- Assume you create a task.sql test file, like:

      SELECT user() AS "Current User"\G

- You can edit with vi or emacs and test the script interactively from the present working directory.

  - You can edit the file with the following syntax:

        vi task.sql

  - Then, you can test the task.sql file:

        mysql -ustudent -p -Dstudentdb < task.sql

  - It returns the following:

        Enter password:
        *************************** 1. row ***************************
        Current User: student@localhost

  - If you have the desired outcome, you’re done. However, if you need further changes, you repeat the process.

As always, I hope this helps those looking for a quick way to accomplish a task.

MySQL & macOS Silicon

It’s a problem for my students who purchased the new Apple hardware that uses Apple silicon because they can’t install a Docker MySQL instance. However, there is a Homebrew formula that works on macOS Big Sur and the new Apple silicon. It supports:

- Intel Silicon: macOS Big Sur, Catalina, and Mojave

- Apple Silicon: macOS Big Sur

The Homebrew Formula does have conflicts that you may need to avoid. It is a solution for those with the new Apple silicon.

As always, I hope this helps those looking for a solution.

MySQL Transaction Scope

ACID transactions are a basic feature of SQL’s individual Data Manipulation Language (DML) commands, like the INSERT, UPDATE, and DELETE statements. Transactions across two or more tables are a natural extension of the ACID compliance features provided by DML commands. However, they require a structured programming approach, like a stored procedure or an API implemented in an imperative language.

Surprisingly, transaction management wasn’t covered well in Alan Beaulieu’s Learning SQL because he only provided pseudocode logic. While I thought troubleshooting some broken MySQL SQL/PSM logic would be a good learning experience for students, it wasn’t. So, I wrote this sample code to show how to achieve an all-or-nothing transaction across four tables.

The code for this example on transaction management lets you perform the important tasks necessary to effect transaction management:

- You must disable autocommit in the scope of the session.

- You must use an imperative programming language like SQL/PSM or Python, et cetera.

- You must identify an error thrown in a series of DML statements, roll back all completed work, and exit the scope of the program unit.

This SQL defines the four tables:

    /* Drop and create four tables. */
    DROP TABLE IF EXISTS one, two, three, four;
    CREATE TABLE one   ( id int primary key auto_increment, msg varchar(10));
    CREATE TABLE two   ( id int primary key auto_increment, msg varchar(10));
    CREATE TABLE three ( id int primary key auto_increment, msg varchar(10));
    CREATE TABLE four  ( id int primary key auto_increment, msg varchar(10));

Unfortunately, there’s no way to simplify transaction management from the MySQL Command-Line Interface (CLI) because you need to build the logic that manages success and failure. It requires that you create a procedure using MySQL’s SQL/PSM (Persistent Stored Modules) or another imperative programming language. You might wonder why you can’t just write an anonymous block program, like you can in other stored procedural languages. The answer is simple. You can’t write anonymous blocks in MySQL’s SQL/PSM because it adheres to ANSI SQL-2003 (or more accurately ISO/IEC 9075-4:2003).

The following code block:

- Conditionally drops the locking() procedure.

- Sets the default semicolon (;) delimiter to a double-dollar ($$), which lets you use the semicolon as statement and block terminators.

- Declares a locking() procedure with the following:

  - Sets a parameter list with four IN-mode parameters.

  - Declares an EXIT handler that undoes any writes before an error in a sequence of DML commands, like the INSERT, UPDATE, or DELETE statements. The EXIT handler then aborts completion of the rest of the procedure. (MySQL 13.6.7.2 Declare … Handler Statement)

  - Disables autocommit in the scope of the session.

  - Starts a transaction context and inserts data into four tables as a transaction. The EXIT handler picks up processing when one of the INSERT statements fails with a 1406 error code. The 1406 error code represents an error that occurs because the data is too long for a column’s width.

  - When all elements of the procedure complete, you commit the work.

The code follows:

    /* Conditionally drop procedure. */
    DROP PROCEDURE IF EXISTS locking;

    /* Set delimiter to $$ to allow ; inside the procedure. */
    DELIMITER $$

    /* Create a transaction procedure. */
    CREATE PROCEDURE locking(IN pv_one   varchar(10)
                            ,IN pv_two   varchar(10)
                            ,IN pv_three varchar(10)
                            ,IN pv_four  varchar(10))
    BEGIN
      /* Declare an EXIT Handler when a string is too long
         for a column. Undo all prior writes with a ROLLBACK
         statement. */
      DECLARE EXIT HANDLER FOR 1406
      BEGIN
        ROLLBACK;
      END;

      /* Disable autocommit. */
      SET AUTOCOMMIT=0;

      /* Start transaction scope. */
      START TRANSACTION;

      /* A series of INSERT statements. */
      INSERT INTO one (msg) VALUES (pv_one);
      INSERT INTO two (msg) VALUES (pv_two);
      INSERT INTO three (msg) VALUES (pv_three);
      INSERT INTO four (msg) VALUES (pv_four);

      /* Commit transaction set. */
      COMMIT;
    END;
    $$

    /* Reset delimiter to ; for SQL statements. */
    DELIMITER ;

The next block tests the locking() procedure. The first and third calls are successful but the second one fails because the third parameter is too long for the msg column in the three table. The error triggers the EXIT handler in the locking() procedure.

    /* Call locking procedure. */
    CALL locking('Donald','Goofy','Mickey','Pluto');
    CALL locking('Squirrel','Chipmunk','Monkey business','Raccoon');
    CALL locking('Curly','Larry','Moe','Shemp');

The query block below:

    /* Select from tables, which should hold only the rows
       from the first and third calls. */
    SELECT * FROM one;
    SELECT * FROM two;
    SELECT * FROM three;
    SELECT * FROM four;

Returns the following, which shows only the first and third test cases succeed:

    +----+--------+
    | id | msg    |
    +----+--------+
    |  1 | Donald |
    |  2 | Curly  |
    +----+--------+
    2 rows in set (0.01 sec)

    +----+-------+
    | id | msg   |
    +----+-------+
    |  1 | Goofy |
    |  2 | Larry |
    +----+-------+
    2 rows in set (0.00 sec)

    +----+--------+
    | id | msg    |
    +----+--------+
    |  1 | Mickey |
    |  2 | Moe    |
    +----+--------+
    2 rows in set (0.00 sec)

    +----+-------+
    | id | msg   |
    +----+-------+
    |  1 | Pluto |
    |  2 | Shemp |
    +----+-------+
    2 rows in set (0.00 sec)
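The same all-or-nothing pattern can be driven from an imperative language instead of SQL/PSM. Here is a minimal Python sketch of the idea. It swaps in the standard library's sqlite3 module and an in-memory SQLite database so it runs without a MySQL server, which means it is not the MySQL procedure above. SQLite doesn't enforce varchar(10), so a CHECK constraint is my assumed stand-in for MySQL's 1406 error, and the locking() and demo() functions are hypothetical names that simply mirror the procedure.

```python
import sqlite3

TABLES = ("one", "two", "three", "four")


def create_tables(conn):
    """Create the four tables; the CHECK constraint stands in for varchar(10)."""
    for name in TABLES:
        conn.execute(f"DROP TABLE IF EXISTS {name}")
        conn.execute(
            f"CREATE TABLE {name} ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " msg TEXT CHECK (length(msg) <= 10))"
        )


def locking(conn, *msgs):
    """Insert one row per table; commit all four rows or roll back all of them."""
    try:
        conn.execute("BEGIN")
        for name, msg in zip(TABLES, msgs):
            conn.execute(f"INSERT INTO {name} (msg) VALUES (?)", (msg,))
        conn.execute("COMMIT")
        return True
    except sqlite3.IntegrityError:
        # Assumed analog of the EXIT handler: undo prior writes and stop.
        conn.execute("ROLLBACK")
        return False


def demo():
    """Run the three test calls and return their outcomes and the table rows."""
    # isolation_level=None leaves transaction control to the explicit statements.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    create_tables(conn)
    results = [
        locking(conn, "Donald", "Goofy", "Mickey", "Pluto"),
        locking(conn, "Squirrel", "Chipmunk", "Monkey business", "Raccoon"),
        locking(conn, "Curly", "Larry", "Moe", "Shemp"),
    ]
    rows = {
        name: [r[0] for r in conn.execute(f"SELECT msg FROM {name} ORDER BY id")]
        for name in TABLES
    }
    conn.close()
    return results, rows
```

Calling demo() runs the same three test cases. The second call fails on the third table, so none of its four values should remain in any table.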

As always, I hope this helps those trying to write transactions across multiple tables.

Oxygen XML Editor

Somebody asked me about how they could convert an XML file to a CSV file to upload into MySQL. They were asking the question based on an old Convert XML to CSV blog post from 2008. Amazing though that is, I had to explain the process no longer requires manual tasks, like calling Java files from the Apache XML Project. All they needed to do was use the Oxygen XML Editor, which is why I wrote this blog post.

For example, I had them use the same sample XML file from the old blog post (shown below) with one change. The encoding value needs to change from latin1 (ISO-8859-1) to Unicode (UTF-8). Then, they should put it into a local Windows directory (mine went into the C:\Data directory).

    <?xml version="1.0" encoding="UTF-8"?>
    <character>
      <name>
        <role>Indiana Jones</role>
        <actor>Harrison Ford</actor>
        <part>protagonist</part>
        <film>Indiana Jones and Raiders of the Lost Ark</film>
        <film>Indiana Jones and the Temple of Doom</film>
        <film>Indiana Jones and the Last Crusade</film>
        <film>Indiana Jones and the Kingdom of the Crystal Skull</film>
      </name>
      <name>
        <role>Wilhelmina Scott</role>
        <actor>Kate Capshaw</actor>
        <part>support</part>
        <film>Indiana Jones and the Temple of Doom</film>
      </name>
      <name>
        <role>Marion Ravenwood</role>
        <actor>Karen Allen</actor>
        <part>support</part>
        <film>Indiana Jones and Raiders of the Lost Ark</film>
        <film>Indiana Jones and the Kingdom of the Crystal Skull</film>
      </name>
      <name>
        <role>Elsa Schneider</role>
        <actor>Alison Doody</actor>
        <part>support</part>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Short Round</role>
        <actor>Jonathan Ke Quan</actor>
        <part>support</part>
        <film>Indiana Jones and the Temple of Doom</film>
      </name>
      <name>
        <role>Sallah</role>
        <actor>Jonn Rhys-Davies</actor>
        <part>support</part>
        <film>Indiana Jones and Raiders of the Lost Ark</film>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Professor Henry Jones</role>
        <actor>Sean Connery</actor>
        <part>support</part>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Henry "Mutt" Williams</role>
        <actor>Shia LaBeouf</actor>
        <part>support</part>
        <film>Indiana Jones and the Kingdom of the Crystal Skull</film>
      </name>
      <name>
        <role>Marcus Brody</role>
        <actor>Denholm Elliott</actor>
        <part>support</part>
        <film>Indiana Jones and Raiders of the Lost Ark</film>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Amrish Puri</role>
        <actor>Mola Ram</actor>
        <part>antagonist</part>
        <film>Indiana Jones and the Temple of Doom</film>
      </name>
      <name>
        <role>Rene Belloq</role>
        <actor>Belloq</actor>
        <part>antagonist</part>
        <film>Indiana Jones and Raiders of the Lost Ark</film>
      </name>
      <name>
        <role>Walter Donovan</role>
        <actor>Julian Glover</actor>
        <part>antagonist</part>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Colonel Vogel</role>
        <actor>Michael Bryne</actor>
        <part>antagonist</part>
        <film>Indiana Jones and the Last Crusade</film>
      </name>
      <name>
        <role>Irina Spalko</role>
        <actor>Cate Blanchett</actor>
        <part>antagonist</part>
        <film>Indiana Jones and the Kingdom of the Crystal Skull</film>
      </name>
    </character>

Then, I had them copy the following Extensible Stylesheet Language Transformations (XSLT) file into the same C:\Data directory with the encoding value changed from latin1 to Unicode:

    <?xml version="1.0" encoding="UTF-8"?>
    <xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
      <xsl:template match="/">
        <!-- This loops through the branch when a sibling meets a condition. -->
        <xsl:for-each select="character/name[film='Indiana Jones and the Last Crusade']">
          <!-- Sorts based on the value in the "role" element. -->
          <xsl:sort select="role" />
          <!-- Eliminates anyone that has a "part" element value of "antagonist". -->
          <xsl:if test="part != 'antagonist'">
            <!-- An apostrophe before the value. -->
            <xsl:text>'</xsl:text>
            <xsl:value-of select="role"/>
            <!-- An apostrophe followed by a comma -->
            <xsl:text>',</xsl:text>
            <xsl:text>'</xsl:text>
            <xsl:value-of select="actor"/>
            <xsl:text>',</xsl:text>
            <xsl:text>'</xsl:text>
            <xsl:value-of select="film"/>
            <!-- An apostrophe followed by a line return -->
            <xsl:text>'&#10;</xsl:text>
          </xsl:if>
        </xsl:for-each>
      </xsl:template>
    </xsl:stylesheet>

Open or launch the Oxygen XML Editor and do these steps:

- Create a new Project called character.

- Create the C:\Data\test.xml and C:\Data\convert.xsl files in a C:\Data directory.

- Open the C:\Data\test.xml and C:\Data\convert.xsl files inside the Oxygen XML Editor.

- Click on the convert.xsl file tab before clicking on the Configure Transformation Scenario(s) button. The button looks like a red wrench with a small red arrow to the bottom right.

- After launching the Configure Transformation Scenario(s) dialog, click the Edit button in the dialog box and launch the Edit Scenario dialog.

- Enter file:/C:/Data/test.xml (use the file chooser if you don’t want to type it) in the XML URL field in the Edit Scenario dialog.

- Click the OK button to close the Edit Scenario dialog and the Apply associated button to close the Configure Transformation Scenario(s) dialog.

- Click the Apply Transformation Scenario button, which is the red arrow button. It will transform the XML document into a result pane at the bottom.

- Select All (or Ctrl+A) in the result panel and right click on that selected area to launch a context-sensitive menu. In that menu, click the Save button to launch a file chooser that will let you save your results.

If you know how to write XSLT, this is simple; if you don’t, it might take a little time to find a working example on the Internet. Better yet, check out w3schools for the XSLT documentation or tutorials point’s Learn XSLT website. You can see how to upload the CSV file into MySQL on this older blog post.

In Linux, you can make this conversion using the Command-Line Interface (CLI) by using the xsltproc program. Assume the XML file is test.xml and the XSLT file is tocsv.xsl, then you can generate the Comma-Separated Values file with this syntax:

    xsltproc tocsv.xsl test.xml > text.csv
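If you don’t have an XSLT processor handy, the same filter, sort, and quote logic can be approximated in a few lines of Python. This is a rough sketch using only the standard library, not a replacement for the stylesheet. The to_csv name and the abbreviated SAMPLE document are hypothetical, the sort is a plain string sort, and unlike the stylesheet the csv module doubles any apostrophe found inside a value.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Abbreviated, hypothetical sample in the same shape as the test.xml file.
SAMPLE = """<character>
  <name><role>Sallah</role><actor>Jonn Rhys-Davies</actor><part>support</part>
    <film>Indiana Jones and Raiders of the Lost Ark</film>
    <film>Indiana Jones and the Last Crusade</film></name>
  <name><role>Elsa Schneider</role><actor>Alison Doody</actor><part>support</part>
    <film>Indiana Jones and the Last Crusade</film></name>
  <name><role>Walter Donovan</role><actor>Julian Glover</actor><part>antagonist</part>
    <film>Indiana Jones and the Last Crusade</film></name>
  <name><role>Short Round</role><actor>Jonathan Ke Quan</actor><part>support</part>
    <film>Indiana Jones and the Temple of Doom</film></name>
</character>"""


def to_csv(xml_text, film="Indiana Jones and the Last Crusade"):
    """Return apostrophe-quoted CSV rows for non-antagonists in the given film."""
    root = ET.fromstring(xml_text)
    rows = []
    for name in root.findall("name"):
        films = [f.text for f in name.findall("film")]
        if film in films and name.findtext("part") != "antagonist":
            # Like value-of in XSLT 1.0, keep only the first film element.
            rows.append((name.findtext("role"), name.findtext("actor"), films[0]))
    rows.sort(key=lambda row: row[0])  # sort on the role value
    out = io.StringIO()
    writer = csv.writer(out, quotechar="'", quoting=csv.QUOTE_ALL,
                        lineterminator="\n")
    writer.writerows(rows)
    return out.getvalue()
```

Calling to_csv(SAMPLE) returns one apostrophe-quoted line per qualifying character, which you can write to a file before uploading it into MySQL.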

As always, I hope this helps those looking for a simple solution.

PL/SQL Inheritance Failure

PL/SQL is a great programming language as far as it goes but it lacks true type inheritance for its collections. While you can create an object type and subtype, you can’t work with collections of those types the same way. PL/SQL object type inheritance, unlike the Java class hierarchy and parallel array class hierarchy, only supports a class hierarchy. Effectively, that means:

- You can pass a subtype as a call parameter, or argument, to a parent data type in a function, procedure, or method signature, but

- You can’t pass a collection of a subtype as a call parameter, or argument, to a collection of parent type in a function, procedure, or method signature.

The limitation occurs because collections have their own data type, which is fixed when you create them. Worse yet, because Oracle has never seen fit to fix their two underlying code trees (23 years and counting since Oracle 8i), you have two types of collections using two distinct C/C++ libraries. You define collections of Attribute Data Types (ADTs) when you create a collection of a standard scalar data type, like NUMBER, VARCHAR2, or DATE. You define a collection of User-Defined Data Types (UDTs) when you create a collection of a SQL UDT or PL/SQL-only RECORD data type. The former uses one C/C++ library and the latter another.

Now, Oracle even makes the differences between Java and PL/SQL more complex because it treats collections known as tables, really lists in most programming languages, differently than varrays, or arrays. You create a TABLE collection, or list, when you create a table of a scalar or UDT data type. There are two options when you create these object types, and they are:

- You create an empty collection with a no element constructor, which means you’ll need to allocate memory before assigning element values later in your program.

- You create a populated collection with a comma-delimited list of elements.

Both approaches give you a list of elements with a densely populated index. A “densely populated index” is Oracle’s jargon for how they characterize a 1-based sequence of integers without any gaps (e.g., 1, 2, 3, …). The initial construction works the same way whether you create a TABLE or VARRAY collection type. Unfortunately, after you’ve built the collection, behaviors change. If you use Oracle’s Collection API to delete one or more items from a TABLE collection type, you create gaps in the index’s sequence of values. That means you must use special logic to navigate across a TABLE collection type to ensure it doesn’t fail when encountering a gap in the numeric sequence.

For example, here’s a FOR-LOOP without the logic to guarantee an uninterrupted set of sequence values incrementing by a counter of 1 element at a time:

     1  DECLARE
     2    /* Create a local table collection. */
     3    TYPE list IS TABLE OF VARCHAR2(10);
     4
     5    /* Statically allocate memory and assign values to four
     6       elements. */
     7    lv_list LIST := list('Moe','Shemp','Larry','Curly');
     8  BEGIN
     9    /* Remove the second element, Shemp, from the collection
    10       of variable length strings. */
    11    lv_list.DELETE(2);
    12
    13    /* Loop through the target with a for loop, which depends
    14       on densely populated index values. */
    15    FOR i IN 1..lv_list.COUNT LOOP
    16      dbms_output.put_line('['||lv_list(i)||']');
    17    END LOOP;
    18  END;
    19  /

The program fails when it tries to read the second element of the table collection, which was previously removed. It raises the following error message after printing the first element of the table collection:

    [Moe]
    DECLARE
    *
    ERROR at line 1:
    ORA-01403: no data found
    ORA-06512: at line 16

Conveniently, Oracle’s Collection API provides an EXISTS method that we can use to check for the presence of an index’s value. Modifying line 16 by wrapping it in an IF-statement fixes one problem but identifies another:

    15    FOR i IN 1..lv_list.COUNT LOOP
    16      IF lv_list.EXISTS(i) THEN
    17        dbms_output.put_line('['||lv_list(i)||']');
    18      END IF;
    19    END LOOP;

The program no longer fails on a missing index value, or index gap, but it returns fewer lines of output than you might expect.

That’s because the Oracle Collection API’s COUNT method returns the number of elements currently allocated in memory not the number of original elements. We learn that when we deleted the second element, Oracle deleted the memory allocated for it as well. This is the type of behavior you might expect for a singly linked list. It prints:

    [Moe]
    [Larry]

One more change is required to count past the gap and up to the highest index value. On line 15, change the COUNT method call to the LAST method call, which returns the highest index value.

    15    FOR i IN 1..lv_list.LAST LOOP
    16      IF lv_list.EXISTS(i) THEN
    17        dbms_output.put_line('['||lv_list(i)||']');
    18      END IF;
    19    END LOOP;

It now prints the three stooges we would expect to see:

    [Moe]
    [Larry]
    [Curly]

Realistically, a FOR-LOOP is not the best control structure for a collection. You should use a WHILE-LOOP and treat the incrementing value as an iterator rather than a sequence index value. An iterator doesn’t worry about gaps in the sequence; it simply moves to the next element in the singly linked list. Here’s an example that uses the iterator approach with a WHILE-LOOP:

     1  DECLARE
     2    /* Create a local table collection. */
     3    TYPE list IS TABLE OF VARCHAR2(10);
     4
     5    /* Statically allocate memory and assign values to four
     6       elements. */
     7    lv_list LIST := list('Moe','Shemp','Larry','Curly');
     8
     9    /* Declare a current index variable. */
    10    current NUMBER;
    11  BEGIN
    12    /* Remove the second element, Shemp, from the collection
    13       of variable length strings. */
    14    lv_list.DELETE(2);
    15
    16    /* Loop through the target with a while loop, which doesn't
    17       depend on densely populated index values by setting the
    18       starting index value and increment as if with an
    19       iterator. */
    20    current := lv_list.FIRST;
    21    WHILE NOT (current > lv_list.LAST) LOOP
    22      dbms_output.put_line('['||lv_list(current)||']');
    23      current := lv_list.NEXT(current);
    24    END LOOP;
    25  END;
    26  /

The iterator approach prints the elements as:

    [Moe]
    [Larry]
    [Curly]

You can reverse the process with the following changes to lines 20-24:

    20    current := lv_list.LAST;
    21    WHILE NOT (current < lv_list.FIRST) LOOP
    22      dbms_output.put_line('['||lv_list(current)||']');
    23      current := lv_list.PRIOR(current);
    24    END LOOP;

It prints the list backwards:

    [Curly]
    [Larry]
    [Moe]
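If the difference between counting and iterating is hard to picture, a small model outside the database may help. The following Python sketch uses a dict keyed by a 1-based index as a stand-in for a sparsely populated TABLE collection. It is only an analogy, not Oracle’s implementation, and the naive_walk, next_index, and walk names are hypothetical.

```python
def naive_walk(coll):
    """Counted loop over 1..COUNT; fails on a gap, like the first FOR-LOOP."""
    return [coll[i] for i in range(1, len(coll) + 1)]


def next_index(coll, current):
    """Return the next existing index after current, or None (like NEXT)."""
    later = [i for i in coll if i > current]
    return min(later) if later else None


def walk(coll):
    """Visit the existing elements in index order, skipping any gaps."""
    values = []
    current = min(coll) if coll else None  # like FIRST
    while current is not None:
        values.append(coll[current])
        current = next_index(coll, current)
    return values


stooges = {1: "Moe", 2: "Shemp", 3: "Larry", 4: "Curly"}
del stooges[2]  # like lv_list.DELETE(2)
```

After the delete, len(stooges) is 3 while max(stooges) is still 4, which mirrors the difference between the COUNT and LAST methods. Calling naive_walk(stooges) raises a KeyError at index 2, while walk(stooges) visits only the elements that exist.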

Having covered the issues with sparsely populated table collections, those with gaps in the sequence of index values, let’s examine how you must work around PL/SQL’s lack of a parallel array class hierarchy. The solution lies in combining two programming concepts:

- A function to pack the sparsely populated table collection into a densely populated one, and

- A package with overloaded functions that pack different table collections.

To develop the test case, let’s use an ADT collection because it’s the simplest to work with. The following creates a table collection of a thirty-character scalar string:

    CREATE OR REPLACE
      TYPE list IS TABLE OF VARCHAR2(30);
    /

The following pack function takes a table collection of the thirty-character scalar string, checks the collection for missing elements, and packs the existing elements into a densely populated list:

    CREATE OR REPLACE
      FUNCTION pack
      ( pv_list  LIST ) RETURN list IS
        /* Declare a new list. */
        lv_new  LIST := list();
      BEGIN
        /* Read, check, and pack an old list into a new one. */
        FOR i IN 1..pv_list.LAST LOOP
          IF pv_list.EXISTS(i) THEN
            lv_new.EXTEND;
            lv_new(lv_new.COUNT) := pv_list(i);
          END IF;
        END LOOP;
        RETURN lv_new;
      END;
    /

This anonymous block tests the pack function:

    DECLARE
      /* Declare a list value. */
      lv_test  LIST := list('Moe','Shemp','Larry','Curly');
    BEGIN
      /* Remove one element in the middle. */
      lv_test.DELETE(2);

      /* Pack the list of elements into a sequence of values. */
      lv_test := pack(lv_test);

      /* Print the list of elements from the packed list. */
      FOR i IN 1..lv_test.COUNT LOOP
        dbms_output.put_line('['||lv_test(i)||']');
      END LOOP;
    END;
    /

It prints the expected three string values:

    [Moe]
    [Larry]
    [Curly]
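For readers who think better outside PL/SQL, packing amounts to renumbering the surviving elements from 1 without gaps. This short Python sketch shows the idea with a dict as an assumed stand-in for a sparse collection. It is an analogy rather than Oracle’s behavior, and this pack function is a hypothetical twin of the one above.

```python
def pack(coll):
    """Copy the existing elements into a new dict indexed 1..n without gaps."""
    return {new: coll[old] for new, old in enumerate(sorted(coll), start=1)}


sparse = {1: "Moe", 3: "Larry", 4: "Curly"}  # index 2 was deleted
dense = pack(sparse)
```

After the call, dense holds the same three values under the indexes 1, 2, and 3, so a counted loop over it is safe again.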

Now, let’s expand the example to build an overloaded package. The first step requires building a base_t object type and a table collection of the object type, like:

1 2 3 4 5 6 7 8 9 | CREATE OR REPLACE TYPE base_t IS OBJECT ( oid NUMBER ) INSTANTIABLE NOT FINAL; / CREATE OR REPLACE TYPE base_list IS TABLE OF base_t; / |

Next, you create a book_t subtype of the base_t object type and a book_list table collection of the book_t subtype, like:

    CREATE OR REPLACE
      TYPE book_t UNDER base_t
      ( title  VARCHAR2(30)
      , COST   NUMBER);
    /

    CREATE OR REPLACE
      TYPE book_list IS TABLE OF book_t;
    /

We can test the base_t and book_t default constructors with the following SQL*Plus formatting and SQL query:

    COL oid   FORMAT 999
    COL title FORMAT A20
    COL COST  FORMAT 99.99
    SELECT *
    FROM   TABLE(book_list(book_t(1,'Neuromancer',15.30)
                          ,book_t(2,'Count Zero',7.99)
                          ,book_t(3,'Mona Lisa Overdrive',7.99)
                          ,book_t(4,'Burning Chrome',8.89)));

It prints the following output:

     OID TITLE                  COST
    ---- -------------------- ------
       1 Neuromancer           15.30
       2 Count Zero             7.99
       3 Mona Lisa Overdrive    7.99
       4 Burning Chrome         8.89

The following is an overloaded package specification:

    CREATE OR REPLACE
      PACKAGE packer IS

      /* A simple ADT list of strings. */
      FUNCTION pack
      ( pv_list  LIST ) RETURN list;

      /* A UDT list of base objects. */
      FUNCTION pack
      ( pv_list  BASE_LIST ) RETURN base_list;

      /* A UDT list of subtype objects. */
      FUNCTION pack
      ( pv_list  BOOK_LIST ) RETURN book_list;

    END;
    /

After you create the package specification, you need to provide the implementation. This is typical in any programming language that supports an Interface Description Language (IDL). A package body provides the implementation for the package specification. The package body follows:

    CREATE OR REPLACE
      PACKAGE BODY packer IS

      /* A simple ADT list of strings. */
      FUNCTION pack
      ( pv_list  LIST ) RETURN list IS
        /* Declare a new list. */
        lv_new  LIST := list();
      BEGIN
        /* Read, check, and pack an old list into a new one. */
        FOR i IN 1..pv_list.LAST LOOP
          IF pv_list.EXISTS(i) THEN
            lv_new.EXTEND;
            lv_new(lv_new.COUNT) := pv_list(i);
          END IF;
        END LOOP;
        RETURN lv_new;
      END pack;

      /* A UDT list of base objects. */
      FUNCTION pack
      ( pv_list  BASE_LIST ) RETURN base_list IS
        /* Declare a new list. */
        lv_new  BASE_LIST := base_list();
      BEGIN
        /* Read, check, and pack an old list into a new one. */
        FOR i IN 1..pv_list.LAST LOOP
          IF pv_list.EXISTS(i) THEN
            lv_new.EXTEND;
            lv_new(lv_new.COUNT) := pv_list(i);
          END IF;
        END LOOP;
        RETURN lv_new;
      END pack;

      /* A UDT list of subtype objects. */
      FUNCTION pack
      ( pv_list  BOOK_LIST ) RETURN book_list IS
        /* Declare a new list. */
        lv_new  BOOK_LIST := book_list();
      BEGIN
        /* Read, check, and pack an old list into a new one. */
        FOR i IN 1..pv_list.LAST LOOP
          IF pv_list.EXISTS(i) THEN
            lv_new.EXTEND;
            lv_new(lv_new.COUNT) := pv_list(i);
          END IF;
        END LOOP;
        RETURN lv_new;
      END pack;

    END packer;
    /

The test case for the base_list object type is:

    DECLARE
      lv_test  BASE_LIST := base_list(base_t(1),base_t(2)
                                     ,base_t(3),base_t(4));
    BEGIN
      /* Remove one element in the middle. */
      lv_test.DELETE(2);

      /* Pack the list of elements into a sequence of values. */
      lv_test := packer.pack(lv_test);

      /* Print the list of elements from the packed list. */
      FOR i IN 1..lv_test.LAST LOOP
        dbms_output.put_line('['||lv_test(i).oid||']');
      END LOOP;
    END;
    /

It prints the following output:

    [1]
    [3]
    [4]

The test case for the book_list object type is:

    DECLARE
      lv_test  BOOK_LIST := book_list(book_t(1,'Neuromancer',15.30)
                                     ,book_t(2,'Count Zero',7.99)
                                     ,book_t(3,'Mona Lisa Overdrive',7.99)
                                     ,book_t(4,'Burning Chrome',8.89));
    BEGIN
      /* Remove one element in the middle. */
      lv_test.DELETE(2);

      /* Pack the list of elements into a sequence of values. */
      lv_test := packer.pack(lv_test);

      /* Print the list of elements from the packed list. */
      FOR i IN 1..lv_test.LAST LOOP
        dbms_output.put_line(
          '['||lv_test(i).oid||']'
        ||'['||lv_test(i).title||']'
        ||'['||lv_test(i).COST||']');
      END LOOP;
    END;
    /

It prints the following output:

    [1][Neuromancer][15.3]
    [3][Mona Lisa Overdrive][7.99]
    [4][Burning Chrome][8.89]

In conclusion, you would not have to write overloaded functions for every list if PL/SQL supported a class hierarchy with a parallel array class hierarchy, as Java does. Unfortunately, it doesn’t and likely won’t in the future. With the code above, you can pack table collections as a safety measure when they’re passed as parameters to other functions, procedures, or methods.
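As a sketch of that safety measure, a procedure can pack its incoming list into a local copy before reading it. The print_list procedure is a hypothetical name and not part of the original code; it assumes the list type and the packer package created above, and it assumes the caller passes a list with at least one element.

```sql
CREATE OR REPLACE
  PROCEDURE print_list
  ( pv_list  LIST ) IS
    /* Pack a local copy because an IN parameter is read-only. */
    lv_list  LIST := packer.pack(pv_list);
  BEGIN
    /* The packed copy is dense, so a COUNT-bounded loop is safe. */
    FOR i IN 1..lv_list.COUNT LOOP
      dbms_output.put_line('['||lv_list(i)||']');
    END LOOP;
  END;
/
```

The caller can then pass a sparsely populated list without the procedure needing its own EXISTS checks.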

As always, I hope this helps those looking for a solution.

PL/SQL Coupled Loops

This example shows you how to navigate a list with a sparsely populated index. This can occur when an element has been removed after the list was initialized. Unlike Oracle’s VARRAY (array), which always stays densely indexed, a TABLE or list is not re-indexed when you remove one of its elements.

This example also shows you how to couple loops. The outer loop increments, notwithstanding the gap in index values, while the inner loop decrements. The upper range of the inner loop is set by the index value of the outer loop.

The example program uses an abbreviated version of the Twelve Days of Christmas, and I’ve tried to put teaching notes throughout the example file.

    DECLARE
      /* Create a single column collection that is a list of strings
         up to 8 characters in length and another of strings up to
         20 characters in length. */
      TYPE DAY   IS TABLE OF VARCHAR2(8);
      TYPE verse IS TABLE OF VARCHAR2(20);

      /* Create variables that use the user-defined types:
      || =================================================
      ||  1. We give the variables the names lv_day and lv_verse.
      ||  2. We assign a user-defined ADT (Attribute Data Type) collection.
      ||  3. We assign a list of values to the constructor of the list, which
      ||     allocates memory for each item in the comma-delimited list of
      ||     strings.
      */
      lv_day    DAY   := DAY('first','second','third','fourth','fifth');
      lv_verse  VERSE := verse('Partridge','Turtle Doves','French Hen'
                              ,'Calling Birds','Gold Rings');
    BEGIN
      /*
      ||  Remove an element from the list of days, which makes the list
      ||  sparsely indexed. A sparsely indexed list has gaps in the
      ||  sequential index of the list.
      */
      lv_day.DELETE(3);

      /*
      ||  Loop through the list of days:
      ||  ===================================================
      ||  1. A list created by a comma-delimited list is densely populated,
      ||     which means it has no gaps in the sequence of indexes.
      ||  2. A list created by any means that is subsequently accessed
      ||     and has one or more items removed is sparsely populated,
      ||     which means it may have gaps in the sequence of indexes.
      ||  3. A FOR loop anticipates densely populated indexes and fails
      ||     when trying to read a missing index, which is why you should
      ||     use an IF statement to check for the element of a list before
      ||     accessing it.
      ||  4. A COUNT method returns the number of elements allocated memory
      ||     in a list of values and the LAST method returns the highest
      ||     index value. The index value is always an integer for user-defined
      ||     ADT (Attribute Data Type) collections, but may be a string for
      ||     an associative array or a PL/SQL list indexed by a string.
      ||  5. Removing an element from a list does not change the other
      ||     index values, whereas an array (or varray) always stays densely
      ||     indexed, which means a loop bounded by COUNT or LAST may cause
      ||     the same type of error for a list with a missing element.
      */
      FOR i IN 1..lv_day.LAST LOOP
        /*
        ||  Verify the index is valid.
        ||  ====================================================
        ||  You check whether the element is present in the
        ||  list.
        */
        IF lv_day.EXISTS(i) THEN
          /* Print the beginning of the stanza. */
          dbms_output.put_line('On the ['||lv_day(i)||'] of Christmas ...');

          /* Print the song. */
          FOR j IN REVERSE 1..i LOOP
            /* Check if the verse exists. */
            IF lv_verse.EXISTS(j) THEN
              /* All verses above the first one. */
              IF j > 1 THEN
                dbms_output.put_line('- ['||lv_verse(j)||']');
              /* The only verse on the first day. */
              ELSIF i = j THEN
                dbms_output.put_line('- A ['||lv_verse(j)||']'||CHR(10));
              /* The closing verse on every later day. */
              ELSE
                dbms_output.put_line('and a ['||lv_verse(j)||']'||CHR(10));
              END IF;
            END IF;
          END LOOP;
        ELSE
          CONTINUE;
        END IF;
      END LOOP;
    END;
    /
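To make the difference between COUNT and LAST described in the comments concrete, here is a minimal sketch. It assumes server output is enabled in SQL*Plus, and the letters type and lv_list variable are hypothetical names used only for this illustration.

```sql
SET SERVEROUTPUT ON

DECLARE
  /* A local list of five single-character strings. */
  TYPE letters IS TABLE OF VARCHAR2(1);
  lv_list  LETTERS := letters('a','b','c','d','e');
BEGIN
  /* Remove the middle element, which leaves a gap at index 3. */
  lv_list.DELETE(3);

  /* COUNT reports the remaining elements; LAST reports the highest index. */
  dbms_output.put_line('COUNT ['||lv_list.COUNT||']');
  dbms_output.put_line('LAST  ['||lv_list.LAST||']');

  /* EXISTS is the safe way to detect the gap. */
  IF NOT lv_list.EXISTS(3) THEN
    dbms_output.put_line('Index 3 is a gap.');
  END IF;
END;
/
```

After the delete, COUNT is 4 while LAST is still 5, which is why a loop bounded by either value needs the EXISTS check.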

As always, I hope it helps you solve problems in the real world.

MySQL Join Tutorial

Some believe the most important part of SQL is the ability to query data. Queries typically retrieve data by joining many tables together into useful result sets. This tutorial takes the position that visibility into the data helps those new to SQL understand how joins work. To that end, the queries use Common Table Expressions (CTEs) instead of tables.

Default behavior of a JOIN without a qualifying descriptor is not simple because it may return:

- A CROSS JOIN (or Cartesian Product) when there is no ON or USING subclause, or

- An INNER JOIN when you use an ON or USING subclause.

The following query uses JOIN without a qualifier or an ON or USING subclause. It also uses two copies of the single CTE, which is more or less a derived table and the result of a subquery held in memory. This demonstrates the key reason for table aliases: you can put two copies of the same table in memory under different identifiers or labels.

    WITH alpha AS
     (SELECT 'A' AS letter, 130 AS amount
      UNION
      SELECT 'B' AS letter, 150 AS amount
      UNION
      SELECT 'C' AS letter, 321 AS amount)
    SELECT * FROM alpha a JOIN alpha b;

It returns a Cartesian product:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    130 |
    | B      |    150 | A      |    130 |
    | C      |    321 | A      |    130 |
    | A      |    130 | B      |    150 |
    | B      |    150 | B      |    150 |
    | C      |    321 | B      |    150 |
    | A      |    130 | C      |    321 |
    | B      |    150 | C      |    321 |
    | C      |    321 | C      |    321 |
    +--------+--------+--------+--------+
    9 rows in set (0.00 sec)

By adding an ON clause to line 8, the default JOIN keyword returns an INNER JOIN result.

     1  WITH alpha AS
     2   (SELECT 'A' AS letter, 130 AS amount
     3    UNION
     4    SELECT 'B' AS letter, 150 AS amount
     5    UNION
     6    SELECT 'C' AS letter, 321 AS amount)
     7  SELECT * FROM alpha a JOIN alpha b
     8  ON a.letter = b.letter;

It displays results, like:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    130 |
    | B      |    150 | B      |    150 |
    | C      |    321 | C      |    321 |
    +--------+--------+--------+--------+
    3 rows in set (0.00 sec)
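The USING subclause mentioned earlier works the same way when the join column has the same name on both sides. The following sketch is an illustration that is not part of the original tutorial; it rewrites the query above with USING instead of ON.

```sql
WITH alpha AS
 (SELECT 'A' AS letter, 130 AS amount
  UNION
  SELECT 'B' AS letter, 150 AS amount
  UNION
  SELECT 'C' AS letter, 321 AS amount)
SELECT * FROM alpha a JOIN alpha b
USING (letter);
```

It should return the same three rows, but MySQL shows the shared letter column only once, followed by the two amount columns.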

The next example uses two CTEs. One uses letters 'A', 'B', 'C', and 'D' and the other uses letters 'A', 'B', 'C', and 'E'. The letter 'D' only exists in the alpha derived table and the letter 'E' only exists in the beta derived table. The amount column values differ for their respective letters in the two CTE tables.

The basic query below the comma-delimited CTEs joins the alpha and beta derived tables with an INNER JOIN using an ON clause based on the letter column values found in both alpha and beta CTEs.

     1  WITH alpha AS
     2   (SELECT 'A' AS letter, 130 AS amount
     3    UNION
     4    SELECT 'B' AS letter, 150 AS amount
     5    UNION
     6    SELECT 'C' AS letter, 321 AS amount
     7    UNION
     8    SELECT 'D' AS letter, 783 AS amount)
     9  , beta AS
    10   (SELECT 'A' AS letter, 387 AS amount
    11    UNION
    12    SELECT 'B' AS letter, 268 AS amount
    13    UNION
    14    SELECT 'C' AS letter, 532 AS amount
    15    UNION
    16    SELECT 'E' AS letter, 391 AS amount)
    17  SELECT * FROM alpha a INNER JOIN beta b
    18  ON a.letter = b.letter;

The INNER JOIN returns only those rows in alpha and beta CTEs where the letter column values match:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    387 |
    | B      |    150 | B      |    268 |
    | C      |    321 | C      |    532 |
    +--------+--------+--------+--------+
    3 rows in set (0.01 sec)

If you change line 17 from an INNER JOIN to a LEFT JOIN, you return all the rows from the alpha CTE and only those rows from the beta CTE that have a matching letter column value. The new line 17 for a LEFT JOIN is:

    17  SELECT * FROM alpha a LEFT JOIN beta b

It returns the three matching rows plus the one non-matching row from the alpha CTE that is on the left side of the LEFT JOIN operator. You should note that a left outer join puts null values into the beta CTE columns where there is no matching row for the 'D' letter found in the alpha CTE.

The results are shown below:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    387 |
    | B      |    150 | B      |    268 |
    | C      |    321 | C      |    532 |
    | D      |    783 | NULL   |   NULL |
    +--------+--------+--------+--------+
    4 rows in set (0.01 sec)
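A common use of those null values is to find the rows that have no match at all. The following sketch is an illustration rather than part of the original tutorial; it reuses the same two CTEs and adds a WHERE clause that keeps only the alpha rows whose beta side is null.

```sql
WITH alpha AS
 (SELECT 'A' AS letter, 130 AS amount
  UNION
  SELECT 'B' AS letter, 150 AS amount
  UNION
  SELECT 'C' AS letter, 321 AS amount
  UNION
  SELECT 'D' AS letter, 783 AS amount)
, beta AS
 (SELECT 'A' AS letter, 387 AS amount
  UNION
  SELECT 'B' AS letter, 268 AS amount
  UNION
  SELECT 'C' AS letter, 532 AS amount
  UNION
  SELECT 'E' AS letter, 391 AS amount)
SELECT a.letter, a.amount
FROM   alpha a LEFT JOIN beta b
ON     a.letter = b.letter
WHERE  b.letter IS NULL;
```

It should return only the row for the letter 'D' with an amount of 783, because that is the one alpha row without a partner in beta.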

If you change line 17 from a LEFT JOIN to a RIGHT JOIN, you return all the rows from the beta CTE and only those rows from the alpha CTE that have a matching letter column value. The new line 17 for a RIGHT JOIN is:

    17  SELECT * FROM alpha a RIGHT JOIN beta b

It returns the following result set:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    387 |
    | B      |    150 | B      |    268 |
    | C      |    321 | C      |    532 |
    | NULL   |   NULL | E      |    391 |
    +--------+--------+--------+--------+
    4 rows in set (0.00 sec)

MySQL does not support a FULL JOIN operation but you can mimic a full join by combining a LEFT JOIN and RIGHT JOIN with the UNION operator. The UNION operator performs a unique sort operation, which reduces the two copies of matching rows returned by both the left and right join operation to a unique set.

This is the way to write the equivalent of a full join:

    WITH alpha AS
     (SELECT 'A' AS letter, 130 AS amount
      UNION
      SELECT 'B' AS letter, 150 AS amount
      UNION
      SELECT 'C' AS letter, 321 AS amount
      UNION
      SELECT 'D' AS letter, 783 AS amount)
    , beta AS
     (SELECT 'A' AS letter, 387 AS amount
      UNION
      SELECT 'B' AS letter, 268 AS amount
      UNION
      SELECT 'C' AS letter, 532 AS amount
      UNION
      SELECT 'E' AS letter, 391 AS amount)
    SELECT * FROM alpha LEFT JOIN beta
    ON alpha.letter = beta.letter
    UNION
    SELECT * FROM alpha RIGHT JOIN beta
    ON alpha.letter = beta.letter;

It returns one copy of the matching rows, and the non-matching rows from both the alpha and beta CTEs:

    +--------+--------+--------+--------+
    | letter | amount | letter | amount |
    +--------+--------+--------+--------+
    | A      |    130 | A      |    387 |
    | B      |    150 | B      |    268 |
    | C      |    321 | C      |    532 |
    | D      |    783 | NULL   |   NULL |
    | NULL   |   NULL | E      |    391 |
    +--------+--------+--------+--------+
    5 rows in set (0.00 sec)
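Because UNION removes duplicates, it would also collapse rows that are legitimately duplicated in real tables. A hedged alternative sketch, not taken from the original tutorial, keeps every row from the LEFT JOIN and uses UNION ALL to append only the beta rows that have no match in alpha:

```sql
WITH alpha AS
 (SELECT 'A' AS letter, 130 AS amount
  UNION
  SELECT 'B' AS letter, 150 AS amount
  UNION
  SELECT 'C' AS letter, 321 AS amount
  UNION
  SELECT 'D' AS letter, 783 AS amount)
, beta AS
 (SELECT 'A' AS letter, 387 AS amount
  UNION
  SELECT 'B' AS letter, 268 AS amount
  UNION
  SELECT 'C' AS letter, 532 AS amount
  UNION
  SELECT 'E' AS letter, 391 AS amount)
SELECT * FROM alpha a LEFT JOIN beta b
ON a.letter = b.letter
UNION ALL
SELECT * FROM alpha a RIGHT JOIN beta b
ON a.letter = b.letter
WHERE a.letter IS NULL;
```

With these two CTEs it should return the same five rows as the UNION version, since the second query contributes only the unmatched 'E' row.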

A NATURAL JOIN would return no rows because it works by implicitly discovering columns with matching names in both CTEs and then joining on all of them. While the letter column matches rows between the CTEs, the amount column doesn’t hold any matches. The combination of letter and amount columns must match for a NATURAL JOIN operation to return any rows.
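You can check that behavior with the same two CTEs. The query below is a small sketch added for illustration; it behaves like a join with USING (letter, amount) because both column names appear in alpha and beta.

```sql
WITH alpha AS
 (SELECT 'A' AS letter, 130 AS amount
  UNION
  SELECT 'B' AS letter, 150 AS amount
  UNION
  SELECT 'C' AS letter, 321 AS amount
  UNION
  SELECT 'D' AS letter, 783 AS amount)
, beta AS
 (SELECT 'A' AS letter, 387 AS amount
  UNION
  SELECT 'B' AS letter, 268 AS amount
  UNION
  SELECT 'C' AS letter, 532 AS amount
  UNION
  SELECT 'E' AS letter, 391 AS amount)
SELECT * FROM alpha NATURAL JOIN beta;
```

It should return an empty set because no row in alpha shares both its letter and its amount with a row in beta.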

You can also override the cost optimizer and force a left-to-right join order by using the STRAIGHT_JOIN operator.

As always, I hope this helps those looking for a solution with an explanation.