SQL Developer JDK

In my classes, we use a VMware Linux install with SQL Developer. One of my students called me in a panic after an upgrade of packages when SQL Developer failed to launch. The student was astute enough to try running it from the command line where it generates an error like:

    Oracle SQL Developer
    Copyright (c) 2005, 2018, Oracle and/or its affiliates. All rights reserved.
    /opt/sqldeveloper/sqldeveloper/bin/../../ide/bin/launcher.sh: line 954: [: : integer expression expected
    The JDK (/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-0.fc30.x86_64/) is not a valid JDK.
    The JDK was specified by a SetJavaHome directive in a .conf file or by a --setjavahome option.
    Type the full pathname of a JDK installation (or Ctrl-C to quit), the path will be stored in /home/student/.sqldeveloper/19.2.0/product.conf
    Error: Unable to get APP_JAVA_HOME input from stdin after 10 tries

The cause is simple: the SQL Developer package update wiped the SetJavaHome setting in the user’s ~/.sqldeveloper/19.2.0/product.conf file. The fix takes three steps because it’s very likely that the Java packages were also updated. Here’s how to fix it:

- Navigate to the directory where you’ve installed the Java Virtual Machine (JVM) and find the current version of the JVM installed:

    cd /usr/lib/jvm
    ls java*

It will return a set of files, like:

    java
    java-1.8.0
    java-1.8.0-openjdk
    java-1.8.0-openjdk-1.8.0.252.b09-0.fc30.x86_64
    java-openjdk
    jre
    jre-1.8.0
    jre-1.8.0-openjdk
    jre-1.8.0-openjdk-1.8.0.252.b09-0.fc30.x86_64
    jre-openjdk

- Navigate to the directory that holds your user’s product configuration file with this command:

cd ~/.sqldeveloper/19.2.0

- Add the following line to the product.conf file:

    # SetJavaHome /path/jdk
    SetJavaHome /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.252.b09-0.fc30.x86_64/

Now, you should be able to run it from the command line. The shortcut icon should also work if one was installed. Also, don’t forget to update your $JAVA_HOME variable in the master Bash resource file, or your local user’s .bashrc files.
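If you have to repeat this fix after every package update, the steps above could be scripted roughly as follows. This is only a sketch: the `set_java_home` function name is hypothetical (it is not part of SQL Developer), and it assumes the only directive that needs to change is an uncommented `SetJavaHome` line.

```shell
# Sketch only: set_java_home is a hypothetical helper, not part of SQL Developer.
# Usage: set_java_home <path to product.conf> <path to JDK directory>
set_java_home() (
  conf="$1"
  jdk="$2"

  # Refuse to record a path that does not exist.
  if [ ! -d "$jdk" ]; then
    echo "Not a directory: $jdk" >&2
    exit 1
  fi

  # Make sure the configuration file exists before rewriting it.
  mkdir -p "$(dirname "$conf")"
  touch "$conf"

  # Copy every line except an active SetJavaHome directive, then
  # append the new directive. Commented examples are kept as they are.
  tmp="$(mktemp)"
  grep -v '^[[:space:]]*SetJavaHome[[:space:]]' "$conf" > "$tmp" || true
  echo "SetJavaHome $jdk" >> "$tmp"
  cat "$tmp" > "$conf" && rm -f "$tmp"
)
```

With the paths from this post, you would call it as `set_java_home ~/.sqldeveloper/19.2.0/product.conf /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.252.b09-0.fc30.x86_64`. The function body runs in a subshell, so its variables don't leak into your session.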

As always, I hope this helps those looking for a quick solution.

Oracle Docker Container

Install, configure, and use an Oracle Docker Container

Installing a Docker instance isn’t entirely straightforward, but it isn’t terribly difficult either. It can be overwhelming if you’re unfamiliar with virtualization and containerization. This essay shows you how to create, configure, and manage an Oracle Database 18c XE Docker instance on macOS. There are some slight differences when you install it on Windows.

Installation

You need to download the Oracle Database 18c XE file for Linux. You will find it on Oracle’s OTN website at https://www.oracle.com/downloads/. Click the Database link under the Developer Downloads banner. You want to download the Oracle Database Express Edition (XE), Release 18.4.0.0.0 (18c) file.

The file is a Linux Red Hat Package Manager (rpm) file. The file is approximately 2.5 GB in size, which means you may want to take a break while downloading it. Whether you take a break or not, this step may take more time than you like.

While the Oracle database downloads, you can work on two other tasks. You need to download and install the Docker and Homebrew software, neither of which is installed from Apple’s App Store. Many macOS systems by default disallow software from outside the comfy boundaries and inspections of the App Store. You may need to change your system preferences to install Docker and Homebrew.

You can download Docker for the macOS from the following website:

https://docs.docker.com/docker-for-mac/install/

The Homebrew (the missing package manager for macOS) website will tell you to install it from the macOS Command Line Interface (CLI). Please note that you must already have the Xcode Command Line Tools installed before you install Homebrew. The following Homebrew installation will update your Command Line Tools to macOS Mojave Version 10.14.

Open a Terminal session from Finder and run this command:

    bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)"

After you install Homebrew in the Terminal, type the following to go to your user’s home folder (or directory):

    cd

In your home directory (/Users/username [~]), clone Oracle’s docker-images repository from GitHub, which creates the docker-images directory, with these two commands (separated by the semicolon):

    cd ~/; git clone https://github.com/oracle/docker-images.git

Move the Oracle Database XE 18c rpm file from your Downloads folder to the target docker-images subfolder with the following command:

    mv ~/Downloads/oracle-database-xe-18c-1.0-1.x86_64-2.rpm \
    ~/docker-images/OracleDatabase/SingleInstance/dockerfiles/18.4.0/.

Change your present working directory with the following cd command:

    cd docker-images/OracleDatabase/SingleInstance/dockerfiles

Build the Docker image with the following command (from the directory where the buildDockerImage.sh shell script is found):

    ./buildDockerImage.sh -v 18.4.0 -x

The Docker image build takes quite some time. It may take more than 10 minutes on some macOS computers. After it completes, you should see that it was successfully built and tagged in the Terminal. You can confirm the image build with this command:

    docker images

It should return something like this:

    REPOSITORY        TAG         IMAGE ID       CREATED          SIZE
    oracle/database   18.4.0-xe   926f4349b277   12 minutes ago   5.89GB
    oraclelinux       7-slim      153f8d73287e   8 weeks ago      131MB

Before you start your Docker container, you need to open a Terminal session. You will be in your home directory, which should give you a prompt like:

    machine_name:~ username$

If you issue a pwd command, you should see the following:

    /Users/username

Create an oracle subdirectory:

    mkdir oracle

While you might wonder about the oracle directory at this point, it helps keep your Docker containers’ files organized on the macOS file system. For example, when you install Docker instances for MySQL and PostgreSQL, you can see the Docker file systems as:

    /Users/username/mysql
    /Users/username/oracle
    /Users/username/postgres

Now, you start the Docker container with the following command:

    sudo \
    docker run --name videodb -d -p 51521:1521 -p 55500:5500 -e ORACLE_PWD=cangetin \
    -e ORACLE_CHARACTERSET=AL32UTF8 -v ~/oracle:/home oracle/database:18.4.0-xe

After starting the Docker container, you check the container’s status with the following command:

    docker ps

Congratulations, you have successfully installed the Docker container.

Configure

The standard docker container prepares a base platform for you. It doesn’t create a schema or containerized user. It simply installs the Oracle Database Management System (DBMS) and Oracle Listener. You need to configure your Linux environment and your database.

You connect to the container as the root user, like:

    docker exec -it videodb bash

You should update any of the older packages with the following command:

    yum update

Next, you should install the openssh-server and vim packages. They’re not installed as part of the docker container’s default configuration. You’ll need them when you create non-root users and edit configuration files. This command installs them both:

    yum install openssh-server vim

There are a number of things for you to do at this point. They don’t all have to be done in the order that this essay takes. Like any other installation of Oracle on Linux, there is an oracle user who owns the installation. The oracle user is a non-login user. A non-login user doesn’t have a password and disallows a ssh connection. You need to first become the root user before you can use the su (substitute user) command to become the oracle user. Only superuser accounts hold the privileges to su without credentials because they’re trusted users.

The easiest thing to do while you’re the root user is test your ability to connect to the Oracle database’s system schema. You set the system schema’s password to cangetin when you ran the docker run command. At the command prompt, type the following to connect to the database:

    sqlplus system/cangetin@xe

You should see the following when you connect as the system user:

    SQL*Plus: Release 18.0.0.0.0 - Production on Sun Sep 13 02:48:44 2020
    Version 18.4.0.0.0
    Copyright (c) 1982, 2018, Oracle. All rights reserved.
    Last Successful login time: Sat Sep 12 2020 21:13:33 +00:00
    Connected to:
    Oracle Database 18c Express Edition Release 18.0.0.0.0 - Production
    Version 18.4.0.0.0
    SQL>

Please note that only the oracle user can connect without referencing the @xe service identifier, which is defined in the $ORACLE_HOME/network/admin/tnsnames.ora file. You can read more about the SQL*Net configuration in the documentation. The quit command exits the SQL*Plus Command Line Interface. At this point, as root, you can create the missing vi symbolic link to the vim utility you installed earlier:

    ln -s /usr/bin/vim /usr/bin/vi

With vi configured, let’s su to the oracle user and create a .bashrc file for it. You should note that a non-login user doesn’t have a .bashrc file by default. You become the oracle user with the following command:

    su oracle

You may notice that you’re not in the oracle user’s home directory. Let’s change that by moving to the correct home directory.

The home directory for any user is configured in the /etc/passwd file and available by using the echo command to see the $HOME environment variable’s value. This is true for Red Hat, Oracle Unbreakable Linux, CentOS, and Fedora distributions. They create users’ home directories as subdirectories in the /home directory.
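As a small illustration of where that value comes from, the sketch below reads the sixth colon-separated field of a passwd-style entry, which is the home directory. The `home_of` function name is hypothetical, and the optional second argument exists only so you can point it at a copy of the file.

```shell
# Sketch only: home_of is a hypothetical helper name.
# Usage: home_of <user> [passwd-style file, defaults to /etc/passwd]
home_of() {
  # Field 1 is the user name and field 6 is the home directory.
  awk -F: -v user="$1" '$1 == user { print $6 }' "${2:-/etc/passwd}"
}
```

Inside the container, `home_of oracle` prints the directory you should change to, and `cd "$(home_of oracle)"` moves you there.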

The .bashrc file is a hidden file. A hidden file’s name begins with a period, and a plain ls (list) command does not show it. You can find hidden files by using the -al flags with the ls command:

    ls -al

You can use the vi editor to create a .bashrc file like this:

    vi .bashrc

A minimal oracle .bashrc (bash resource) file should look like this:

    # Source global definitions
    if [ -f /etc/bashrc ]; then
      . /etc/bashrc
    fi

    # User specific environment
    if ! [[ "$PATH" =~ "$HOME/.local/bin:$HOME/bin:" ]]
    then
      PATH="$HOME/.local/bin:$HOME/bin:$PATH"
    fi
    export PATH

    # Set Prompt
    export PS1="[\u@localhost \W]\$ "

    # Change to home directory.
    cd $HOME

    # Uncomment the following line if you don't like systemctl's auto-paging feature:
    # export SYSTEMD_PAGER=

    # User specific aliases and functions

If you know about the Linux CLI prompt, the localhost string may seem odd. It’s there to suppress the random string otherwise provided by the docker container.

A number of other Oracle environment parameters have already been set. You can see them with this command:

    env | grep -i oracle

You can connect as the privileged sysdba role, once known as the internal user, to start and stop the database instance without stopping the docker container. That command syntax is:

    sqlplus / as sysdba

Only the oracle user has privileges to connect with the sysdba role by default. That’s because the oracle user is the owner of the Oracle database installation.

While connected as the oracle user, you should make three changes: one change to the oracle executable’s file permissions and two changes to the glogin.sql default configuration file.

The initial permissions on the $ORACLE_HOME/bin/oracle executable file in the docker container are shown below.

    -rwxr-x--x 1 oracle oinstall 437755981 Oct 18 2018 oracle

The setuid bit is disabled when the user’s permissions are rwx. The oracle executable should always run with the permissions and ownership of the oracle user. That only happens when the setuid bit is enabled. You set the setuid bit with the following syntax as the oracle user or privileged root superuser (from the $ORACLE_HOME/bin directory):

    chmod u+s oracle

Relisting the file in a long list view (ls -al) after the change, you should see the following:

    -rwsr-x--x 1 oracle oinstall 437755981 Oct 18 2018 oracle

The setuid bit is enabled when the user permissions are rws. Connections to the database by non-privileged Oracle users may raise ORA-01017 and ORA-12547 errors when the setuid bit is unset.
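If you would rather test for the bit than read the long listing, the shell’s `-u` file test checks it directly. The sketch below wraps that test; the `ensure_setuid` function name is hypothetical, and it assumes you run it as the file’s owner or as root.

```shell
# Sketch only: ensure_setuid is a hypothetical helper name.
# Usage: ensure_setuid <file>
ensure_setuid() {
  # [ -u file ] succeeds when the file exists and its set-user-ID bit is set.
  if [ -u "$1" ]; then
    echo "setuid already set: $1"
  else
    chmod u+s "$1" && echo "setuid enabled: $1"
  fi
}
```

From the $ORACLE_HOME/bin directory, `ensure_setuid oracle` either reports that the bit is already set or enables it.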

The glogin.sql file is read and processed with every connection to the database. Therefore, you should put little in there, and some would argue nothing in there. You’re going to enter the command that lets you interactively launch vi from a SQL> command prompt and set a SQL*Plus environment variable. The SQL*Plus environment variable lets you see debug messages raised by your PL/SQL programs.

To edit the glogin.sql file, change your terminal directory as follows:

    cd $ORACLE_HOME/sqlplus/admin

Add the following two lines at the bottom of the glogin.sql file:

    define _editor=vi
    SET SERVEROUTPUT ON SIZE UNLIMITED
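Because glogin.sql is processed on every connection, you probably don’t want to add the same line twice if you rerun your setup. One way to guard against that, sketched here with a hypothetical `append_once` helper, is to append a line only when an identical line isn’t already in the file. It assumes the file already ends with a newline.

```shell
# Sketch only: append_once is a hypothetical helper name.
# Usage: append_once <file> <line>
append_once() {
  # -x matches whole lines, -F treats the text literally, -q prints nothing.
  if ! grep -qxF -e "$2" "$1" 2>/dev/null; then
    printf '%s\n' "$2" >> "$1"
  fi
}
```

From the $ORACLE_HOME/sqlplus/admin directory, you would run `append_once glogin.sql 'define _editor=vi'` and `append_once glogin.sql 'SET SERVEROUTPUT ON SIZE UNLIMITED'`.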

That’s it for configuring the oracle user’s account. Type exit to return to the root user shell. Type exit again, this time to leave the root user’s account and return to your hosting macOS.

The next configuration step sets up a non-privileged student account in Linux. You set up the student user with the following Docker command (for reference, it can’t be done from within the docker container):

    sudo \
    docker exec -it videodb bash -c "useradd -u 501 -g dba -G users \
    -d /home/student -s /bin/bash -c 'Student' -n student"

You will be prompted for a password when this command runs. Try to keep the password simple. Using a password like cangetin is recommended when it’s a development instance. You can connect with the following docker command:

    docker exec -it --user student videodb bash

After logging in to the docker container as the student user, you need to configure the .bashrc file. You should use the following minimal .bashrc file in the /home/student directory, which you can create with the vi editor.

    # Source global definitions
    if [ -f /etc/bashrc ]; then
      . /etc/bashrc
    fi

    # User specific environment
    if ! [[ "$PATH" =~ "$HOME/.local/bin:$HOME/bin:" ]]
    then
      PATH="$HOME/.local/bin:$HOME/bin:$PATH"
    fi
    export PATH

    # Set Prompt
    export PS1="[\u@localhost \W]\$ "

    # Change to home directory.
    cd $HOME

    # Uncomment the following line if you don't like systemctl's auto-paging feature:
    # export SYSTEMD_PAGER=

    # User specific aliases and functions

    # Set Oracle environment variables.
    export ORACLE_SID=XE
    export ORACLE_BASE=/opt/oracle
    export ORACLE_HOME=/opt/oracle/product/18c/dbhomeXE

As the student Linux user, you need to connect to the system schema and provision a c##student container database user. You can connect to the system schema with the following syntax:

    sqlplus system/cangetin@xe

There are four steps required to provision a container database. These steps are different than the steps for previous non-container databases. In non-container databases, you could grant privileges directly to database users. Oracle now requires that you create database roles, which bundle privileges together. Then, you grant roles to users. The four provisioning steps are:

- Create a user, which must adhere to the following naming convention from Oracle Database 12c forward. The database user’s name must start with the letter c and two # (pound) symbols followed by a character and then a string of letters and numbers.

- Create a role, which must use the same naming convention as containerized users. Failure to use the correct naming convention raises an ORA-65096 error.

- Grant database privileges to a role.

- Grant a database role to a user.

You create a c##student container database user with the following syntax:

    CREATE USER c##student IDENTIFIED BY student
    DEFAULT TABLESPACE users QUOTA 100M ON users
    TEMPORARY TABLESPACE temp;

Next, you create a c##studentrole container role with the following syntax:

    CREATE ROLE c##studentrole CONTAINER = ALL;

Then, you grant the following privileges to your newly created c##studentrole role:

    GRANT CREATE CLUSTER, CREATE INDEXTYPE,
          CREATE PROCEDURE, CREATE SEQUENCE,
          CREATE SESSION, CREATE TABLE,
          CREATE TRIGGER, CREATE TYPE,
          CREATE VIEW TO c##studentrole;

Finally, you grant the c##studentrole role (bundle of privileges) to the c##student user:

    GRANT c##studentrole TO c##student;

After completing these tasks, you should use the quit command to exit the SQL*Plus CLI. Please note that unlike some database CLIs, you do not need to add a semicolon to quit the connection. Oracle divides its CLI commands into SQL*Plus and SQL commands; and the quit command is a SQL*Plus command. SQL*Plus commands do not require the terminating semicolon. SQL commands do require the semicolon or a line return and forward slash, which dispatches the SQL command to the SQL engine.

You should confirm that the provisioning worked by reconnecting to the Oracle database as the c##student user with this syntax:

    sqlplus c##student/student@xe

You have now provisioned and verified the connection to a database container user. Use the quit command to disconnect from the Oracle database, and the exit command to return to your host operating system.

At this point you have a couple options for using the docker container. You can install a proprietary Integrated Development Environment (IDE), like Oracle’s free SQL Developer. There are several others and some support more than one database engine. Unfortunately, all the others have annual licensing costs.

Post Install: Access Tools

Oracle’s SQL Developer is a Java-based solution that runs on numerous platforms. You can download SQL Developer from Oracle’s OTN web site:

https://www.oracle.com/downloads/

Click on the Developer Tools link to find the current version of the SQL Developer. Before you install SQL Developer on your macOS, you will need to download and install the Java 8 Software Development Kit (SDK) from this web site:

http://www.oracle.com/technetwork/java/javase/downloads/

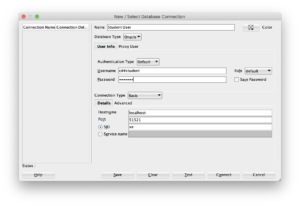

You configure a SQL Developer connection with the following values: use localhost as the host, c##student as the user, xe as the network service identifier, and 51521 as the port address. Click the plus button to add a connection where you enter these values, like shown below:

While the Java code in SQL Developer supports a port connection, Docker maps the port to the Oracle docker container. You don’t need to resolve the connection between SQL Developer and the Oracle Database listener through the network layer because this solution uses an Inter-Process Communication (IPC) model, based on socket-to-socket communication.

With virtualization you would need to install the Oracle Instant Client software on the hosting operating system. Then, you would configure your /etc/hosts file on both the hosting (macOS) and hosted (Oracle Linux) operating systems. Alternatively, you could add both IP addresses to a DNS server. The IP addresses let you map the connection between your physical macOS system and the Docker container running Oracle Linux. You can find further qualification of the connection mechanisms and repackaging in the Oracle Docker User Guide.

Containers can map a local directory to a directory in the operating system inside the container. Based on the earlier instructions, the ~/oracle directory maps to the /home directory in the docker container. You have the ability to edit and move files within this portion of the file system’s hierarchy, which means you have complete control of the portion of the file system owned by the student user.

The next few steps help you verify the dual access to this portion of the docker container. Open a Terminal session and check your present working directory (with the pwd utility).

    macName:~ username$ pwd

It should return:

    /Users/username

During the installation, you created two subdirectories in the /Users/username directory. They were the oracle and docker-images subdirectories. In your host macOS, you should list (with the ls utility) the contents of your oracle subdirectory:

    ls ~/oracle

It should return the following:

    oracle student

As mentioned, your macOS /Users/username/oracle directory holds the contents of your docker container’s /home directory. That means that your /Users/username/oracle/student directory mirrors the /home/student directory in your docker container.

Assume your GitHub code repository for Oracle development is in a directory on your macOS side. The local mapping to the ~/oracle/student directory lets you put symbolic links in the hosted student user’s subdirectories. These symbolic links would point to the editable code on the macOS file system, which can be concurrently linked to your GitHub code repository.

Misleading Oracle Errors

It’s always interesting when you get in a hurry, have multiple terminal sessions open and type the wrong thing in the wrong terminal session. This is especially true when working with the Oracle database.

In this case, I was implementing a Docker container of Oracle Database 18c on macOS. I typed the following to connect as the privileged system user:

    sqlplus system/cangetin

It generated the following error stack:

    [student@localhost ~]$ sqlplus system/cangetin
    SQL*Plus: Release 18.0.0.0.0 - Production on Tue Sep 15 15:02:30 2020
    Version 18.4.0.0.0
    Copyright (c) 1982, 2018, Oracle. All rights reserved.
    ERROR:
    ORA-27140: attach to post/wait facility failed
    ORA-27300: OS system dependent operation:invalid_egid failed with status: 1
    ORA-27301: OS failure message: Operation not permitted
    ORA-27302: failure occurred at: skgpwinit6
    ORA-27303: additional information: startup egid = 54321 (oinstall), current egid = 54322 (dba)

Really, that’s supposed to help an end-user or even an average DBA? Shouldn’t it really return an error that says the OS user isn’t the owner of the database? Naturally, there’s nothing wrong with connecting as the system privileged user when your OS account is not the owner, provided you use the network service identifier, like:

    sqlplus system/cangetin@xe

It works fine with the xe network service identifier. I hope this helps anybody confused by the error stack.

Correlated Updates

It’s always interesting when I answer questions. This question was how to perform a correlated UPDATE statement. My answer assumed you’re updating the rating_id foreign key column in the item table with the rating_id value from the rating table, where one or another column value in the rating table matches the item_rating column value in the item table. You would write a correlated UPDATE statement like:

    UPDATE item i
    SET    i.rating_id = r.rating_id
    WHERE  EXISTS
           (SELECT NULL
            FROM   rating r
            WHERE  r.rating = i.item_rating
            OR     r.description = i.item_rating);

This works in Oracle, MySQL, MariaDB, and MS SQL Server. I thought my work was done but I was wrong. The individual was trying to write a correlated UPDATE statement for PostgreSQL. The statement returned the following error:

    ERROR:  syntax error at or near "WHERE"
    LINE 3: WHERE EXISTS
            ^

I didn’t find an article to point the individual to after quick Google and DuckDuckGo searches. So, I thought I’d provide how you do it in PostgreSQL:

    UPDATE item i
    SET    rating_id = r.rating_id
    FROM   rating r
    WHERE  r.rating = i.item_rating
    OR     r.description = i.item_rating;

In short, PostgreSQL doesn’t do what most expect because the UPDATE statement supports a FROM clause. Let’s give them the prize for different dialect. While I hope that I’m not a syntax bigot because I use MySQL more, I think the default syntax should always be supported in SQL dialects. After all, MySQL has a far superior named-notation INSERT statement alternative to the standard with the assignment method but MySQL also supports the standard syntax.

While I’ve shown you how to do it in PostgreSQL, what do you think? Should PostgreSQL be as responsible as MySQL is in maintaining standard SQL approaches?

JavaScript Streams?

A quick followup to my post on how to write a server-side shell component in JavaScript. Naturally, it’s based on a question posed to me about the original article. It asked, “Why didn’t I use JavaScript’s streams instead of synchronized files?”

Moreover, the question asks why I wrote logic (lines 69 thru 105) that wrote to local files rather than a stream. While they didn’t provide an example, here’s a rewritten solution that uses a stream.

    69  else {
    70    // Returns RowDataPacket from query.
    71    for(let element in result) {
    72      data += result[element].item_title + ', ' + result[element].release_date + '\n'
    73    }
    74
    75    // Write file when data string is not empty.
    76    if (data.length > 0) {
    77      buffer = Buffer.alloc(data.length, data)
    78
    79      // Check for data from database query and write file.
    80      if (path.length > 0) {
    81        let writeStream = fs.createWriteStream(path)
    82        writeStream.write(buffer)
    83        writeStream.end()
    84      }
    85      // Set standard out (stdout) and the exit code.
    86      console.log(data)
    87      process.exitCode = 0
    88    }
    89    else {
    90      console.error('Query returned no rows.')
    91    }
    92  }

The Node.js stream replacement code has a significant problem when the target file can’t be read and written to by a Node.js application. This could be an ownership- or permission-driven problem coupled with the lazy file opening behavior of a stream in JavaScript. The lazy open is a hidden behavior of the createWriteStream() method, which actually calls the fs.open() method. It may raise the following type of error:

    events.js:174
          throw er; // Unhandled 'error' event
          ^
    Error: EACCES: permission denied, open 'output.csv'
    Emitted 'error' event at:
        at lazyFs.open (internal/fs/streams.js:277:12)
        at FSReqWrap.args [as oncomplete] (fs.js:140:20)

You can prevent this type of unhandled exception by putting this type of block right after you verify the target file name in the script. It ensures that your program raises a handled exception before your code tries to access a target file as a stream.

    69  // Verify read and write access to the file.
    70  try {
    71    fs.accessSync(path, fs.constants.R_OK | fs.constants.W_OK)
    72    access = true
    73  }
    74  catch {
    75    console.error("Error accessing [%s] file.", path)
    76  }

Naturally, you also need to define the access variable at the top of your script. The preceding block lets you set the access variable to true on line 72 when you have permissions to the file used by the stream. It also lets you replace the if-statement that tests path.length in the prior example code with the following statement, which effectively blocks any attempt to use a stream that will fail because of the lazy file opening process:

    if (access && (path.length > 0)) {

Adding the extra block does lengthen the program and change its line numbers, so I’ve identified the decision-making if-statement by what it tests rather than by its new line number.

As always, I hope this helps those looking for a related solution.

MySQL JSON Server

A student question: Does JavaScript make context switching for web-based applications obsolete? Wow! I asked what that meant. He said, it means JavaScript replaces all other server-side programming languages, like PHP, C#, or Python. I asked the student why he believed that. His answer was that’s what two interviewing managers told him.

I thought it would be interesting to put the idea to a test. Below is a Node.js script that acts as a utility that queries the MySQL database with substitution variables in query. It also returns a standard out (stdout) stream of the MySQL query’s results. It also supports three flag and value pairs as arguments, and optionally writes the results of the MySQL query to a log file while still returning result as the stdout value. All errors are written to the standard error (stderr) stream.

The Node.js solution is completely portable between Windows and Linux. You can deploy it to either platform without any edits for Windows case insensitive Command-Line Interface (CLI). Clearly, Node.js offers a replacement for direct interaction with the .NET components in PowerShell. This appears to mean basic Linux shell or PowerShell knowledge is all that’s required to write and deploy JavaScript programs as server-side programming solutions. It means anything that you would have done with the .NET you can do with JavaScript. Likewise, you can replace PHP, C#, Python, or Ruby server-side scripts with JavaScript programs.

      1  // Declare constants.
      2  const fs = require('fs')
      3  const util = require('util')
      4  const express = require('express')
      5  const mysql = require('mysql')
      6  const connection = mysql.createConnection({
      7    host: 'localhost',
      8    user: 'student',
      9    password: 'student',
     10    database: 'studentdb'
     11  })
     12
     13  // Declare local variables for case insensitive use.
     14  var data = ''
     15  var buffer = Buffer.alloc(0)
     16  var path = ''
     17
     18  // Declare default query variables dates.
     19  var startDate = new Date('1980-01-01')
     20  var endDate = new Date()
     21
     22  // Set default endDate value as tomorrow.
     23  endDate.setDate(new Date().getDate() + 1)
     24
     25  // Define a regular expression for valid file names.
     26  var regexp = /^([0-9a-zA-Z]+|[0-9a-zA-Z]+\.+[0-9a-zA-Z]{3})$/
     27
     28  // Assign dynamic variables from arguments.
     29  var argv = process.argv.slice(2)
     30
     31  // Check for paired values, evaluate and assign them to local variables.
     32  if ((argv.length % 2) == 0) {
     33    for (let i = 0; i < argv.length; i += 2) {
     34      // Assign a file name to write to the output path.
     35      if ((argv[i].toLowerCase() == '-f') && (regexp.test(argv[i+1]))) {
     36        // Assign present working for Windows or Linux.
     37        if (process.platform == 'win32')
     38          path = '.\\' + argv[i+1]
     39        else
     40          path = './' + argv[i+1]
     41      }
     42      // Assign a start date from the input string.
     43      else if (argv[i].toLowerCase() == '-b') {
     44        startDate = new Date(argv[i+1])
     45      }
     46      // Assign a end date from the input string.
     47      else if (argv[i].toLowerCase() == '-e') {
     48        endDate = new Date(argv[i+1])
     49      }
     50    }
     51  }
     52  else {
     53    console.error('Arguments must be in pairs: flag and value.')
     54  }
     55
     56  // Define and run MySQL query.
     57  connection.query("SELECT i.item_title " +
     58                   ", date_format(i.release_date,'%d-%M-%Y') AS release_date " +
     59                   "FROM item i JOIN common_lookup cl " +
     60                   "ON i.item_type = cl.common_lookup_id " +
     61                   "WHERE cl.common_lookup_type = 'BLU-RAY' " +
     62                   "AND i.release_date BETWEEN ? AND ? " +
     63                   "ORDER BY i.release_date"
     64                   ,[startDate, endDate], function (err, result) {
     65    if (err) {
     66      console.error('Query contains error ...')
     67      console.error('-> ' + err)
     68    }
     69    else {
     70      // Prints the index value in the RowDataPacket.
     71      for(let element in result) {
     72        data += result[element].item_title + ', ' + result[element].release_date + '\n'
     73      }
     74      // Write file when data string is not empty.
     75      if (data.length > 0 ) {
     76        buffer = Buffer.alloc(data.length,data)
     77
     78        // Check for a defined path before writing a file.
     79        if (path.length > 0) {
     80          // Open the file.
     81          fs.open(path, 'w', function(err, fd) {
     82            if (err) {
     83              console.error('Could not open [' + path + '] file [' + err + ']')
     84            }
     85            else {
     86              // Write the file.
     87              fs.write(fd, buffer, 0, buffer.length, null, function(err) {
     88                if (err)
     89                  console.error('Error writing [' + path + '] file [' + err + ']')
     90                fs.close(fd, function() {
     91                  if (fs.existsSync(path)) {
     92                    process.exit(0)
     93                  }
     94                })
     95              })
     96            }
     97          })
     98        }
     99        // Set standard out (stdout).
    100        console.log(data)
    101      }
    102      else {
    103        console.error('Query returned no rows.')
    104      }
    105    }
    106  })
    107
    108  // Close MySQL connection.
    109  connection.end()

You can call this code with the default values, like:

    node app.js

You can call this code with a user-defined file name and custom start and end date values, like:

    node app.js -f output.csv -b '2001-01-01' -e '2004-12-31'

The latter command returns the following by querying my MySQL studentdb video store:

    Star Wars II, 16-May-2002
    Harry Potter and the Chamber of Secrets, 28-May-2002
    Harry Potter and the Sorcerer's Stone, 28-May-2002
    Die Another Day, 03-June-2003
    Harry Potter and the Prisoner of Azkaban, 23-October-2004
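One detail worth knowing before you pick a file name: the script only accepts a -f value that matches its file-name regular expression, and it otherwise leaves the path empty without any message. The shell sketch below applies the same pattern with grep -E so you can check a name before you pass it; the `valid_name` function name is hypothetical.

```shell
# Sketch only: valid_name is a hypothetical helper name.
# It applies the same pattern the Node.js script uses for the -f value:
# letters and digits only, optionally followed by dots and a
# three-character extension.
valid_name() {
  printf '%s\n' "$1" |
    grep -Eq '^([0-9a-zA-Z]+|[0-9a-zA-Z]+\.+[0-9a-zA-Z]{3})$'
}
```

For example, `valid_name output.csv` succeeds, while `valid_name out_put.csv` (underscore) and `valid_name data.json` (four-character extension) both fail, so the script would skip writing a file for those names.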

As always, I hope this helps somebody trying to sort it out.

iTunes Support?

It’s funny to use the words Apple and Support together. It seems Congress found that out today too. I’m not really surprised, but there’s no way I could report Apple’s simple spelling error through their web page. You would think there would be a way, so they could correct their errors when they make them, but alas, no. I tried the web page, which gave me no potential solutions and no chat opportunity to report or fix it. I imagine they think their staff too professional to make a mistake like this.

It’s simply a tedious problem. They search for Endeavour correctly in the iTunes Store, but when you purchase Endeavour Season 7, it downloads as Endeavor (the American spelling). One has to wonder what’s underneath the hood of iTunes now. Is it a relational database, where there should be only one spelling of a title, or a document database, where there may be more than one spelling? Who knows. Here’s what it looks like in my iTunes screen:

If anybody knows how to report this to correct it without calling Apple Support, let me know. I already know how to fudge (finesse) it but would prefer not to do so. Thanks to anybody who can fix it! BTW, I’m on hold for Apple Support to report it. 😉

Express.js & MySQL

Sometimes, you just have to chuckle. A couple of folks felt that I didn’t give enough information in my post showing how to configure a small Node.js application that could access a MySQL database. Specifically, they wanted me to explain the following:

- How to configure your Express.js and MySQL development in a single Node.js application.

- How to convert the list of RowDataPacket objects into elements of data, which is really just simple JavaScript knowledge.

- How to bind variables into the query.

Like the other blog post, this one assumes you’ve performed a global install of Node.js on a Linux server. If you’re unfamiliar with how to perform a global Node.js installation, I cover how to do it in this earlier blog post.

Before you write the Node.js application, you need to set up a db developer directory. A global install of Node.js means you need to create a node_modules symbolic link to the /usr/local/lib/node_modules directory in the db directory (in Linux). You can use the following Linux command from within the db directory to create the appropriate symbolic link:

ln -s /usr/local/lib/node_modules `pwd`/node_modules

Or, assuming you have a /home/some_user/db directory, you can use the full path:

ln -s /usr/local/lib/node_modules /home/some_user/db/node_modules

After creating the node_modules symbolic link, you need to run the following two npm commands. Please note that the second command holds the secret sauce for generating a package.json file that supports Express.js and the MySQL driver:

npm init --y
sudo npm install --save express mysql

Then, you need to replace the package.json file with the contents of the package-lock.json file from your last npm command.

Here’s a small sample program that uses Express.js, converts the RowDataPackets collection, and binds local variables into the query:

 1 | // Require libraries.
 2 | const express = require('express')
 3 | const mysql = require('mysql')
 4 |
 5 | // Create a mysql connection.
 6 | const connection = mysql.createConnection({
 7 |   host: 'localhost',
 8 |   user: 'student',
 9 |   password: 'student',
10 |   database: 'studentdb'
11 | })
12 |
13 | // Declare two local variables.
14 | const start_date = '2001-01-01'
15 | const end_date = '2003-12-31'
16 |
17 | // Connect and display results in the console log.
18 | connection.connect((err) => {
19 |   if (err)
20 |     throw err
21 |   else {
22 |     console.log('Connected to MySQL Server!\n')
23 |     connection.query("SELECT i.item_title " +
24 |                      ", date_format(i.release_date,'%d-%M-%Y') AS release_date " +
25 |                      "FROM item i JOIN common_lookup cl " +
26 |                      "ON i.item_type = cl.common_lookup_id " +
27 |                      "WHERE cl.common_lookup_type = 'BLU-RAY' " +
28 |                      "AND i.release_date BETWEEN ? AND ? " +
29 |                      "ORDER BY i.release_date"
30 |                      ,[start_date, end_date], function (err, result) {
31 |       if (err)
32 |         throw err
33 |       else {
34 |         // Prints the index value in the RowDataPacket.
35 |         for(let element in result) {
36 |           console.log(result[element].item_title + ', ' + result[element].release_date)
37 |         }
38 |         console.log('')
39 |         console.log('Press Ctrl-C to terminate ...')
40 |       }
41 |     })
42 |   }
43 | })

Line 28 shows two question marks. They act as placeholders for binding variables. Then, on line 30 you see a collection of the start_date and end_date local variables, which is the second argument to the query() function.

Rather than define individual variables, you can pass them as a collection directly. For example, you replace lines 14 and 15 with this single line:

14 | const dates = ['2001-01-01','2003-12-31']

Then, you can pass dates as the second argument to the query() function, like this:

30 | ,dates, function (err, result) {
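Whichever form you choose, the array needs one value for each question mark, in the same order. Here is a small sketch of a sanity check you could run before calling query(). The countPlaceholders function is a hypothetical helper of mine, not part of the mysql driver, and it naively counts every question mark, including any that might sit inside a quoted string.

```javascript
// Hypothetical helper (not a mysql driver function): count the "?"
// placeholders in a SQL string. Naive: it does not skip question
// marks that appear inside quoted literals.
function countPlaceholders(sql) {
  return (sql.match(/\?/g) || []).length
}

// Shortened version of the query from the sample program.
const sql = "SELECT i.item_title FROM item i " +
            "WHERE i.release_date BETWEEN ? AND ?"

// The two equivalent ways of supplying the bind values.
const start_date = '2001-01-01'
const end_date = '2003-12-31'
const dates = ['2001-01-01', '2003-12-31']

console.log(countPlaceholders(sql) === [start_date, end_date].length) // prints: true
console.log(countPlaceholders(sql) === dates.length)                  // prints: true
```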

Lines 35 and 36 show you how to convert a collection of RowDataPacket objects into elements of data. The for loop assigns the index value to the element variable, which lets you address a single RowDataPacket object instance. The dot (“.”) notation lets you use the name in a name-value pair to reference its value.

It displays the following:

Connected to MySQL Server!

Star Wars II, 16-May-2002
Harry Potter and the Chamber of Secrets, 28-May-2002
Harry Potter and the Sorcerer's Stone, 28-May-2002
Die Another Day, 03-June-2003

Press Ctrl-C to terminate ...
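If the index-based loop in the sample program feels indirect, the same conversion can be written with a for...of loop, which hands you each row object rather than its index. This is only a sketch: the result array below is a plain JavaScript stand-in for what the driver returns, with two rows copied from the output above, and the function names are mine.

```javascript
// Stand-in for the driver's result: an array of row objects.
const result = [
  { item_title: 'Star Wars II', release_date: '16-May-2002' },
  { item_title: 'Die Another Day', release_date: '03-June-2003' }
]

// for...in yields each array index as a string ('0', '1', ...),
// so every row has to be looked up through the index.
function formatWithForIn(rows) {
  const lines = []
  for (const index in rows) {
    lines.push(rows[index].item_title + ', ' + rows[index].release_date)
  }
  return lines
}

// for...of yields each row object directly.
function formatWithForOf(rows) {
  const lines = []
  for (const row of rows) {
    lines.push(`${row.item_title}, ${row.release_date}`)
  }
  return lines
}

console.log(formatWithForIn(result).join('\n'))
console.log(formatWithForOf(result).join('\n'))
```

Both functions return the same lines, so the choice is mostly a matter of taste.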

Naturally, I’ll get around to writing something up that shows how to leverage MySQL data into a dynamic form with Handlebars at some point in the near future.

As always, I hope this helps those looking for a solution.

Node.js & MySQL

These are my notes for creating a small Node.js application that queries a MySQL database. The post will show you how to:

- Configure your Node.js development directory.

- Build a small application to test a MySQL connection.

- Build a small application that connects to the MySQL database and queries data.

This blog post assumes you’ve performed a global install of Node.js on a Linux server. If you’re unfamiliar with how to perform a global Node.js installation, I cover how to do it in this earlier blog post.

Before you write the Node.js application, you need to set up a db developer directory. Then, create a node_modules symbolic link to the /usr/local/lib/node_modules directory in the db directory. You can use the following command from the db directory:

ln -s /usr/local/lib/node_modules `pwd`/node_modules

After creating the node_modules symbolic link, you need to run the following two npm commands:

npm init --y
npm install --save mysql

The first command sets up a generic package.json file, and the second adds the mysql package and supporting packages to the package.json file. These two steps configure the Node.js side of these examples.

They both require that you create the student user with a native password, like so:

CREATE USER 'student'@'localhost' IDENTIFIED WITH 'mysql_native_password' BY 'student';
GRANT ALL PRIVILEGES ON studentdb.* TO 'student'@'localhost';
FLUSH PRIVILEGES;

The following example shows you how to check a connection to the MySQL database:

const mysql = require('mysql')
const connection = mysql.createConnection({
  host: 'localhost',
  user: 'student',
  password: 'student',
  database: 'studentdb'
})

connection.connect((err) => {
  if (err)
    throw err
  else {
    console.log('Connected to MySQL Server!\n')
    console.log('User configured with mysql_native_password.\n')
    console.log('Press Ctrl-C to terminate ...')
  }
})

You extend the previous example by adding a query component and returning the query result value to the console’s log (leveraging the w3school’s Node.js and MySQL tutorial example):

const mysql = require('mysql')
const connection = mysql.createConnection({
  host: 'localhost',
  user: 'student',
  password: 'student',
  database: 'studentdb'
})

connection.connect((err) => {
  if (err)
    throw err
  else {
    console.log('Connected to MySQL Server!\n')
    connection.query('SELECT DISTINCT item_title FROM item', function (err, result) {
      if (err)
        throw err
      else {
        console.log(result)
        console.log('Press Ctrl-C to terminate ...')
      }
    })
  }
})

It should display the following:

Connected to MySQL Server!

[ RowDataPacket { item_title: 'The Hunt for Red October' },

RowDataPacket { item_title: 'Star Wars I' },

RowDataPacket { item_title: 'Star Wars II' },

RowDataPacket { item_title: 'Star Wars III' },

RowDataPacket { item_title: 'The Chronicles of Narnia' },

RowDataPacket { item_title: 'RoboCop' },

RowDataPacket { item_title: 'Pirates of the Caribbean' },

RowDataPacket { item_title: 'MarioKart' },

RowDataPacket { item_title: 'Splinter Cell' },

RowDataPacket { item_title: 'Need for Speed' },

RowDataPacket { item_title: 'The DaVinci Code' },

RowDataPacket { item_title: 'Cars' },

RowDataPacket { item_title: 'Beau Geste' },

RowDataPacket { item_title: 'I Remember Mama' },

RowDataPacket { item_title: 'Tora! Tora! Tora!' },

RowDataPacket { item_title: 'A Man for All Seasons' },

RowDataPacket { item_title: 'Hook' },

RowDataPacket { item_title: 'Around the World in 80 Days' },

RowDataPacket { item_title: 'Harry Potter and the Sorcerer\'s Stone' },

RowDataPacket { item_title: 'Camelot' },

RowDataPacket { item_title: 'Casino Royale' },

RowDataPacket { item_title: 'Die Another Day' },

RowDataPacket { item_title: 'Golden Eye' },

RowDataPacket { item_title: 'Tomorrow Never Dies' },

RowDataPacket { item_title: 'The World Is Not Enough' },

RowDataPacket { item_title: 'Brave Heart' },

RowDataPacket { item_title: 'Christmas Carol' },

RowDataPacket { item_title: 'Scrooge' },

RowDataPacket { item_title: 'Clear and Present Danger' },

RowDataPacket { item_title: 'Harry Potter and the Chamber of Secrets' },

RowDataPacket { item_title: 'Harry Potter and the Prisoner of Azkaban' },

RowDataPacket { item_title: 'Harry Potter and the Goblet of Fire' },

RowDataPacket { item_title: 'Harry Potter and the Order of the Phoenix' },

RowDataPacket { item_title: 'Harry Potter and the Half Blood Prince' },

RowDataPacket { item_title: 'Harry Potter and the Deathly Hallows, Part 1' },

RowDataPacket { item_title: 'Harry Potter and the Deathly Hallows, Part 2' },

RowDataPacket { item_title: 'Tron' },

RowDataPacket { item_title: 'The Avengers' },

RowDataPacket { item_title: 'Thor: The Dark World' } ]

Press Ctrl-C to terminate ...

As always, I hope this helps those looking to learn.

Node.js Routing

I liked the example used to explore basic routing in Chapter 2 of the Web Development with Node & Express book. I embellished the Node.js router example and static pages just a bit. The routing example requires you create a public subdirectory where you deploy the code and put the about.html, home.html, and 404.html files in the public subdirectory. Then, you must put a logo.png file in a tmp subdirectory inside of the public directory. The book assumes you know how to build these pages, which seems reasonable but just in case, here are the files I used to test the book’s example.

The about.html page:

<html>
  <head>
    <title>About Page</title>
  </head>
  <body>
    <p>A small sample Node.js routing example.</p>
    <p><img width="25" src="/img/logo.png" />&nbsp;<sup><i>MEAN Stack Development</i></sup></p>
  </body>
</html>

The home.html page:

<html>
  <head>
    <title>Home Page</title>
  </head>
  <body>
    <p style="font-size:110%">Star Trek: The Original Series - Season 1</p>
    <p><img width="300" src="/img/StarTrekOriginal1.png" /></p>
    <p><img width="25" src="/img/logo.png" />&nbsp;<sup><i>MEAN Stack Development</i></sup></p>
  </body>
</html>

The 404.html page:

<html>
  <head>
    <title>404 Error Message</title>
  </head>
  <body>
    <p>A 404 Error Message Page.</p>
    <p><img width="25" src="/img/logo.png" />&nbsp;<sup><i>MEAN Stack Development</i></sup></p>
  </body>
</html>

The routing example converts the request path to lowercase before it matches a route, which is important when you type a URL to verify physical files. The case labels in the router must use the lowercase startrekoriginal1.png string value, even though the actual file name is the mixed case StarTrekOriginal1.png. Because the router normalizes the URL, a request that uses either case resolves to the same route, and that route maps the lowercase string to the physical file.
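To see what the normalization does to a URL, you can pull it out into a function and try a few inputs. This sketch uses the same regular expression as the routing code in this post; the normalizePath name is my own.

```javascript
// Strip the query string and an optional trailing slash, then
// lowercase what remains (same expression as the routing code).
function normalizePath(url) {
  return url.replace(/\/?(?:\?.*)?$/, '').toLowerCase()
}

console.log(normalizePath('/'))                          // prints an empty line
console.log(normalizePath('/About/?page=1'))             // prints: /about
console.log(normalizePath('/img/StarTrekOriginal1.png')) // prints: /img/startrekoriginal1.png
```

The first call shows why the home page route is an empty string rather than a single slash.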

While the /public directory is the home directory of the virtual map as a rule, you can’t use it in the URL (as explained in the next chapter, Chapter 3). Also, the router uses /img as a virtual directory, which maps to the physical /public/tmp subdirectory. When you want to validate a physical image file, you need to know these two rules. They explain why the following URL lets you verify a physical image file found in the /public/tmp directory:

localhost:3000/img/StarTrekOriginal1.png

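If you try any URL that the router doesn’t map, such as one that uses the physical /public/tmp path, you will trigger the 404 Error page. Here’s the Node.js routing code: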

/* Construct a web server. */
const http = require('http')
const fs = require('fs')
const port = process.env.PORT || 3000

/* Function uses the fs package to read files. */
function serveStaticFile(res, path, contentType, responseCode = 200) {
  fs.readFile(__dirname + path, (err, data) => {
    if (err) {
      res.writeHead(500, { 'Content-Type': 'text/plain' })
      return res.end('500 - Internal Error')
    }
    res.writeHead(responseCode, { 'Content-Type': contentType })
    res.end(data)
  })
}

/* Create the Node.js server. */
const server = http.createServer((req, res) => {
  // Normalize URL by removing query string, optional
  // trailing slash, and making it lowercase.
  const path = req.url.replace(/\/?(?:\?.*)?$/,'').toLowerCase()

  switch(path) {
    case '':
      serveStaticFile(res, '/public/home.html', 'text/html' )
      break
    case '/about':
      serveStaticFile(res, '/public/about.html', 'text/html' )
      break
    case '/img/startrekoriginal1.png':
      serveStaticFile(res, '/public/tmp/StarTrekOriginal1.png', 'image/png' )
      break
    case '/img/logo.png':
      serveStaticFile(res, '/public/tmp/logo.png', 'image/png' )
      break
    default:
      serveStaticFile(res, '/public/404.html', 'text/html', 404 )
      break
  }
})

server.listen(port, () => console.log(`server started on port ${port}; ` +
                                      'press Ctrl-C to terminate...'))
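The switch statement above is really a lookup from a virtual path to a physical file, so you could also express it as a table. The following is a sketch of that idea rather than the book’s approach: the routes object, the notFound entry, and the resolveRoute function are all names I made up, and it only resolves the route without serving the file.

```javascript
// Virtual URL paths (already normalized to lowercase) mapped to
// physical files and their content types.
const routes = {
  '': { file: '/public/home.html', type: 'text/html' },
  '/about': { file: '/public/about.html', type: 'text/html' },
  '/img/startrekoriginal1.png': { file: '/public/tmp/StarTrekOriginal1.png', type: 'image/png' },
  '/img/logo.png': { file: '/public/tmp/logo.png', type: 'image/png' }
}

// Fallback for any path that has no entry in the table.
const notFound = { status: 404, file: '/public/404.html', type: 'text/html' }

// Hypothetical helper: return the route for a normalized path.
function resolveRoute(path) {
  if (Object.prototype.hasOwnProperty.call(routes, path)) {
    return { status: 200, ...routes[path] }
  }
  return notFound
}

console.log(resolveRoute('/img/logo.png').file)          // prints: /public/tmp/logo.png
console.log(resolveRoute('/public/tmp/logo.png').status) // prints: 404
```

The second call shows the rule from earlier in the post: the physical /public/tmp path isn’t a valid URL, because only the virtual /img paths are in the table.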

Assuming you name the Node.js routing example helloworld3.js, you would start the router with the following command:

node helloworld3.js

It should start the router. Enter the following URL:

http://localhost:3000

You should see a page rendered like the following:

As always, I hope this helps those trying to use this technology.