
MySQL 5-Table Procedure

A student wanted a better example of writing a MySQL Persistent Stored Module (PSM) that maintains transactional scope across several tables. Here’s the one I wrote about ten years ago to build the MySQL Video Store model; it inserts into five tables as a single unit of work. It looks like I neglected to post it before, so here it is for reference.

    -- Conditionally drop procedure if it exists.
    DROP PROCEDURE IF EXISTS contact_insert;

    -- Reset the delimiter so that a semicolon can be used as a statement and block terminator.
    DELIMITER $$

    SELECT 'CREATE PROCEDURE contact_insert' AS "Statement";
    CREATE PROCEDURE contact_insert
    ( pv_member_type         CHAR(12)
    , pv_account_number      CHAR(19)
    , pv_credit_card_number  CHAR(19)
    , pv_credit_card_type    CHAR(12)
    , pv_first_name          CHAR(20)
    , pv_middle_name         CHAR(20)
    , pv_last_name           CHAR(20)
    , pv_contact_type        CHAR(12)
    , pv_address_type        CHAR(12)
    , pv_city                CHAR(30)
    , pv_state_province      CHAR(30)
    , pv_postal_code         CHAR(20)
    , pv_street_address      CHAR(30)
    , pv_telephone_type      CHAR(12)
    , pv_country_code        CHAR(3)
    , pv_area_code           CHAR(6)
    , pv_telephone_number    CHAR(10)) MODIFIES SQL DATA

    BEGIN

      /* Declare variables to manipulate auto generated sequence values. */
      DECLARE member_id            int unsigned;
      DECLARE contact_id           int unsigned;
      DECLARE address_id           int unsigned;
      DECLARE street_address_id    int unsigned;
      DECLARE telephone_id         int unsigned;

      /* Declare local constants for who-audit columns. */
      DECLARE lv_created_by        int unsigned DEFAULT 1001;
      DECLARE lv_creation_date     DATE         DEFAULT UTC_DATE();
      DECLARE lv_last_updated_by   int unsigned DEFAULT 1001;
      DECLARE lv_last_update_date  DATE         DEFAULT UTC_DATE();

      /* Declare a locally scoped variable. */
      DECLARE duplicate_key INT DEFAULT 0;

      /* Declare a duplicate key handler (MySQL error 1062). */
      DECLARE CONTINUE HANDLER FOR 1062 SET duplicate_key = 1;

      /* Start the transaction context. */
      START TRANSACTION;

      /* Create a SAVEPOINT as a recovery point. */
      SAVEPOINT all_or_none;

      /* Insert into the first table in sequence based on inheritance of primary keys by foreign keys. */
      INSERT INTO member
      ( member_type
      , account_number
      , credit_card_number
      , credit_card_type
      , created_by
      , creation_date
      , last_updated_by
      , last_update_date )
      VALUES
      ((SELECT   common_lookup_id
        FROM     common_lookup
        WHERE    common_lookup_context = 'MEMBER'
        AND      common_lookup_type = pv_member_type)
      , pv_account_number
      , pv_credit_card_number
      ,(SELECT   common_lookup_id
        FROM     common_lookup
        WHERE    common_lookup_context = 'MEMBER'
        AND      common_lookup_type = pv_credit_card_type)
      , lv_created_by
      , lv_creation_date
      , lv_last_updated_by
      , lv_last_update_date );

      /* Preserve the sequence by a table related variable name. */
      SET member_id = last_insert_id();

      /* Insert into the second table, which inherits the member primary key as a foreign key. */
      INSERT INTO contact
      VALUES
      ( null
      , member_id
      ,(SELECT   common_lookup_id
        FROM     common_lookup
        WHERE    common_lookup_context = 'CONTACT'
        AND      common_lookup_type = pv_contact_type)
      , pv_first_name
      , pv_middle_name
      , pv_last_name
      , lv_created_by
      , lv_creation_date
      , lv_last_updated_by
      , lv_last_update_date );

      /* Preserve the sequence by a table related variable name. */
      SET contact_id = last_insert_id();

      /* Insert into the third table; last_insert_id() still holds the contact primary key. */
      INSERT INTO address
      VALUES
      ( null
      , last_insert_id()
      ,(SELECT   common_lookup_id
        FROM     common_lookup
        WHERE    common_lookup_context = 'MULTIPLE'
        AND      common_lookup_type = pv_address_type)
      , pv_city
      , pv_state_province
      , pv_postal_code
      , lv_created_by
      , lv_creation_date
      , lv_last_updated_by
      , lv_last_update_date );

      /* Preserve the sequence by a table related variable name. */
      SET address_id = last_insert_id();

      /* Insert into the fourth table; last_insert_id() still holds the address primary key. */
      INSERT INTO street_address
      VALUES
      ( null
      , last_insert_id()
      , pv_street_address
      , lv_created_by
      , lv_creation_date
      , lv_last_updated_by
      , lv_last_update_date );

      /* Insert into the fifth table, which inherits the contact and address primary keys. */
      INSERT INTO telephone
      VALUES
      ( null
      , contact_id
      , address_id
      ,(SELECT   common_lookup_id
        FROM     common_lookup
        WHERE    common_lookup_context = 'MULTIPLE'
        AND      common_lookup_type = pv_telephone_type)
      , pv_country_code
      , pv_area_code
      , pv_telephone_number
      , lv_created_by
      , lv_creation_date
      , lv_last_updated_by
      , lv_last_update_date);

      /* This acts as an exception handling block. */
      IF duplicate_key = 1 THEN

        /* This undoes all DML statements to this point in the procedure. */
        ROLLBACK TO SAVEPOINT all_or_none;

      END IF;

      /* This commits the write when successful and is harmless otherwise. */
      COMMIT;

    END;
    $$

    -- Reset the standard delimiter to let the semicolon work as an execution command.
    DELIMITER ;

You can then call the procedure, like:

    SELECT 'CALL contact_insert() PROCEDURE 5 times' AS "Statement";
    CALL contact_insert('INDIVIDUAL','R11-514-34','1111-1111-1111-1111','VISA_CARD','Goeffrey','Ward','Clinton','CUSTOMER','HOME','Provo','Utah','84606','118 South 9th East','HOME','011','801','423-1234');
    CALL contact_insert('INDIVIDUAL','R11-514-35','1111-2222-1111-1111','VISA_CARD','Wendy',null,'Moss','CUSTOMER','HOME','Provo','Utah','84606','1218 South 10th East','HOME','011','801','423-1234');
    CALL contact_insert('INDIVIDUAL','R11-514-36','1111-1111-2222-1111','VISA_CARD','Simon','Jonah','Gretelz','CUSTOMER','HOME','Provo','Utah','84606','2118 South 7th East','HOME','011','801','423-1234');
    CALL contact_insert('INDIVIDUAL','R11-514-37','1111-1111-1111-2222','MASTER_CARD','Elizabeth','Jane','Royal','CUSTOMER','HOME','Provo','Utah','84606','2228 South 14th East','HOME','011','801','423-1234');
    CALL contact_insert('INDIVIDUAL','R11-514-38','1111-1111-3333-1111','VISA_CARD','Brian','Nathan','Smith','CUSTOMER','HOME','Spanish Fork','Utah','84606','333 North 2nd East','HOME','011','801','423-1234');
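The all-or-none pattern in the procedure (start a transaction, set a savepoint, run the dependent inserts, and roll back to the savepoint on a duplicate key) can also be sketched outside MySQL. The following is only a minimal illustration, assuming Python's built-in sqlite3 module as a stand-in engine and two simplified, hypothetical tables rather than the five Video Store tables; the make_demo_db function and the Python contact_insert function are names invented for this sketch.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with two simplified, hypothetical tables."""
    # isolation_level=None leaves transaction control to the explicit statements below.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE member (member_id INTEGER PRIMARY KEY, account_number TEXT UNIQUE)"
    )
    # last_name is UNIQUE only to force a duplicate-key error in this demo.
    conn.execute(
        "CREATE TABLE contact (contact_id INTEGER PRIMARY KEY,"
        " member_id INTEGER NOT NULL, last_name TEXT UNIQUE)"
    )
    return conn


def contact_insert(conn, account_number, last_name):
    """Insert a member and its contact; keep both rows or neither."""
    cur = conn.cursor()
    cur.execute("BEGIN")
    cur.execute("SAVEPOINT all_or_none")
    try:
        cur.execute("INSERT INTO member (account_number) VALUES (?)", (account_number,))
        member_id = cur.lastrowid  # plays the role of last_insert_id()
        cur.execute(
            "INSERT INTO contact (member_id, last_name) VALUES (?, ?)",
            (member_id, last_name),
        )
        succeeded = True
    except sqlite3.IntegrityError:
        # A duplicate key undoes every insert made since the savepoint.
        cur.execute("ROLLBACK TO SAVEPOINT all_or_none")
        succeeded = False
    # Commit writes on success; after a rollback there is nothing left to write.
    cur.execute("COMMIT")
    return succeeded
```

Calling the sketch twice with the same last name leaves a single member row, because the second member insert is undone together with the failed contact insert.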

I hope this complete code example helps those looking to learn how to write MySQL PSMs.

PostgreSQL+PowerShell

This post explains and demonstrates how to install, configure, and use the psqlODBC (32-bit) and psqlODBC (64-bit) libraries to connect your Microsoft PowerShell programs to a locally installed PostgreSQL 14 database. It assumes you have already installed and configured a PostgreSQL 14 database. One earlier post is a step-by-step guide to installing PostgreSQL 14 on Windows 10, and another shows you how to configure the PostgreSQL 14 database.

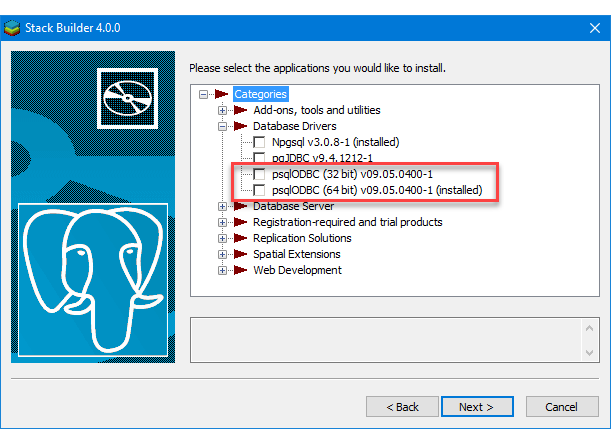

If you didn’t follow the instructions to get the psqlODBC libraries in the installation blog post, you will need to get those libraries, as qualified by Microsoft with the PostgreSQL Stack Builder.

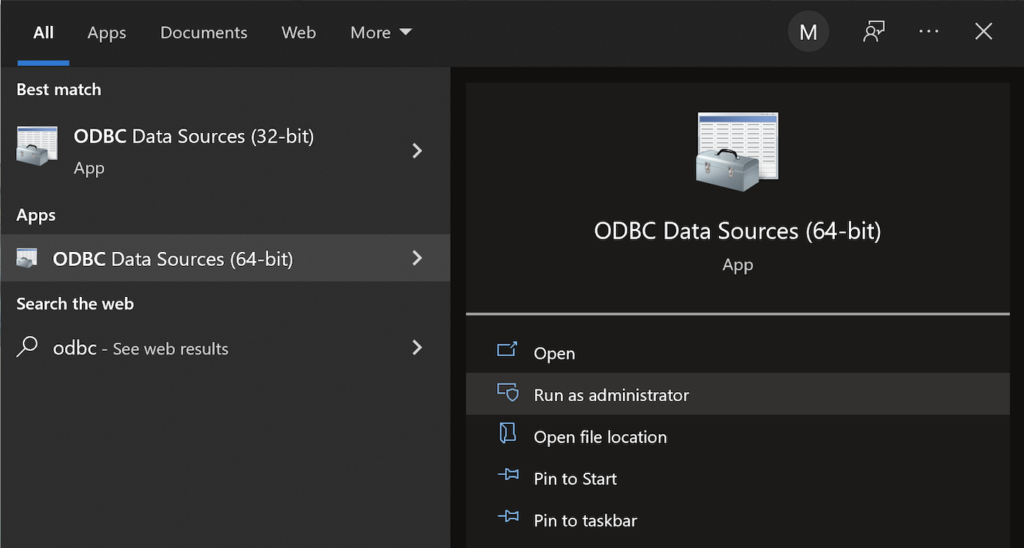

You can launch PostgreSQL Stack Builder after the install by clicking on Start -> PostgreSQL -> Stack Builder. Allow Stack Builder to make changes to your system and install the psqlODBC libraries. After you’ve installed the psqlODBC libraries, use the Windows search field to find the ODBC Data Sources dialog and run it as administrator.

There are six steps to set up, test, and save your ODBC Data Source Name (DSN).

PostgreSQL ODBC Setup Steps

- The Microsoft DSN (Data Source Name) dialog automatically selects the User DSN tab. Click on the System DSN tab.

- The view under the System DSN tab is exactly like the User DSN tab. Click the Add button to start the workflow.

- The Create New Data Source dialog requires that you select the PostgreSQL ODBC Driver(UNICODE) option from the list and click the Finish button to proceed.

- In the PostgreSQL Unicode ODBC Driver Setup dialog, complete the prompts as shown below, consistent with the PostgreSQL 14 Configuration blog post. If you opt for localhost as the server value because you have a DHCP IP address, make sure you’ve configured your hosts file in the C:\Windows\System32\drivers\etc directory. You should enter the following two lines in the hosts file:

    127.0.0.1  localhost
    ::1        localhost

These are the string values you should enter in the PostgreSQL Unicode ODBC Driver Setup dialog:

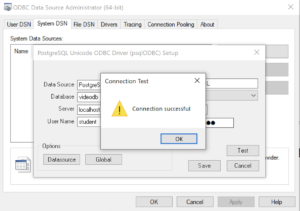

    Data Source:  PostgreSQL35W
    Database:     videodb
    Server:       localhost
    User Name:    student
    Description:  PostgreSQL
    SSL Mode:     disable
    Port:         5432
    Password:     student

After you complete the entry, click the Test button.

- The Connection Test dialog should return a “Connection successful” message. Click the OK button to continue.

- The ODBC Data Source Administrator dialog should show the PostgreSQL35W System Data Source. Click the OK button to continue.

After you have created the System PostgreSQL ODBC Setup, it’s time to build a PowerShell Cmdlet (or, Commandlet). Some documentation and blog notes incorrectly suggest you need to write a connection string with a UID and password, like:

    $ConnectionString = 'DSN=PostgreSQL35W;Uid=student;Pwd=student'

The UID and password are unnecessary in the connection string. As a rule, the UID and password are only necessary in the ODBC DSN, which lets you write the connection string like:

    $ConnectionString = 'DSN=PostgreSQL35W'

You can create a readcursor.ps1 Cmdlet like the following:

    # Define an ODBC DSN connection string.
    $ConnectionString = 'DSN=PostgreSQL35W'

    # Define an ODBC connection object.
    $Connection = New-Object System.Data.Odbc.OdbcConnection;
    $Connection.ConnectionString = $ConnectionString

    # Attempt connection.
    try {
      $Connection.Open()

      # Create a SQL command.
      $Command = $Connection.CreateCommand();
      $Command.CommandText = "SELECT current_database();";

      # Attempt to read SQL command.
      try {
        $Reader = $Command.ExecuteReader();

        # Read while records are found.
        while ($Reader.Read()) {
          Write-Host "Current Database [" $Reader[0] "]"}

      } catch {
        Write-Error "Message: $($_.Exception.Message)"
        Write-Error "StackTrace: $($_.Exception.StackTrace)"
        Write-Error "LoaderExceptions: $($_.Exception.LoaderExceptions)"
      } finally {
        # Close the reader.
        $Reader.Close() }

    } catch {
      Write-Error "Message: $($_.Exception.Message)"
      Write-Error "StackTrace: $($_.Exception.StackTrace)"
      Write-Error "LoaderExceptions: $($_.Exception.LoaderExceptions)"
    } finally {
      $Connection.Close() }

Line 14 of the script assigns a SQL query that returns a single row with one column as the CommandText of the Command object. Line 22 reads position zero of each returned row, which is the only column in the result set.

You call the readcursor.ps1 Cmdlet with the following syntax:

    powershell .\readcursor.ps1

It returns:

    Current Database [ videodb ]

A more realistic query returns multiple rows with a set of two or more columns. The following readcontact.ps1 program queries a table with multiple rows of two columns, but the program logic can manage any number of columns.

    # Define an ODBC DSN connection string.
    $ConnectionString = 'DSN=PostgreSQL35W'

    # Define an ODBC connection object.
    $Connection = New-Object System.Data.Odbc.OdbcConnection;
    $Connection.ConnectionString = $ConnectionString

    # Attempt connection.
    try {
      $Connection.Open()

      # Create a SQL command.
      $Command = $Connection.CreateCommand();
      $Command.CommandText = "SELECT last_name, first_name FROM contact ORDER BY 1, 2";

      # Attempt to read SQL command.
      try {
        $row = $Command.ExecuteReader();

        # Read while records are found.
        while ($row.Read()) {
          # Initialize output for each row.
          $output = ""

          # Navigate across all columns (only two in this example).
          for ($column = 0; $column -lt $row.FieldCount; $column += 1) {
            # Comma-delimit every column after the first (last name, then first name).
            if ($output.length -eq 0) {
              $output += $row[$column] }
            else {
              $output += ", " + $row[$column] }
          }
          # Write the output from the database.
          Write-Host $output
        }

      } catch {
        Write-Error "Message: $($_.Exception.Message)"
        Write-Error "StackTrace: $($_.Exception.StackTrace)"
        Write-Error "LoaderExceptions: $($_.Exception.LoaderExceptions)"
      } finally {
        # Close the reader.
        $row.Close() }

    } catch {
      Write-Error "Message: $($_.Exception.Message)"
      Write-Error "StackTrace: $($_.Exception.StackTrace)"
      Write-Error "LoaderExceptions: $($_.Exception.LoaderExceptions)"
    } finally {
      $Connection.Close() }

You call the readcontact.ps1 Cmdlet with the following syntax:

    powershell .\readcontact.ps1

It returns an ordered set of comma-separated values, like:

    Clinton, Goeffrey
    Gretelz, Simon
    Moss, Wendy
    Royal, Elizabeth
    Smith, Brian
    Sweeney, Ian
    Sweeney, Matthew
    Sweeney, Meaghan
    Vizquel, Doreen
    Vizquel, Oscar
    Winn, Brian
    Winn, Randi

As always, I hope this helps those looking for a complete, concrete example of how to make Microsoft PowerShell connect to and query a PostgreSQL database.

PostgreSQL Arrays

If you’re wondering about this post, it shows basic arrays of integers and strings before showing you how to create nested tables of data in PostgreSQL. By the way, they’re not called nested tables in PostgreSQL, as they are in Oracle, but they perform like their Oracle cousins.

Let’s create a table with an auto-incrementing column and two arrays, one array of integers and another of strings:

    -- Conditionally drop the demo table.
    DROP TABLE IF EXISTS demo;

    -- Create the test table.
    CREATE TABLE demo
    ( demo_id      serial
    , demo_number  integer[5]
    , demo_string  varchar(5)[7]);

You can insert test values like this:

    INSERT INTO demo
    (demo_number, demo_string)
    VALUES
    ( array[1,2,3,4,5]
    , array['One','Two','Three','Four','Five','Six','Seven']);

Then, you can query them with the unnest function, like:

    SELECT unnest(demo_number) AS numbers
    ,      unnest(demo_string) AS strings
    FROM   demo;

It returns:

     numbers | strings
    ---------+---------
           1 | One
           2 | Two
           3 | Three
           4 | Four
           5 | Five
             | Six
             | Seven
    (7 rows)

You may note that the two arrays are asymmetrical. It only becomes an issue when you navigate the result in a PL/pgSQL cursor or imperative programming language, like Python.
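To see why the asymmetry matters in an imperative language, here is a minimal Python sketch that mirrors the output shown above. It assumes the two arrays have already been fetched as ordinary Python lists; the unnest_in_parallel function is a hypothetical helper for this illustration, not a PostgreSQL or driver API.

```python
from itertools import zip_longest


def unnest_in_parallel(numbers, strings):
    """Pair two lists position by position, padding the shorter one with None."""
    return list(zip_longest(numbers, strings, fillvalue=None))


rows = unnest_in_parallel(
    [1, 2, 3, 4, 5],
    ["One", "Two", "Three", "Four", "Five", "Six", "Seven"],
)

for number, string in rows:
    # A None here corresponds to an empty numbers cell in the query output.
    label = "" if number is None else str(number)
    print(f"{label:>7} | {string}")
```

Any loop over such rows has to guard against the padded None values before it uses the shorter column.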

Now, let’s do something more interesting like work with a composite user-defined type, like the player structure. You would create the composite user-defined type with this syntax:

    -- Conditionally drop the player type.
    DROP TYPE IF EXISTS player;

    -- Create the player type.
    CREATE TYPE player AS
    ( player_no        integer
    , player_name      varchar(24)
    , player_position  varchar(14)
    , ab               integer
    , r                integer
    , h                integer
    , bb               integer
    , rbi              integer );

You can create a world_series table that includes a players column that uses an array of the player type, like:

    -- Conditionally drop the world_series table.
    DROP TABLE IF EXISTS world_series;

    -- Create the world_series table.
    CREATE TABLE world_series
    ( world_series_id  serial
    , team             varchar(24)
    , players          player[30]
    , game_no          integer
    , year             integer );

If you’re familiar with the Oracle Database, you’d have to specify a nested table in the syntax. Fortunately, PostgreSQL doesn’t require that.

Insert one row, with two players in its players array, using the following statement:

    INSERT INTO world_series
    ( team
    , players
    , game_no
    , year )
    VALUES
    ('San Francisco Giants'
    , array[(24,'Willie Mayes','Center Fielder',5,0,1,0,0)::player
           ,(5,'Tom Haller','Catcher',4,1,2,0,2)::player]
    , 4
    , 1962 );

You can append to the array with the following syntax. A former student and I have a disagreement on whether this is shown in the PostgreSQL 8.15.4 Modifying Array documentation. I believe it can be inferred from the document and he doesn’t believe so. Anyway, here’s how you add an element to an existing array in a table with the UPDATE statement:

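    -- Call array_append directly on the column; a scalar subquery against the whole
    -- table would fail once world_series holds more than one row.
    UPDATE world_series
    SET    players = array_append(players,(7,'Henry Kuenn','Right Fielder',3,0,0,1,0)::player)
    WHERE  team = 'San Francisco Giants'
    AND    year = 1962
    AND    game_no = 4;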

Like Oracle’s nested tables, PostgreSQL’s arrays of composite user-defined types require writing a PL/pgSQL function to edit and replace individual entries. I’ll try to add one of those shortly in another blog entry.

You can query the unnested rows and get a return set like a Python tuple with the following query:

    SELECT unnest(players) AS player_list
    FROM   world_series
    WHERE  team = 'San Francisco Giants'
    AND    year = 1962
    AND    game_no = 4;

It returns the three rows from the players array:

                       player_list
    ------------------------------------------------
     (24,"Willie Mayes","Center Fielder",5,0,1,0,0)
     (5,"Tom Haller",Catcher,4,1,2,0,2)
     (7,"Henry Kuenn","Right Fielder",3,0,0,1,0)
    (3 rows)

It returns the data set in entry-order. If we step outside of the standard 8.15 Arrays PostgreSQL Documentation, you can do much more with arrays (or nested tables). The balance of this example demonstrates some new syntax that helps you achieve constructive outcomes in PostgreSQL.

You can use a Common Table Expression (CTE) to get the columnar display of the player composite user-defined type. This type of solution goes beyond the standard documentation, like:

    WITH list AS
     (SELECT unnest(players) AS row_result
      FROM   world_series
      WHERE  team = 'San Francisco Giants'
      AND    year = 1962
      AND    game_no = 4)
    SELECT (row_result).player_name
    ,      (row_result).player_no
    ,      (row_result).player_position
    FROM   list;

If you’re unfamiliar with accessing composite user-defined types, I wrote a post on that 7 years ago. You can find the older blog entry PostgreSQL Composites on my blog.

It returns only the three requested columns of the player composite user-defined type:

     player_name  | player_no | player_position
    --------------+-----------+-----------------
     Willie Mayes |        24 | Center Fielder
     Tom Haller   |         5 | Catcher
     Henry Kuenn  |         7 | Right Fielder
    (3 rows)

You should note that the data is presented in an entry-ordered manner when unnested alone in the SELECT-list. That behavior changes when the SELECT-list includes non-array data.

The easiest way to display data from the non-array and array columns is to list them inside the SELECT-list of the CTE, like:

    WITH list AS
     (SELECT game_no AS game
      ,      year
      ,      unnest(players) AS row_result
      FROM   world_series
      WHERE  team = 'San Francisco Giants'
      AND    year = 1962
      AND    game_no = 4)
    SELECT game
    ,      year
    ,      (row_result).player_name
    ,      (row_result).player_no
    ,      (row_result).player_position
    FROM   list;

It returns an ordered set of unnested rows when you include non-array columns, like:

     game | year | player_name  | player_no | player_position
    ------+------+--------------+-----------+-----------------
        4 | 1962 | Henry Kuenn  |         7 | Right Fielder
        4 | 1962 | Tom Haller   |         5 | Catcher
        4 | 1962 | Willie Mayes |        24 | Center Fielder
    (3 rows)

While you can join the world_series table to the unnested array rows (returned as a derived table), it’s a bad idea. The mechanics to do it require you to return the primary key column in the same SELECT-list of the CTE. Then, you join the CTE list to the world_series table by using the world_series_id primary key.

However, there is no advantage to an inner join approach and it imposes unnecessary processing on the database server. The odd rationale that I heard when I noticed somebody was using a CTE to base-table join was: “That’s necessary so they could use column aliases for the non-array columns.” That’s not true because you can use the aliases inside the CTE, as shown above when game is an alias to the game_no column.

As always, I hope this helps those looking to solve a problem in PostgreSQL.

Setting SQL_MODE

In MySQL, the @@sql_mode parameter should generally include ONLY_FULL_GROUP_BY. If it doesn’t and you don’t have the ability to change the database parameters, you can use a MySQL PSM (Persistent Stored Module) to set it for your session.

Create the set_full_group_by procedure:

    -- Drop procedure conditionally on whether it exists already.
    DROP PROCEDURE IF EXISTS set_full_group_by;

    -- Reset delimiter to allow semicolons to terminate statements.
    DELIMITER $$

    -- Create a procedure to verify and set connection parameter.
    CREATE PROCEDURE set_full_group_by()
      LANGUAGE SQL
      NOT DETERMINISTIC
      SQL SECURITY DEFINER
      COMMENT 'Set connection parameter when not set.'
    BEGIN

      /* Check whether full group by is set in the connection and
         if unset, set it in the scope of the connection. */
      IF NOT EXISTS
        (SELECT NULL
         WHERE  REGEXP_LIKE(@@SQL_MODE,'ONLY_FULL_GROUP_BY'))
      THEN
        SET SQL_MODE=(SELECT CONCAT(@@sql_mode,',ONLY_FULL_GROUP_BY'));
      END IF;
    END;
    $$

    -- Reset the default delimiter.
    DELIMITER ;

Run the following SQL command before you attempt the exercises in the same session scope:

    CALL set_full_group_by();

As always, I hope this helps those looking for a solution. Naturally, you can also run the SET command on line 21 of the procedure script directly in your session.
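The check-then-append logic can also be sketched on the client side. The following Python function is a hypothetical illustration, not part of MySQL or any connector; it assumes you have already read the current @@sql_mode value into a string, and it compares whole mode names rather than using a regular expression.

```python
def with_full_group_by(sql_mode: str) -> str:
    """Return sql_mode with ONLY_FULL_GROUP_BY appended when it is missing."""
    # Split the comma-separated mode list and ignore empty entries.
    modes = [mode for mode in sql_mode.split(",") if mode]
    if "ONLY_FULL_GROUP_BY" not in modes:
        modes.append("ONLY_FULL_GROUP_BY")
    return ",".join(modes)


print(with_full_group_by("STRICT_TRANS_TABLES,NO_ZERO_DATE"))
```

The returned string is what you would pass to a SET sql_mode statement in the same session.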

Drop Overloaded Routine

In October 2019, I wrote a post with anonymous block programs to drop tables, sequences, routines, and triggers. Two weeks later, I wrote another post to drop all overloaded routines. However, I recognized the other day that I should have written a function that let you target which function or procedure you want to drop.

The older code only let you drop all of your functions or procedures. That was overkill when you’re working on new functions or procedures.

This post provides a utility for those writing functions and procedures in a public schema of any database in a PostgreSQL installation. It is designed to drop functions or procedures from the public schema.

The code follows below:

    CREATE OR REPLACE
      FUNCTION drop_routine( IN pv_routine_name  VARCHAR(64)
                           , IN pv_routine_type  VARCHAR(64)) RETURNS INTEGER AS
    $$
    DECLARE
      /* Declare the current catalog. */
      lv_local_catalog  VARCHAR(64) := current_database();

      /* Declare return type variable. */
      lv_retval  INTEGER := 1;

      /* Manage data dictionary case mechanics:
         ======================================
           routine_name is always in lowercase.
           routine_type is always in uppercase. */
      lv_routine_name  VARCHAR(64) := LOWER(pv_routine_name);
      lv_routine_type  VARCHAR(64) := UPPER(pv_routine_type);

      /* Declare an indefinite length string for SQL statement. */
      sql  VARCHAR;

      /* Declare variables to manage cursor return values. */
      row  RECORD;
      arg  VARCHAR;

      /* Declare parameter list. */
      list  VARCHAR;

      /* Declare a routine cursor. */
      routine_cursor CURSOR( cv_routine_name  VARCHAR
                           , cv_routine_type  VARCHAR ) FOR
        SELECT r.routine_name
        ,      r.specific_name
        ,      r.routine_type
        FROM   information_schema.routines r
        WHERE  r.specific_catalog = current_database()
        AND    r.routine_schema = 'public'
        AND    r.routine_type = cv_routine_type
        AND    r.routine_name = cv_routine_name;

      /* Declare a parameter cursor; order by position so the
         signature matches the routine's declaration order. */
      parameter_cursor CURSOR( cv_specific_name  VARCHAR ) FOR
        SELECT args.data_type
        FROM   information_schema.parameters args
        WHERE  args.specific_catalog = current_database()
        AND    args.specific_schema = 'public'
        AND    args.specific_name = cv_specific_name
        ORDER BY args.ordinal_position;

    BEGIN

      /* Open the cursor. */
      OPEN routine_cursor(lv_routine_name, lv_routine_type);
      <<row_loop>>
      LOOP
        /* Fetch the next routine. */
        FETCH routine_cursor INTO row;

        /* Exit when no more records are found. */
        EXIT row_loop WHEN NOT FOUND;

        /* Initialize parameter list. */
        list := '(';

        /* Open the parameter cursor. */
        OPEN parameter_cursor(row.specific_name::varchar);
        <<parameter_loop>>
        LOOP
          FETCH parameter_cursor INTO arg;

          /* Exit the parameter loop. */
          EXIT parameter_loop WHEN NOT FOUND;

          /* Add parameter and delimit more than one parameter with a comma. */
          IF LENGTH(list) > 1 THEN
            list := CONCAT(list,',',arg);
          ELSE
            list := CONCAT(list,arg);
          END IF;
        END LOOP;

        /* Close the parameter list. */
        list := CONCAT(list,')');

        /* Close the parameter cursor. */
        CLOSE parameter_cursor;

        /* Concatenate together a DDL to drop the routine with prejudice. */
        sql := 'DROP '||row.routine_type||' IF EXISTS '||row.routine_name||list;

        /* Execute the DDL statement. */
        EXECUTE sql;

        /* Assign success flag of 0. */
        lv_retval := 0;

      END LOOP;

      /* Close the routine_cursor. */
      CLOSE routine_cursor;

      /* Return the success (0) or failure (1) flag. */
      RETURN lv_retval;

    END
    $$ LANGUAGE plpgsql;

Now create a series of overloaded hello functions, like:

    CREATE OR REPLACE
      FUNCTION hello()
      RETURNS text AS
    $$
    DECLARE
      output  VARCHAR;
    BEGIN
      SELECT 'Hello World!' INTO output;
      RETURN output;
    END
    $$ LANGUAGE plpgsql;

    CREATE OR REPLACE
      FUNCTION hello(whom text)
      RETURNS text AS
    $$
    DECLARE
      output  VARCHAR;
    BEGIN
      SELECT CONCAT('Hello ',whom,'!') INTO output;
      RETURN output;
    END
    $$ LANGUAGE plpgsql;

    CREATE OR REPLACE
      FUNCTION hello(id int, whom text)
      RETURNS text AS
    $$
    DECLARE
      output  VARCHAR;
    BEGIN
      SELECT CONCAT('[',id,'] Hello ',whom,'!') INTO output;
      RETURN output;
    END
    $$ LANGUAGE plpgsql;

After you create the overloaded functions, you can query their status from the information_schema.routines table in the data dictionary:

    SELECT routine_name
    ,      specific_name
    ,      routine_type
    FROM   information_schema.routines
    WHERE  specific_catalog = current_database()
    AND    routine_schema = 'public'
    AND    routine_name = 'hello';

Which shows you the three versions of the hello function:

     routine_name | specific_name | routine_type
    --------------+---------------+--------------
     hello        | hello_18100   | FUNCTION
     hello        | hello_18101   | FUNCTION
     hello        | hello_18102   | FUNCTION
    (3 rows)

You can drop all versions of the hello functions by calling the drop_routine function:

    SELECT CASE
             WHEN drop_routine('hello','function') = 0
             THEN 'Success'
             ELSE 'Failure'
           END AS drop_routine;

It returns the following:

     drop_routine
    --------------
     Success
    (1 row)
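The drop_routine function assembles one DROP statement per overloaded signature. As a rough illustration of that string assembly only, here is a Python sketch; build_drop_statement is a hypothetical helper, and the argument type lists are assumed to be what the data dictionary reports for the three hello functions.

```python
def build_drop_statement(routine_type, routine_name, arg_types):
    """Assemble a DROP statement for one overloaded routine signature."""
    # Join the argument data types into a parenthesized, comma-delimited list.
    parameter_list = "(" + ",".join(arg_types) + ")"
    # Mirror the case rules: routine types in uppercase, routine names in lowercase.
    return f"DROP {routine_type.upper()} IF EXISTS {routine_name.lower()}{parameter_list}"


# Assumed signatures of the three overloaded hello functions.
hello_signatures = [[], ["text"], ["integer", "text"]]
statements = [build_drop_statement("function", "hello", s) for s in hello_signatures]

for statement in statements:
    print(statement)
```

Including the argument types is what lets each statement target exactly one overloaded version.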

As always, I hope this helps those looking for how to routinely test new functions and procedures.

PostgreSQL Tables

The most straightforward way to view the description of a PostgreSQL table is the \d command. For example, this lets you display an account_list table:

    \d account_list

Unfortunately, this shows you the table, indexes, and foreign key constraints. Often, you only want to see the list of columns in positional order. So, I wrote a little function to let me display only the table and columns.

There are a few techniques in the script that might seem new to some developers. For example, the data types of the return parameter values of a function that returns values from the data dictionary are specific to types used by the data dictionary. These specialized types are required because the SQL cursor gathers the information from the data dictionary in the information_schema, and most of these types can’t be cast as variable length strings.

It would be a simple assumption that the data dictionary strings implicitly cast to variable length strings, but that assumption is incorrect. While you can query them like VARCHAR values, they don’t cast to variable length strings in a function’s return structure. If you wrote a wrapper function that returned VARCHAR variables, you would probably get a result like this when you call your function:

    ERROR:  structure of query does not match function result type
    DETAIL:  Returned type information_schema.sql_identifier does not match expected type character varying in column 1.

The “character varying” is another name for a VARCHAR data type. Some notes will advise you to fix this type of error by using the column name and a %TYPE. The %TYPE anchors the data type in the function’s parameter list to the actual data type of the data dictionary’s table. You would implement that suggestion with code like:

    RETURNS TABLE ( table_schema      information_schema.columns.table_schema%TYPE
                  , table_name        information_schema.columns.table_name%TYPE
                  , ordinal_position  information_schema.columns.ordinal_position%TYPE
                  , column_name       information_schema.columns.column_name%TYPE
                  , data_type         information_schema.columns.data_type%TYPE
                  , is_nullable       information_schema.columns.is_nullable%TYPE ) AS

Unfortunately, your function would raise a NOTICE for every dynamically anchored column at runtime. The NOTICE messages would appear as follows for the describe_table function with anchored parameter values:

    psql:describe_table.sql:34: NOTICE:  type reference information_schema.columns.table_schema%TYPE converted to information_schema.sql_identifier
    psql:describe_table.sql:35: NOTICE:  type reference information_schema.columns.table_name%TYPE converted to information_schema.sql_identifier
    psql:describe_table.sql:36: NOTICE:  type reference information_schema.columns.ordinal_position%TYPE converted to information_schema.cardinal_number
    psql:describe_table.sql:37: NOTICE:  type reference information_schema.columns.column_name%TYPE converted to information_schema.sql_identifier
    psql:describe_table.sql:38: NOTICE:  type reference information_schema.columns.data_type%TYPE converted to information_schema.character_data
    psql:describe_table.sql:39: NOTICE:  type reference information_schema.columns.is_nullable%TYPE converted to information_schema.yes_or_no

As a rule, there’s a better solution when you know how to discover the underlying data types. You can discover the required data types with the following query of the pg_attribute catalog table, filtered on the information_schema.columns view:

    SELECT   attname
    ,        atttypid::regtype
    FROM     pg_attribute
    WHERE    attrelid = 'information_schema.columns'::regclass
    AND      attname IN ('table_schema','table_name','ordinal_position','column_name','data_type','is_nullable')
    ORDER BY attnum;

It returns:

         attname      |              atttypid
    ------------------+------------------------------------
     table_schema     | information_schema.sql_identifier
     table_name       | information_schema.sql_identifier
     ordinal_position | information_schema.cardinal_number
     column_name      | information_schema.sql_identifier
     is_nullable      | information_schema.yes_or_no
     data_type        | information_schema.character_data
    (6 rows)

Only the character_data type can be replaced with a VARCHAR data type; the others should be typed as shown above. Here’s the modified describe_table function:

    CREATE OR REPLACE
      FUNCTION describe_table (table_name_in  VARCHAR)
      RETURNS TABLE ( table_schema      information_schema.sql_identifier
                    , table_name        information_schema.sql_identifier
                    , ordinal_position  information_schema.cardinal_number
                    , column_name       information_schema.sql_identifier
                    , data_type         VARCHAR
                    , is_nullable       information_schema.yes_or_no ) AS
    $$
    BEGIN
      RETURN QUERY
      SELECT   c.table_schema
      ,        c.table_name
      ,        c.ordinal_position
      ,        c.column_name
      ,        CASE
                 WHEN c.character_maximum_length IS NOT NULL
                 THEN CONCAT(c.data_type, '(', c.character_maximum_length, ')')
                 ELSE
                   CASE
                     /* Append a precision only when the dictionary reports one;
                        dates, timestamps, and text columns keep the bare type name. */
                     WHEN c.numeric_precision IS NOT NULL
                     THEN CONCAT(c.data_type, '(', c.numeric_precision::text, ')')
                     ELSE c.data_type
                   END
               END AS modified_type
      ,        c.is_nullable
      FROM     information_schema.columns c
      WHERE    c.table_schema NOT IN ('information_schema', 'pg_catalog')
      AND      c.table_name = table_name_in
      ORDER BY c.table_schema
      ,        c.table_name
      ,        c.ordinal_position;
    END;
    $$ LANGUAGE plpgsql;

If you’re new to PL/pgSQL table functions, you can check my basic tutorial on table functions. You call the describe_table table function with the following syntax:

    SELECT * FROM describe_table('account_list');

It returns:

     table_schema |  table_name  | ordinal_position |   column_name    |        data_type         | is_nullable
    --------------+--------------+------------------+------------------+--------------------------+-------------
     public       | account_list |                1 | account_list_id  | integer(32)              | NO
     public       | account_list |                2 | account_number   | character varying(10)    | NO
     public       | account_list |                3 | consumed_date    | date                     | YES
     public       | account_list |                4 | consumed_by      | integer(32)              | YES
     public       | account_list |                5 | created_by       | integer(32)              | NO
     public       | account_list |                6 | creation_date    | timestamp with time zone | NO
     public       | account_list |                7 | last_updated_by  | integer(32)              | NO
     public       | account_list |                8 | last_update_date | timestamp with time zone | NO
    (8 rows)
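The data_type labels in the output above combine the type name with a length or precision. A minimal Python sketch of that labeling rule follows; display_type is a hypothetical helper, and it assumes that a column with neither a character length nor a numeric precision should show the bare type name, which matches the date and timestamp rows shown.

```python
def display_type(data_type, character_maximum_length=None, numeric_precision=None):
    """Build a display label such as 'character varying(10)' or 'integer(32)'."""
    # Character types report a maximum length.
    if character_maximum_length is not None:
        return f"{data_type}({character_maximum_length})"
    # Numeric types report a precision.
    if numeric_precision is not None:
        return f"{data_type}({numeric_precision})"
    # Assumption of this sketch: everything else shows only the type name.
    return data_type


print(display_type("character varying", character_maximum_length=10))
print(display_type("integer", numeric_precision=32))
print(display_type("date"))
```

The three calls reproduce the labels of the account_number, account_list_id, and consumed_date rows.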

As always, I hope this helps those looking for a solution to functions that wrap the data dictionary and display table data from the PostgreSQL data dictionary.

PL/pgSQL Function

This post shows how to write an overloaded set of hello_world functions in PostgreSQL PL/pgSQL. The following code lets you write and test overloaded functions and see how they handle null, zero-length string, and string values.

    -- Drop the overloaded functions.
    DROP FUNCTION IF EXISTS hello_world(), hello_world(whom VARCHAR);

    -- Create the function.
    CREATE FUNCTION hello_world()
    RETURNS text AS
    $$
    DECLARE
      output  VARCHAR(20);
    BEGIN
      /* Query the string into a local variable. */
      SELECT 'Hello World!' INTO output;

      /* Return the output text variable. */
      RETURN output;
    END
    $$ LANGUAGE plpgsql;

    -- Create the function.
    CREATE FUNCTION hello_world(whom VARCHAR)
    RETURNS text AS
    $$
    DECLARE
      output  VARCHAR(20);
    BEGIN
      /* Query the string into a local variable. */
      IF whom IS NULL OR LENGTH(whom) = 0 THEN
        SELECT 'Hello World!' INTO output;
      ELSE
        SELECT CONCAT('Hello ', whom, '!') INTO output;
      END IF;

      /* Return the output text variable. */
      RETURN output;
    END
    $$ LANGUAGE plpgsql;

    -- Call the function.
    SELECT hello_world() AS output;
    SELECT hello_world(NULL) AS output;
    SELECT hello_world('') AS output;
    SELECT hello_world('Harry') AS output;

It should print:

        output
    --------------
     Hello World!
    (1 row)

        output
    --------------
     Hello World!
    (1 row)

        output
    --------------
     Hello World!
    (1 row)

        output
    --------------
     Hello Harry!
    (1 row)
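For comparison, here is a minimal Python sketch of the same null, zero-length string, and string handling. Python does not overload functions by signature, so this sketch assumes a single function with a default argument stands in for the two PL/pgSQL functions.

```python
from typing import Optional


def hello_world(whom: Optional[str] = None) -> str:
    """Return a greeting; None and '' both fall back to 'World'."""
    # Same test as: IF whom IS NULL OR LENGTH(whom) = 0 THEN
    if whom is None or len(whom) == 0:
        return "Hello World!"
    return f"Hello {whom}!"


# The four calls mirror the four SELECT statements above.
for argument in ((), (None,), ("",), ("Harry",)):
    print(hello_world(*argument))
```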

As always, I hope this helps those looking for the basics and how to solve problems.

PostgreSQL 14 Install

This post is a step-by-step install guide to PostgreSQL 14 on Windows 10. It sometimes makes me curious that folks want a summary of screen shots from a Microsoft Software Installer (MSI) because they always appear to me as straightforward.

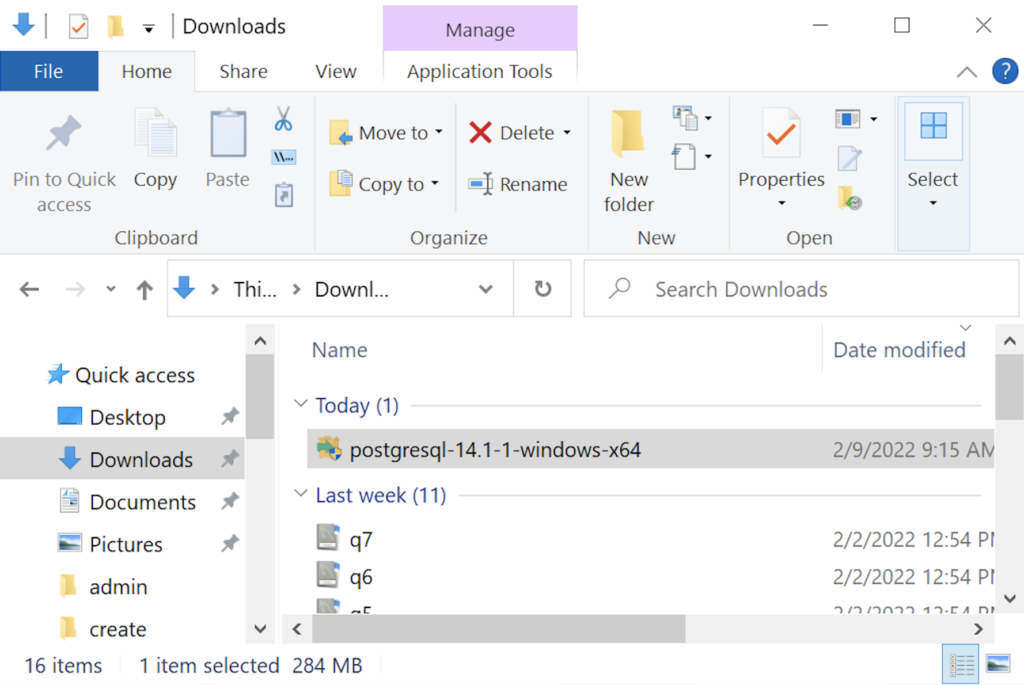

This walks you through installing PostgreSQL 14, EDB’s version of Apache, supplemental connection libraries, and pgAdmin4. You can find the post-installation steps in my earlier Configure PostgreSQL 14 post.

PostgreSQL Database 14 Installation Steps

- The first thing you need to do is download the PostgreSQL MSI file, which should be in your C:\Users\username\Downloads directory. You can double-click on the MSI file.

- After double-clicking on the MSI file, you are prompted by User Account Control to allow the PostgreSQL MSI to make changes to your device. Clicking the Yes button is the only way forward.

- The Setup – PostgreSQL dialog requires you click the Next button to proceed.

- The Installation Directory dialog prompts you for an installation directory. The default directory is C:\Program Files\PostgreSQL\14 and you should use it. Click the Next button to continue.

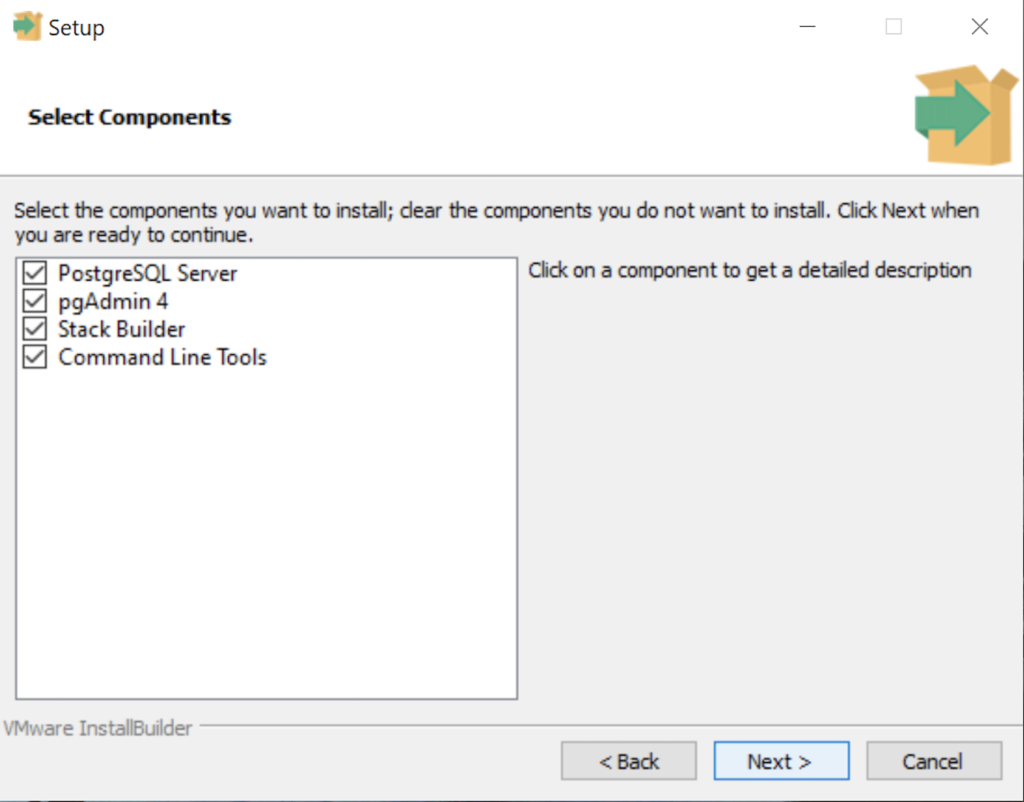

- The Select Components dialog prompts you to choose the products to install. You should choose all four – PostgreSQL Server, pgAdmin 4, Stack Builder, and Command Line Tools. Click the Next button to continue.

- The Password dialog prompts you for the PostgreSQL superuser password. In a development system for your local computer, you may want to use something straightforward like cangetin. Click the Next button to continue.

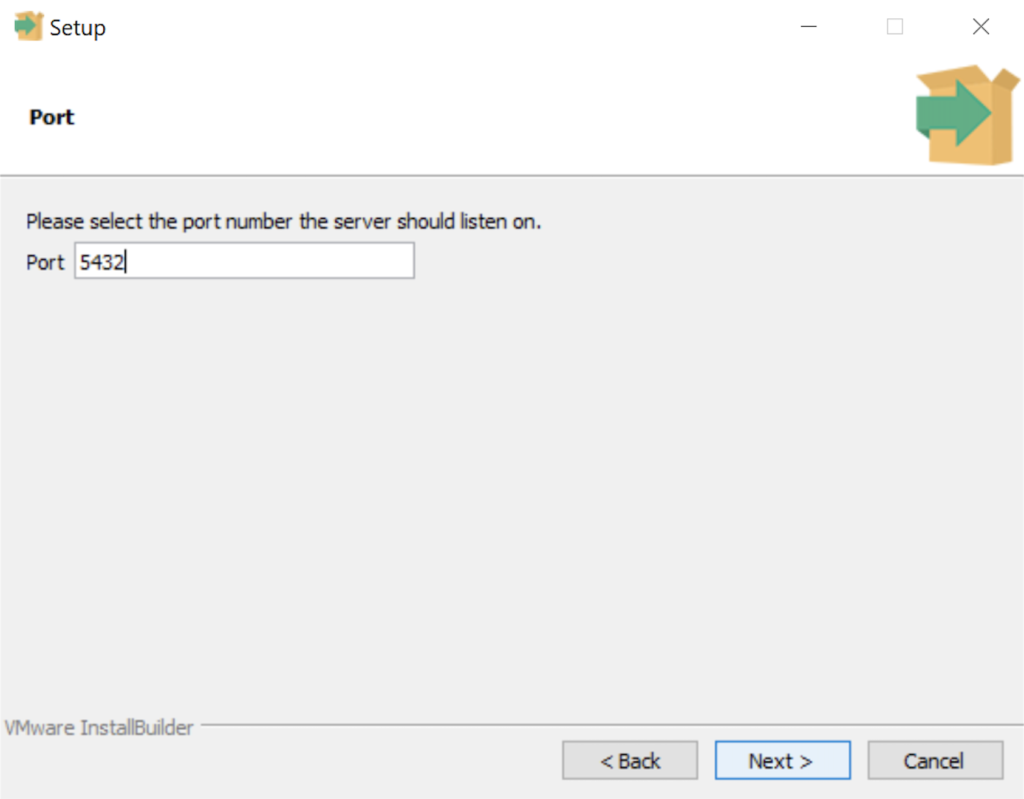

- The Setup dialog lets you select the port number for the PostgreSQL listener. Port 5432 is the standard port for a PostgreSQL database, and ports 5433 and 5434 are used sometimes. Click the Next button to continue.

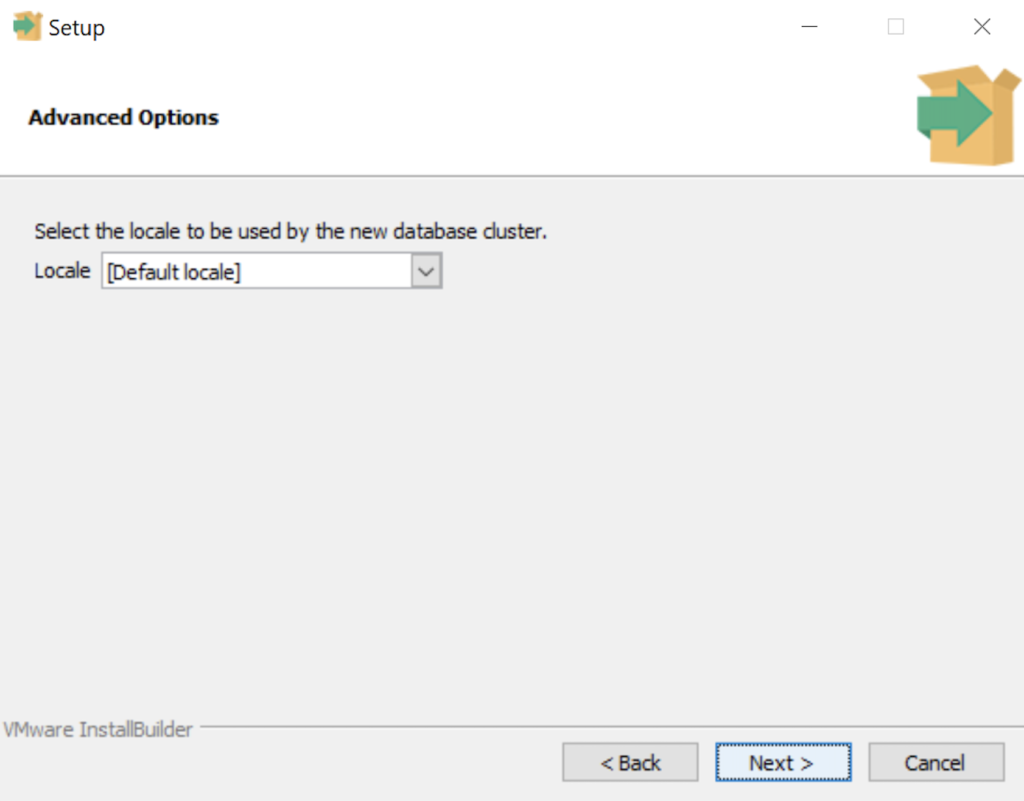

- The Advanced Options dialog lets you select the Locale for the database. As a rule for a development instance you should choose the Default locale. Click the Next button to continue.

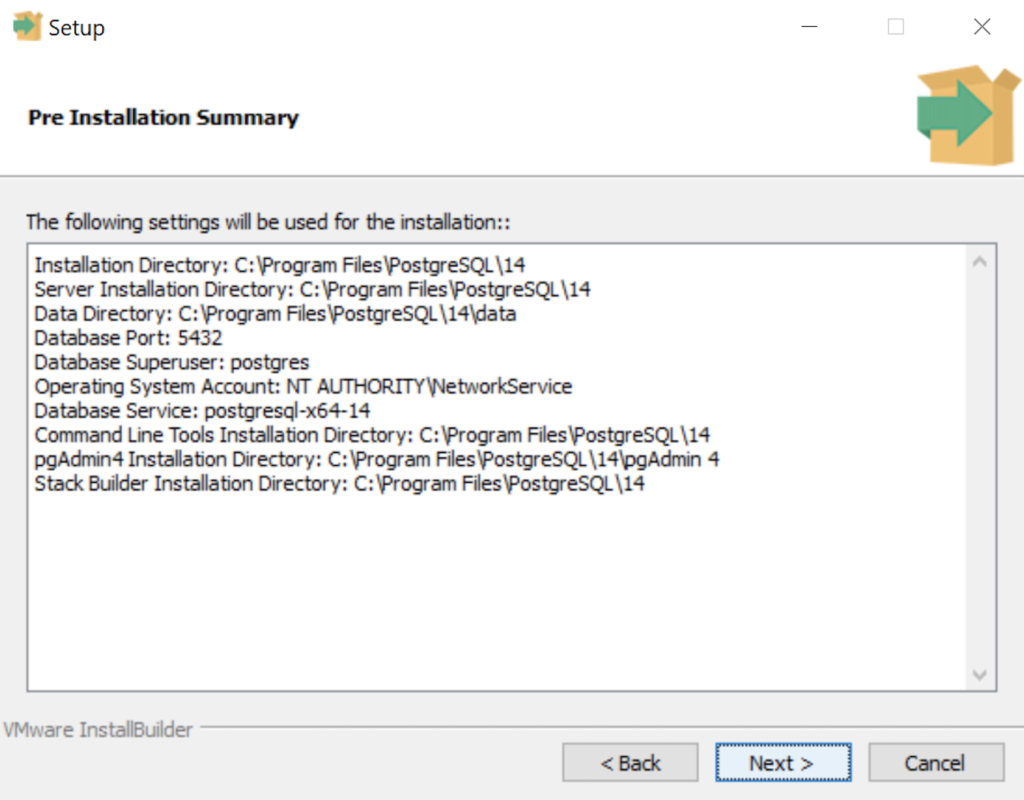

- The Pre Installation Summary dialog tells you what you’ve chosen to install. It gives you a chance to verify what you are installing. Click the Next button to continue.

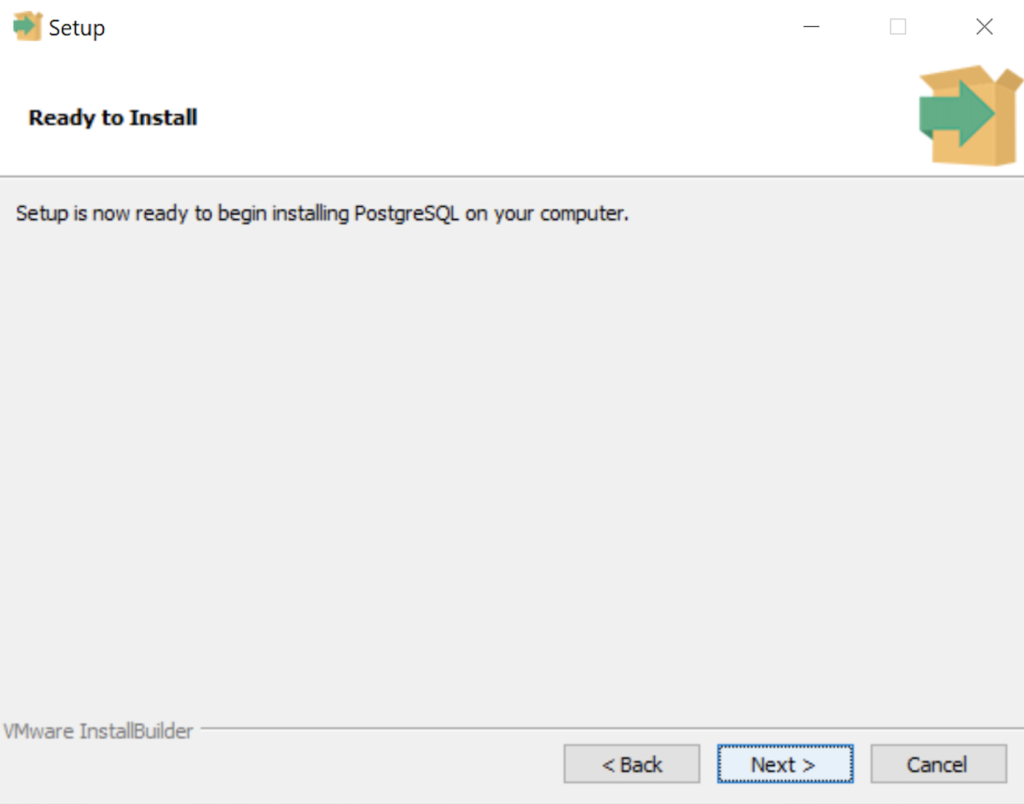

- The Ready to Install dialog lets you pause before you install the software. Click the Next button to continue.

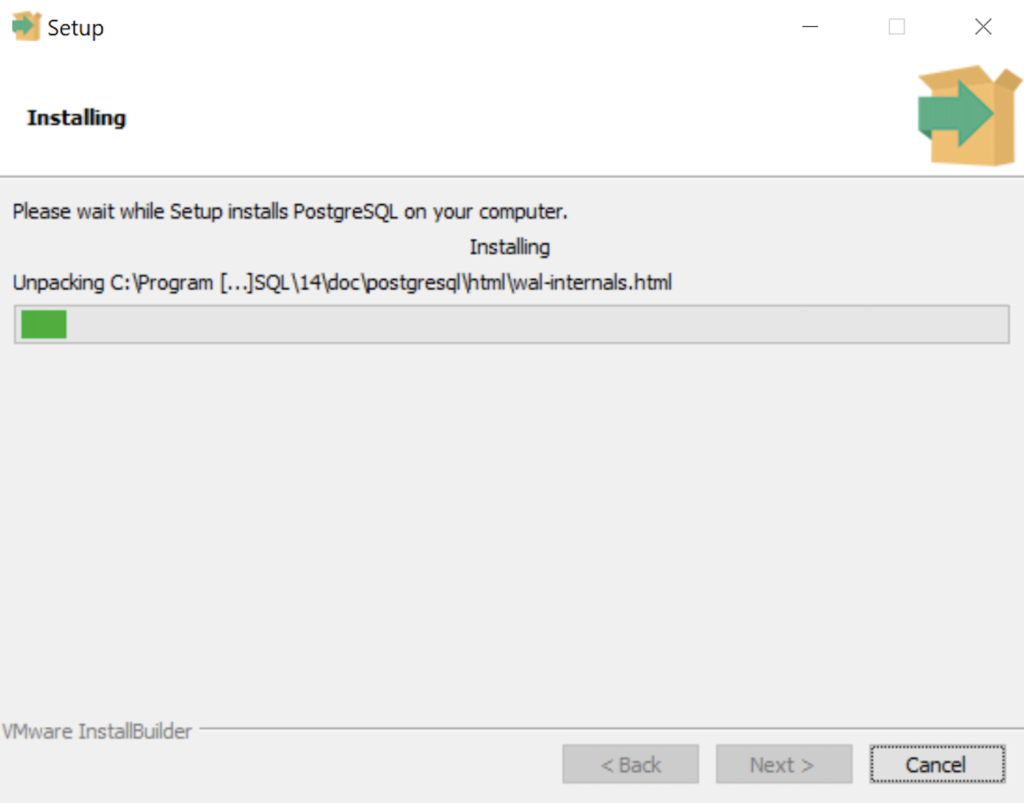

- The Installing dialog is a progress bar that will take several minutes to complete. When the progress bar completes, click the Next button to continue.

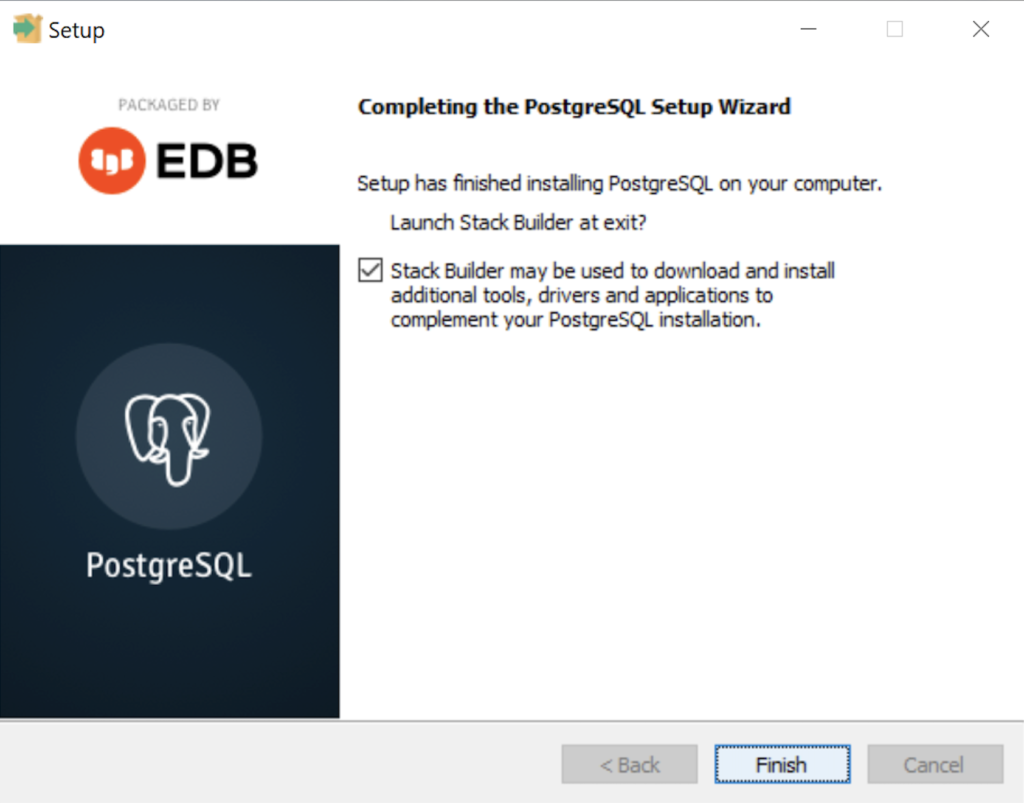

- The Completing the PostgreSQL Setup Wizard dialog tells you that the installation is complete. Click the Finish button to complete the PostgreSQL installation.

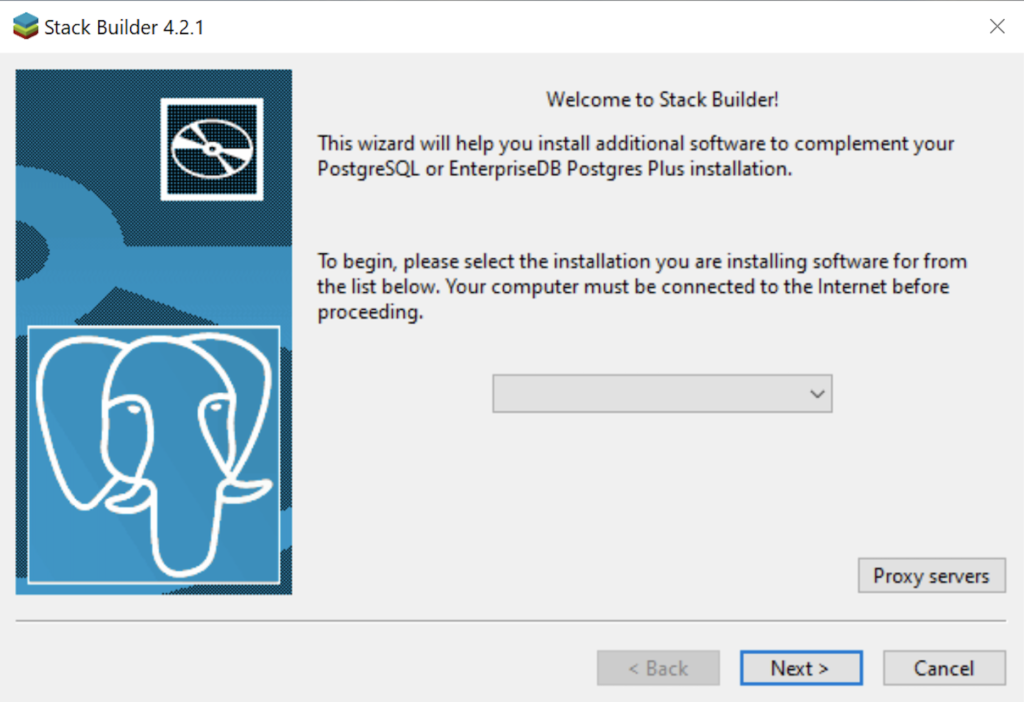

- The Welcome to Stack Builder! dialog lets you choose an installation from those on your computer to build a software stack. Click the drop down box to choose an installation.

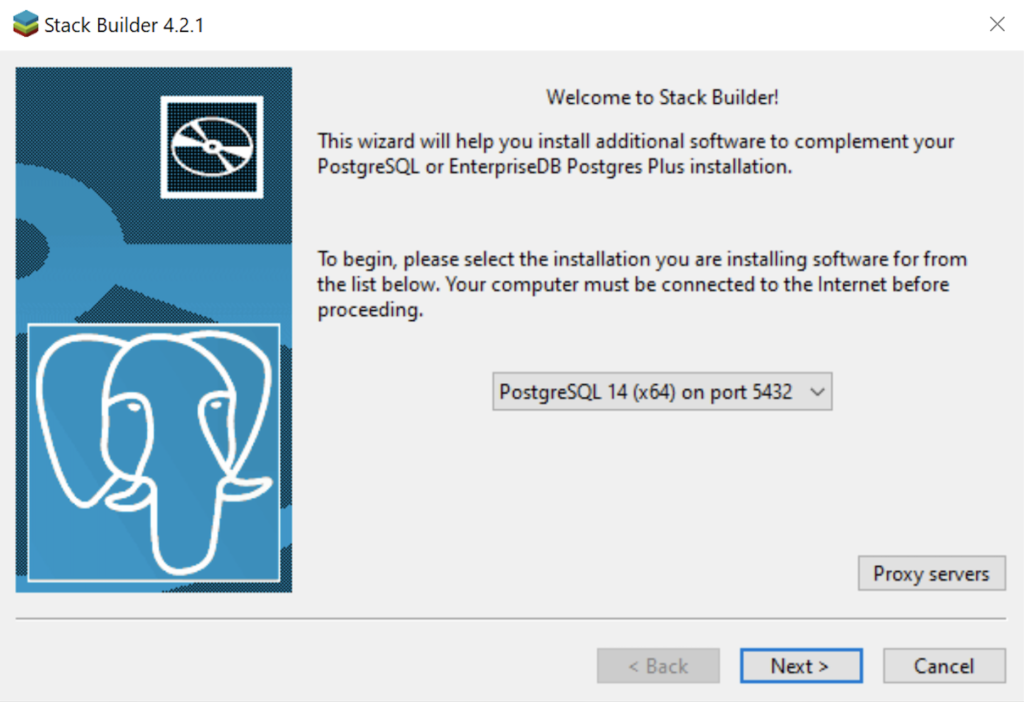

- The second copy of the Welcome to Stack Builder! dialog shows the choice of the PostgreSQL installation you just completed. Click on the Next button to continue.

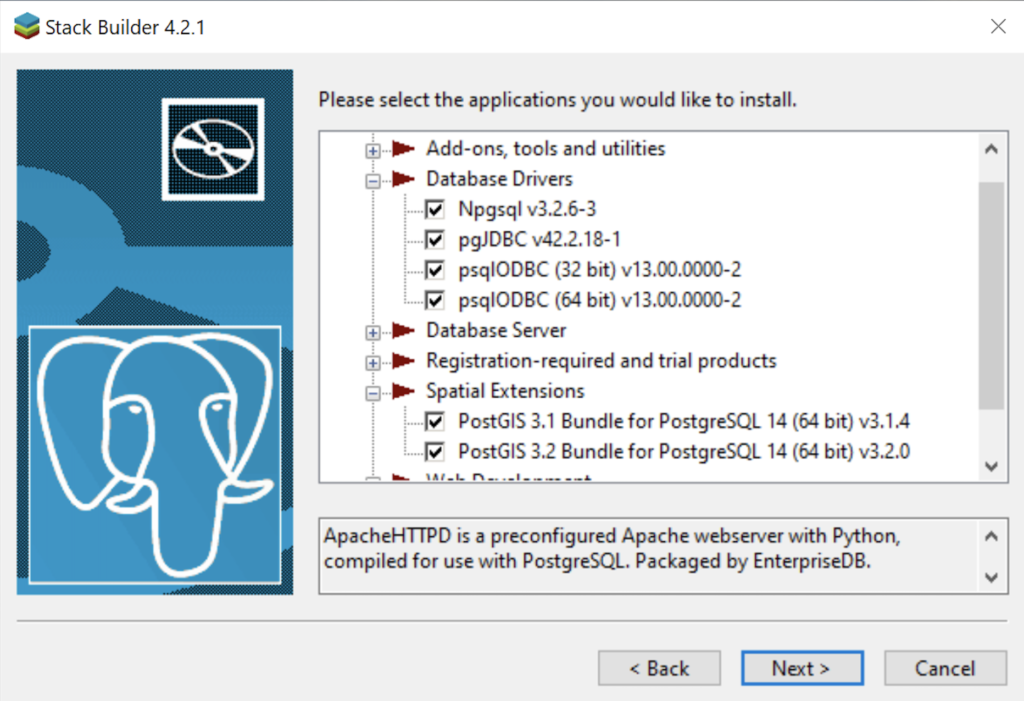

- The Stack Builder dialog prompts you to choose the products to install. You should choose all four database drivers – Npgsql, pgJDBC, psqlODBC, psqlODBC; and the PostGIS 3.1 and PostGIS 3.2 Bundles for PostgreSQL. Then, click the Next button to continue.

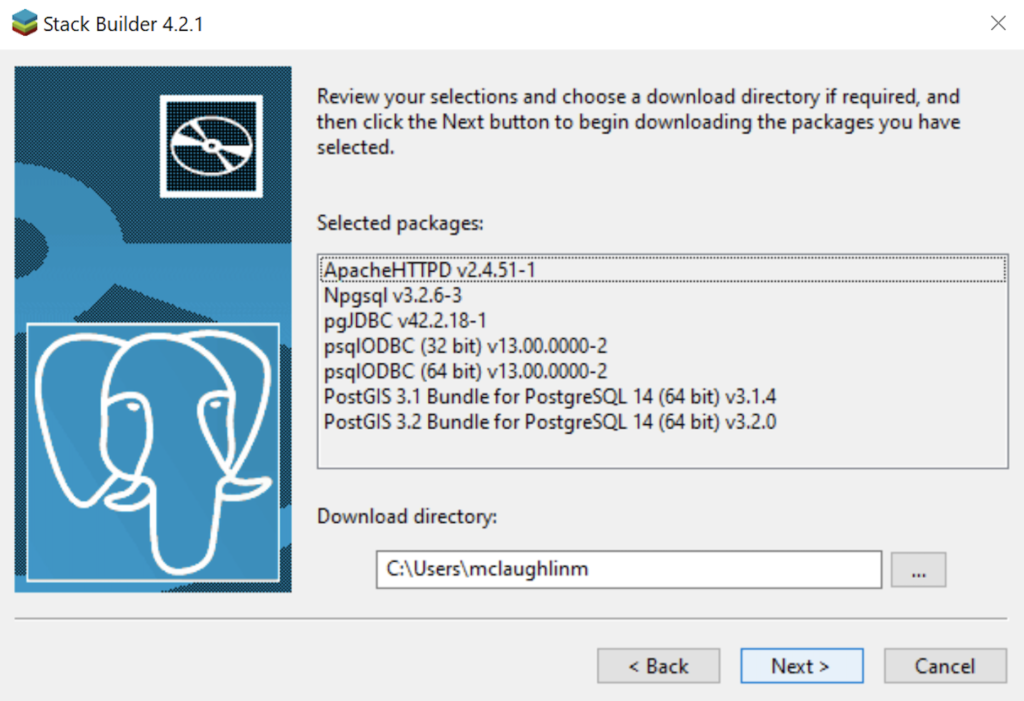

- The Stack Builder dialog shows you the products you will install. It should list all four database drivers – Npgsql, pgJDBC, psqlODBC, psqlODBC; and the PostGIS 3.1 and PostGIS 3.2 Bundles for PostgreSQL. Click the Next button to continue.

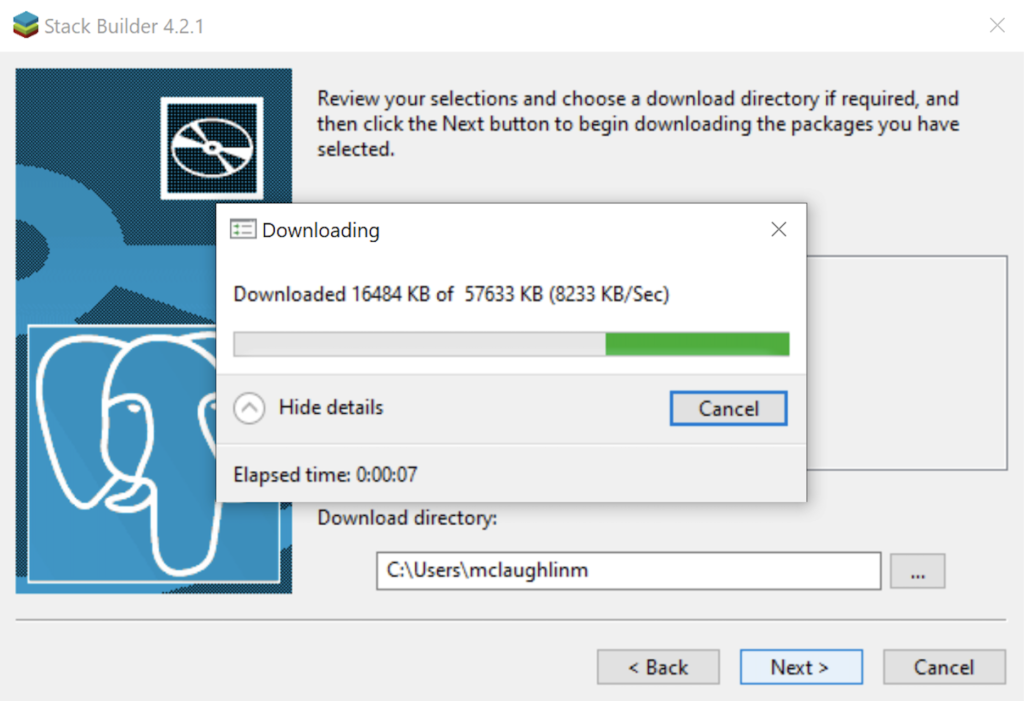

- The Stack Builder dialog shows a download progress bar subdialog, which may take some time to complete. The Stack Builder dialog’s Progress Bar automatically advances to the next dialog box.

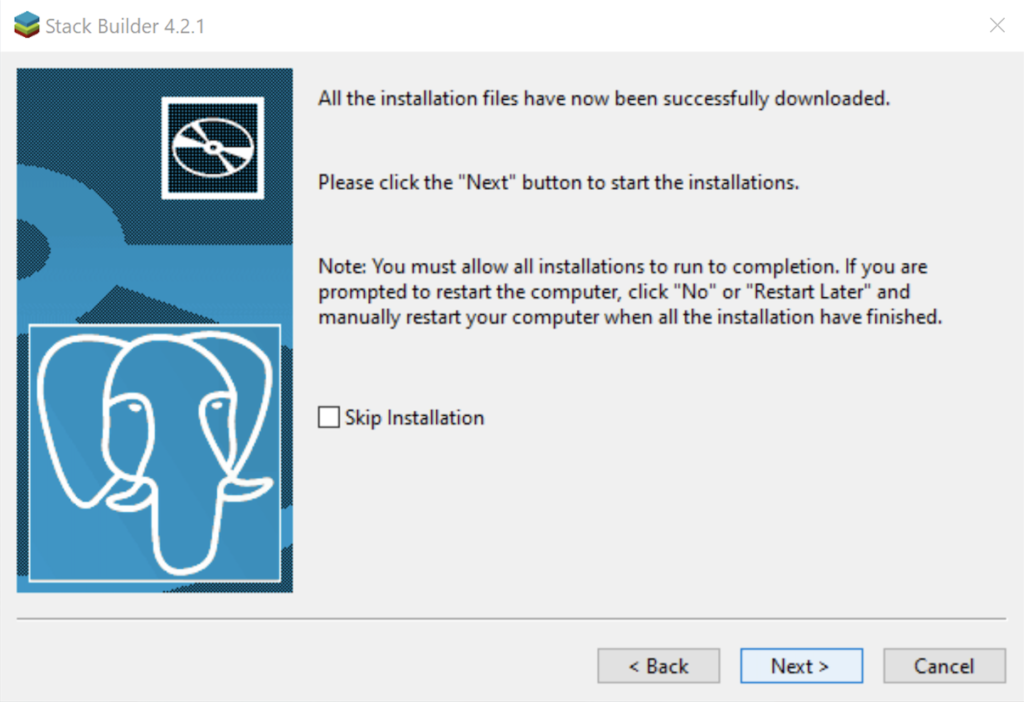

- The Stack Builder dialog tells you the products you downloaded. Click the Next button to continue the developer stack.

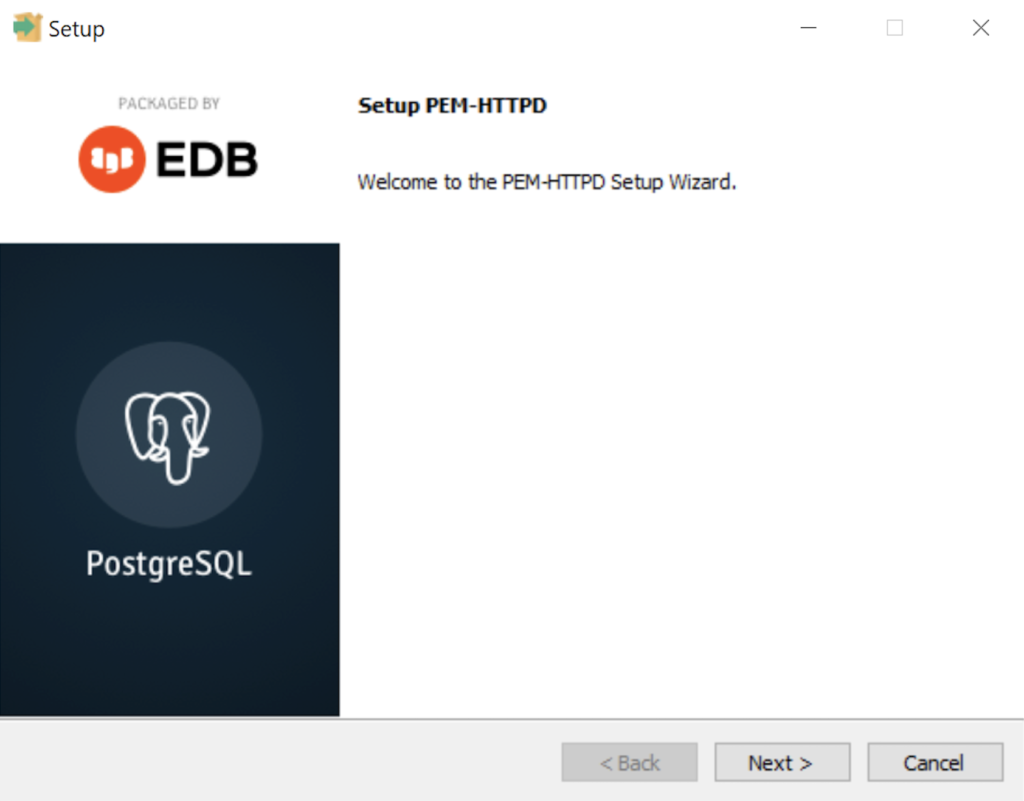

- The Setup dialog advises that you are installing the PEM-HTTPD Setup Wizard. Click the Next button to continue.

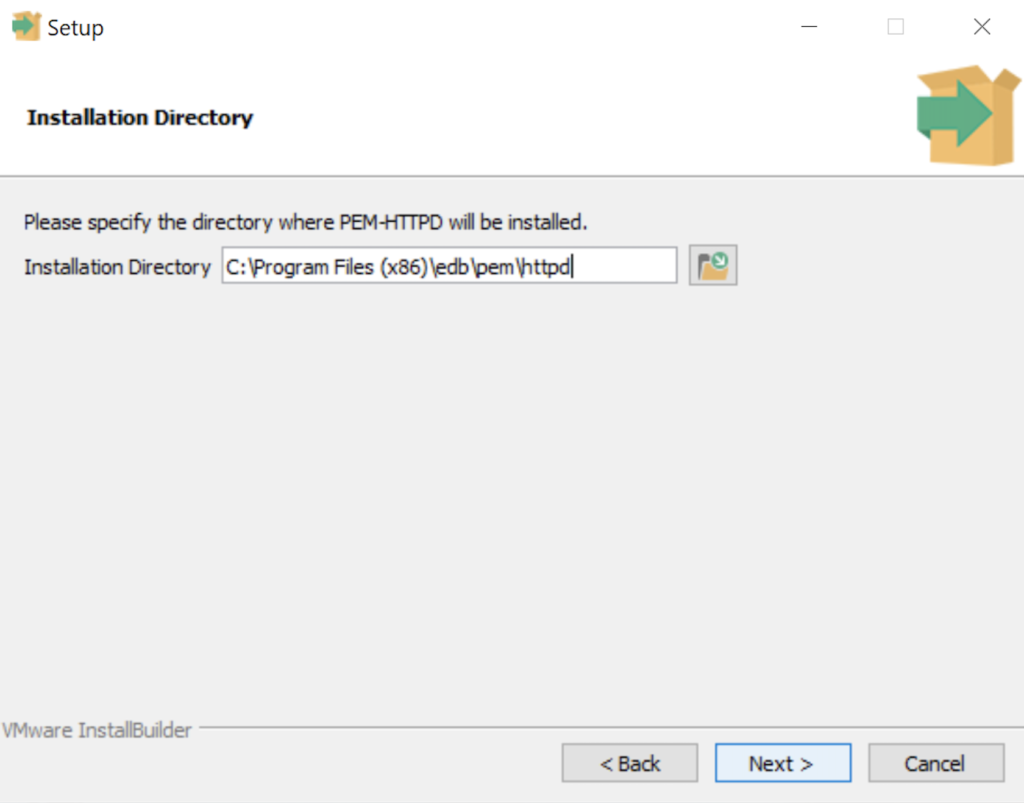

- The Installation Directory dialog prompts you for an installation directory. The default directory is C:\Program Files (x86)\edb\pem\httpd and you should use it because that’s where 64-bit Windows 10 puts 32-bit programs and libraries. Click the Next button to continue.

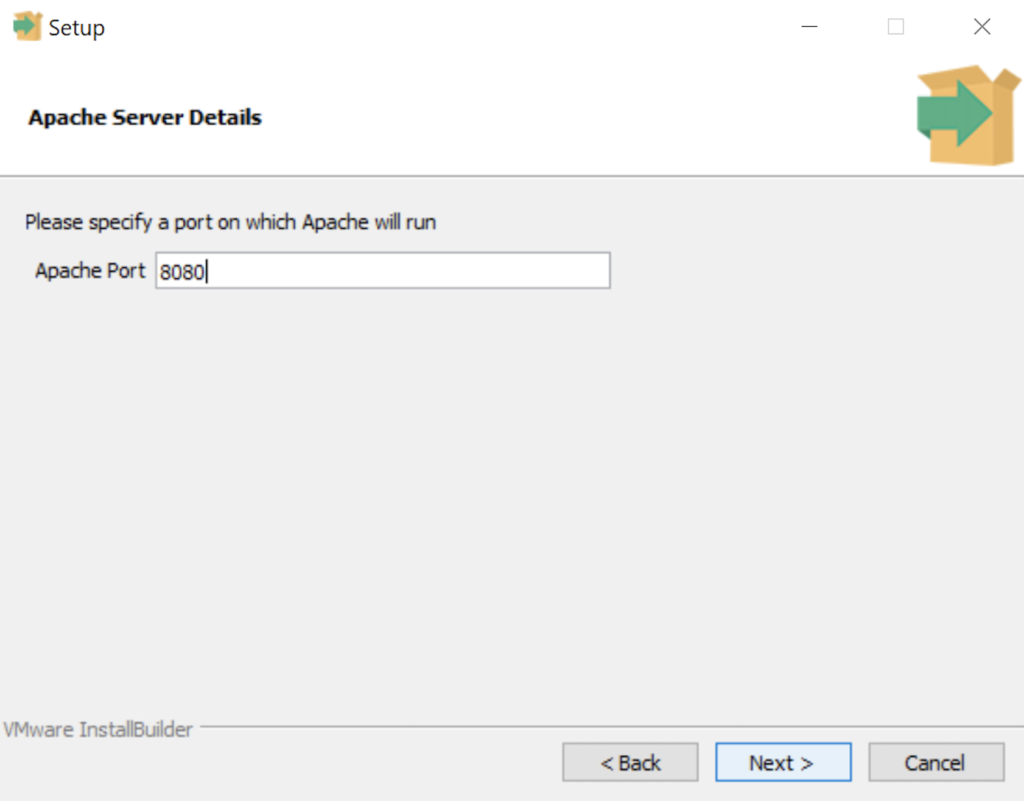

- The Setup dialog lets you select the port number for the HTTP listener. Port 8080 is a common alternative to the standard HTTP port 80, and ports 8081, 8082, and so forth are used when you have more than one HTTP listener on a single server or workstation. Click the Next button to continue.

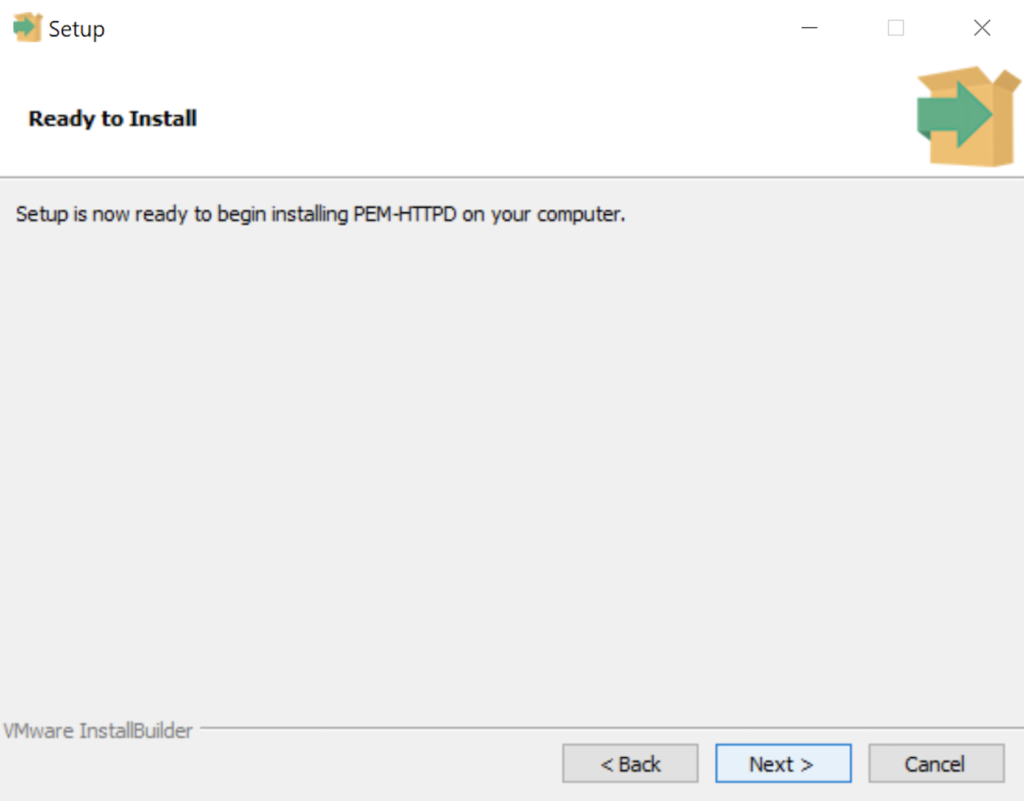

- The Ready to Install dialog lets you pause before you install the software. Click the Next button to continue.

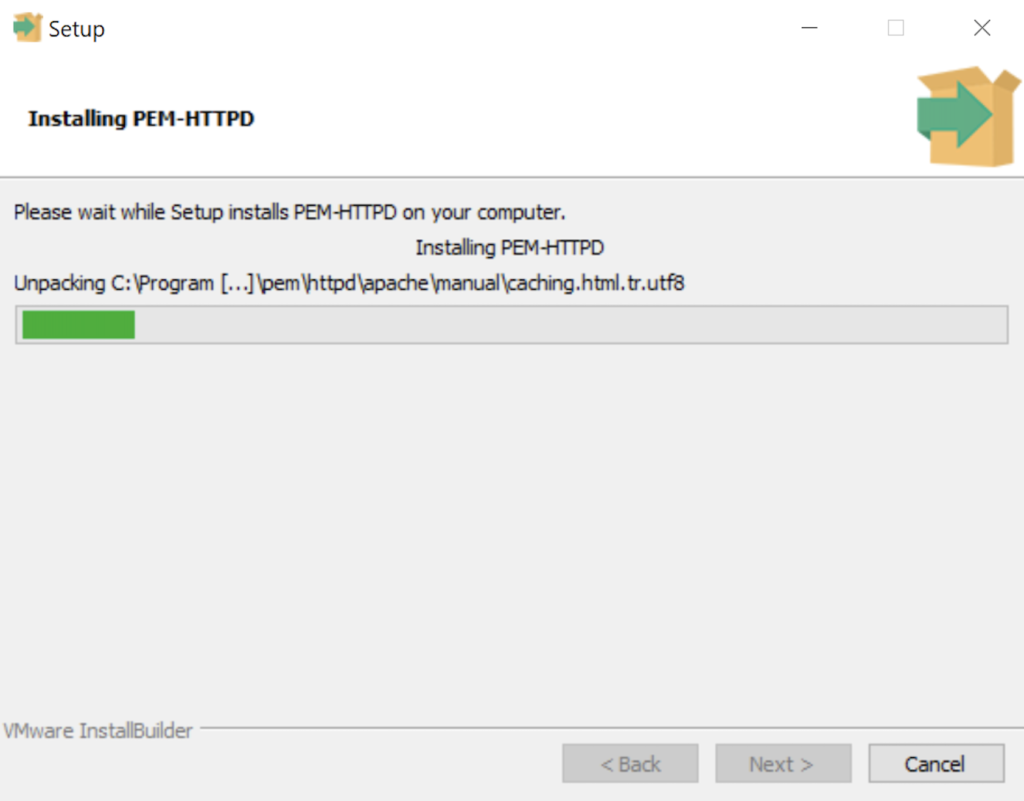

- The Installing dialog is a progress bar that will take several minutes to complete. When the progress bar completes, click the Next button when it becomes available to continue.

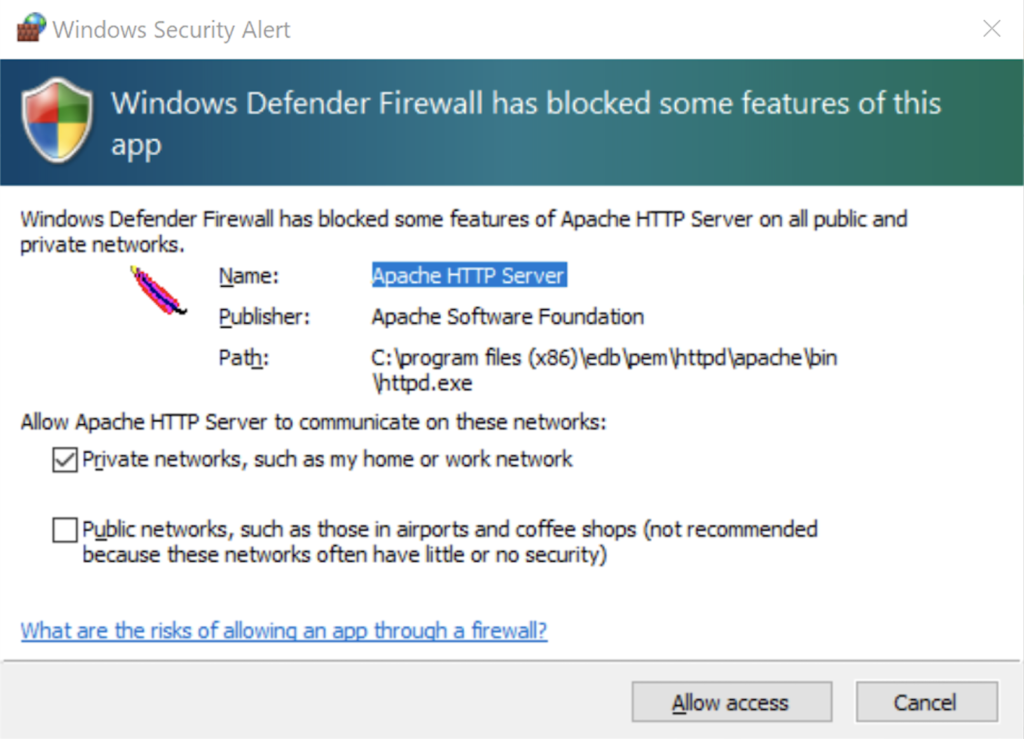

- The Windows Security Alert dialog asks you to allow the Apache HTTP Server to use port 8080. Click the Private networks, such as my home or work network checkbox and then the Allow access button to continue.

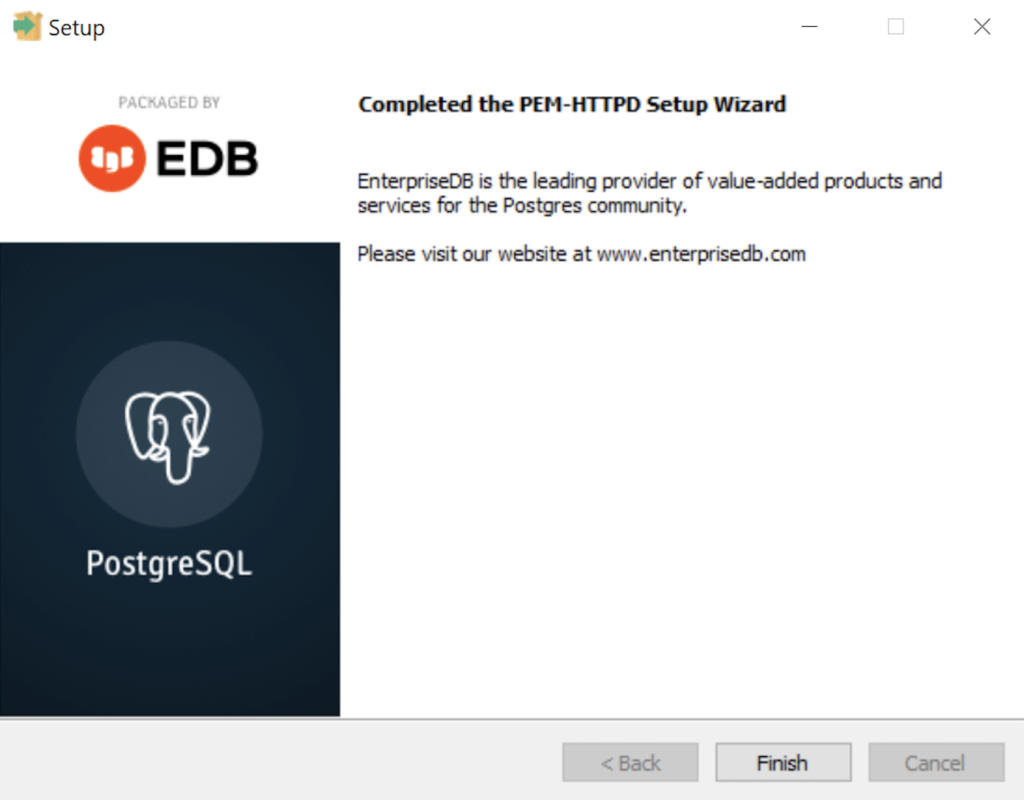

- The Setup dialog advises that you have completed the installation of the PEM-HTTPD Setup Wizard. Click the Finish button to continue.

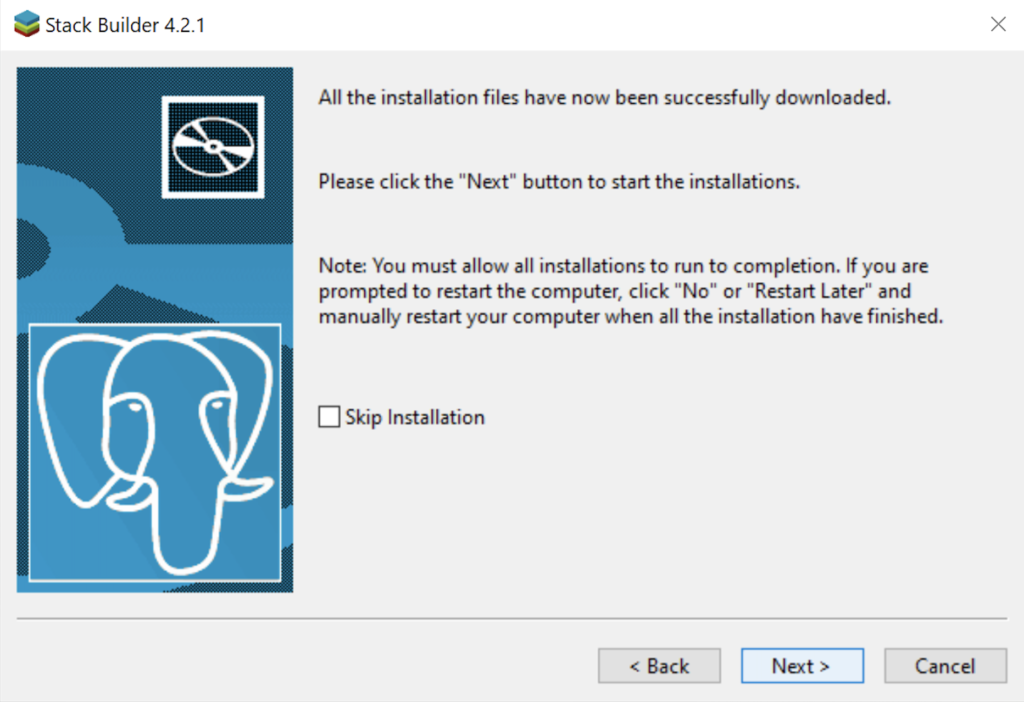

- The Stack Builder dialog advises you that all four database drivers – Npgsql, pgJDBC, psqlODBC, psqlODBC are downloaded and ready to install. Click the Next button to continue.

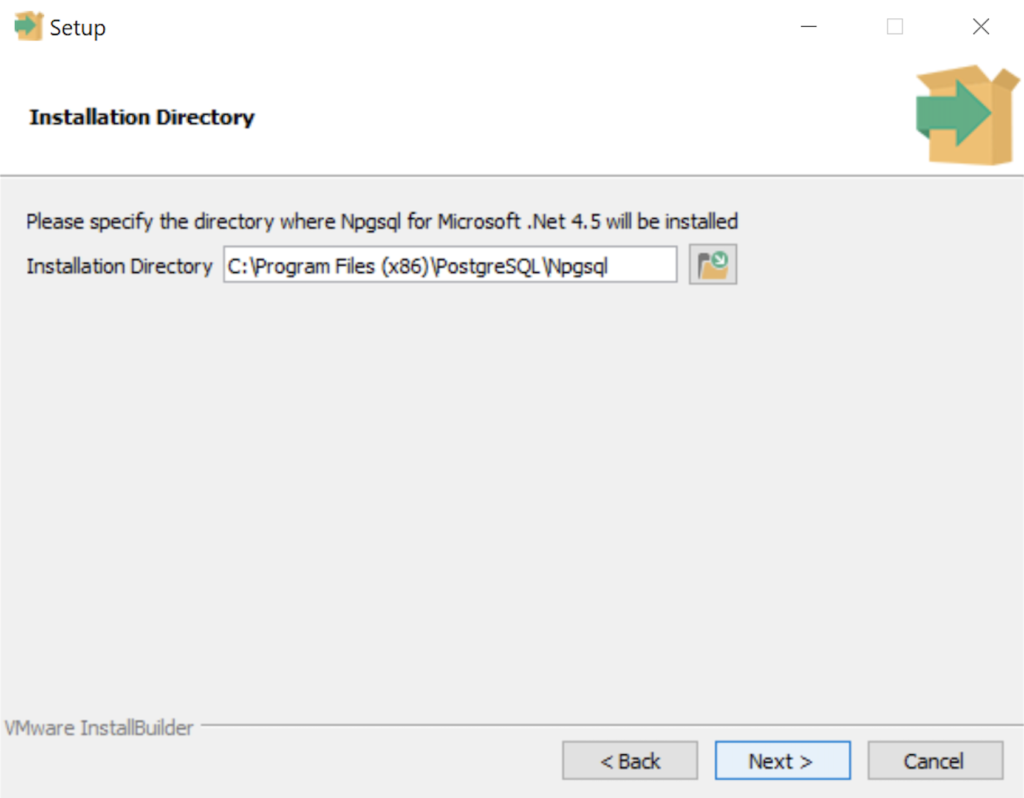

- The Installation Directory dialog prompts you for an installation directory. The default directory is C:\Program Files (x86)\PostgreSQL\Npgsql and you should use it because that’s where 64-bit Windows 10 puts 32-bit libraries and the subdirectory meets the standard installation convention for Microsoft .Net libraries. Click the Next button to continue.

- The Ready to Install dialog lets you pause before you install the Npgsql software for Microsoft .Net. Click the Next button to continue.

- The Setup dialog advises that you have completed the installation of npgsql driver for Microsoft .Net. Click the Finish button to continue.

- The Setup dialog advises that you are installing the pgJDBC driver Setup Wizard. Click the Next button to continue.

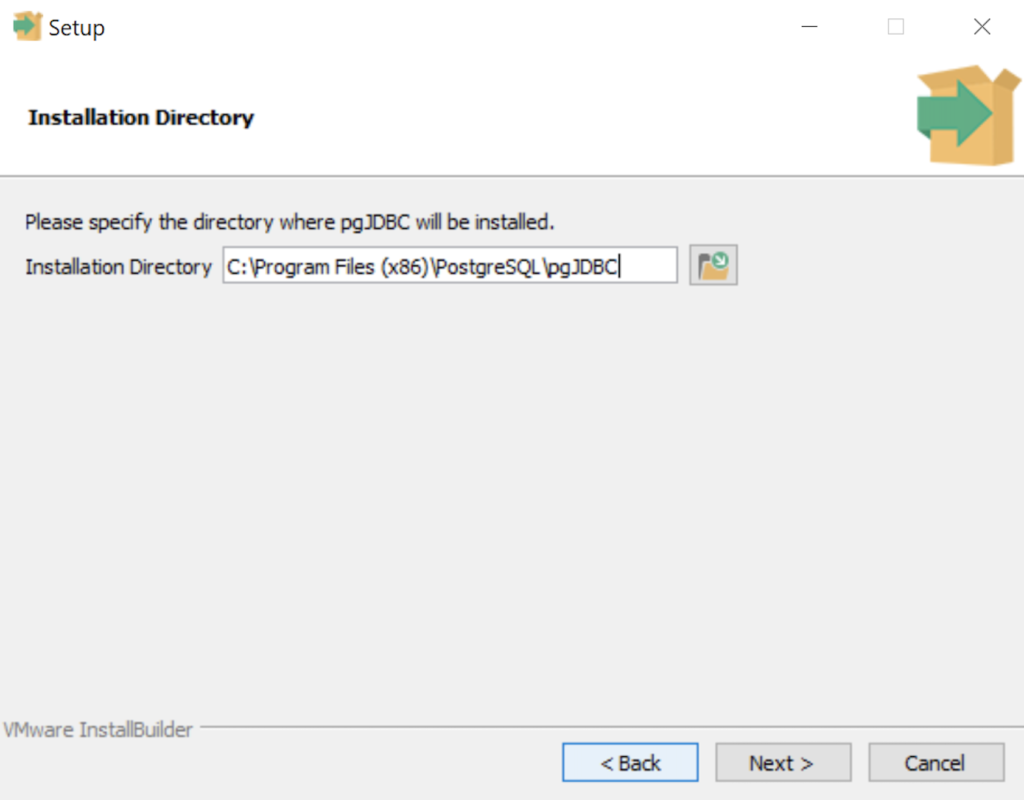

- The Installation Directory dialog prompts you for an installation directory. The default directory is C:\Program Files (x86)\PostgreSQL\pgJDBC and you should use it because that’s where 64-bit Windows 10 puts 32-bit libraries and the subdirectory meets the standard installation convention for libraries. Click the Next button to continue.

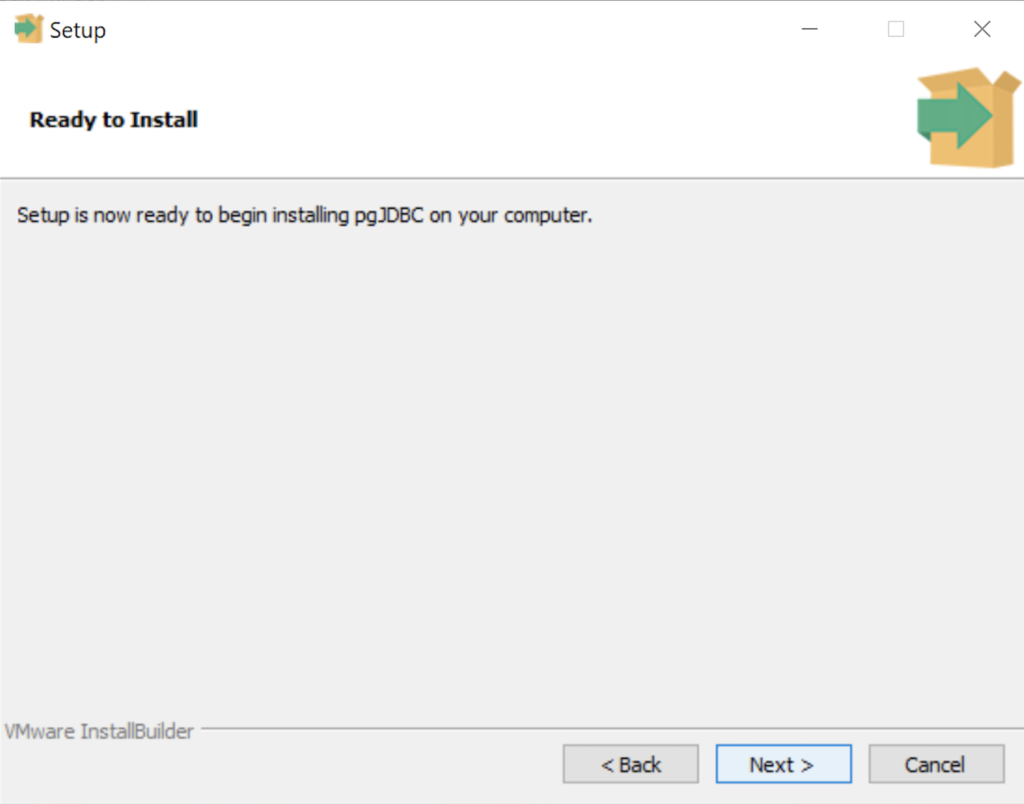

- The Ready to Install dialog lets you pause before you install the pgJDBC software. Click the Next button to continue.

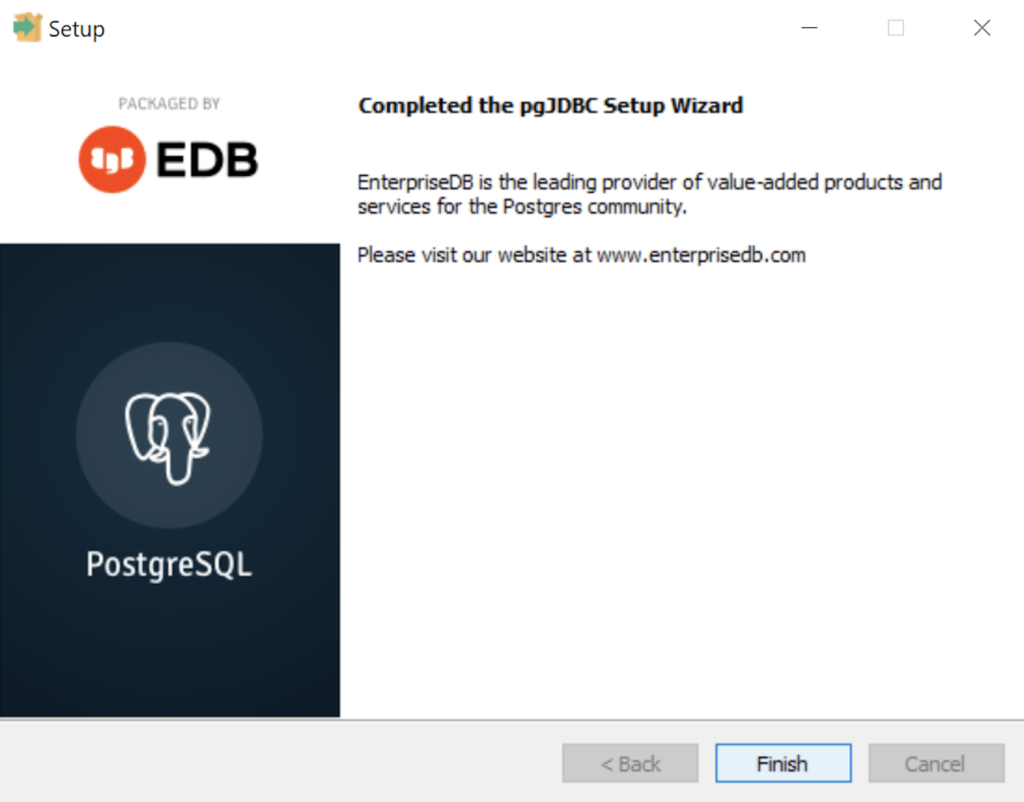

- The Setup dialog advises that you have completed the installation of pgJDBC driver. Click the Finish button to continue.

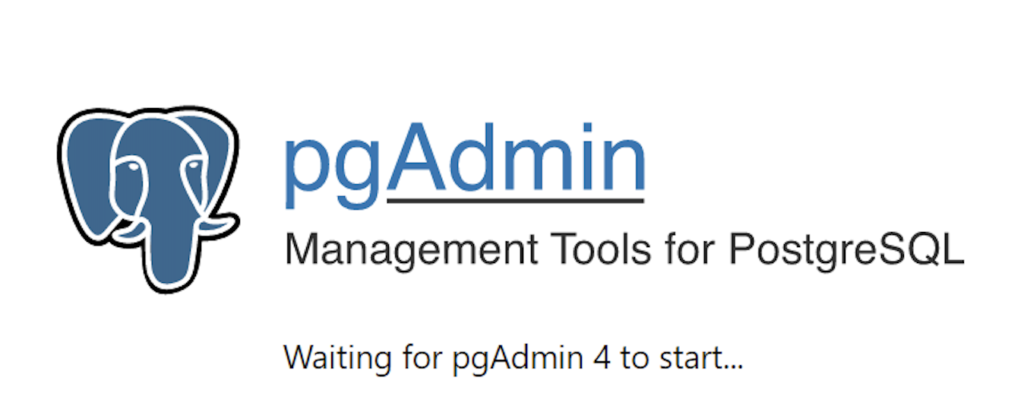

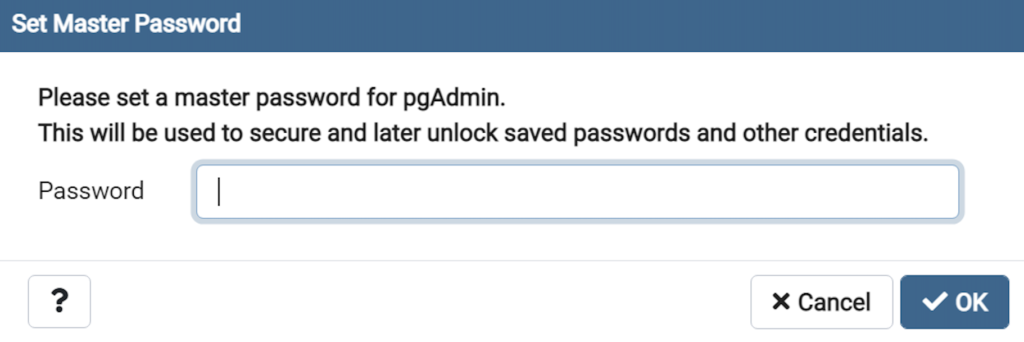

- The Advisory Message pgAdmin is Starting dialog is really telling you to be patient. It can take a couple minutes to launch pgAdmin.

- The Password dialog prompts you for the pgAdmin superuser password. In a development system for your local computer, you may want to use something straightforward like cangetin. Click the Next button to continue.

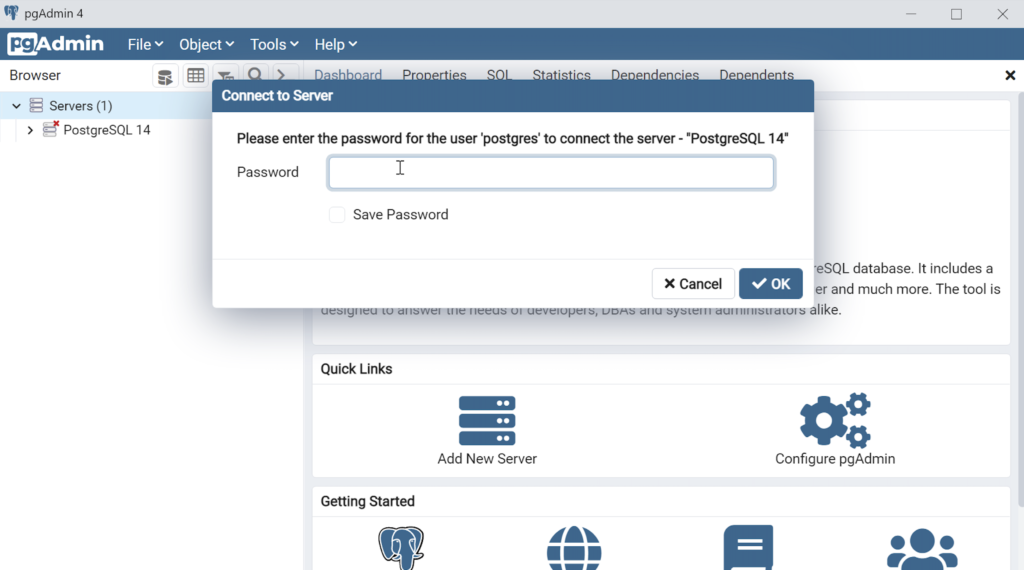

- Enter your password from the earlier step and click the OK button.

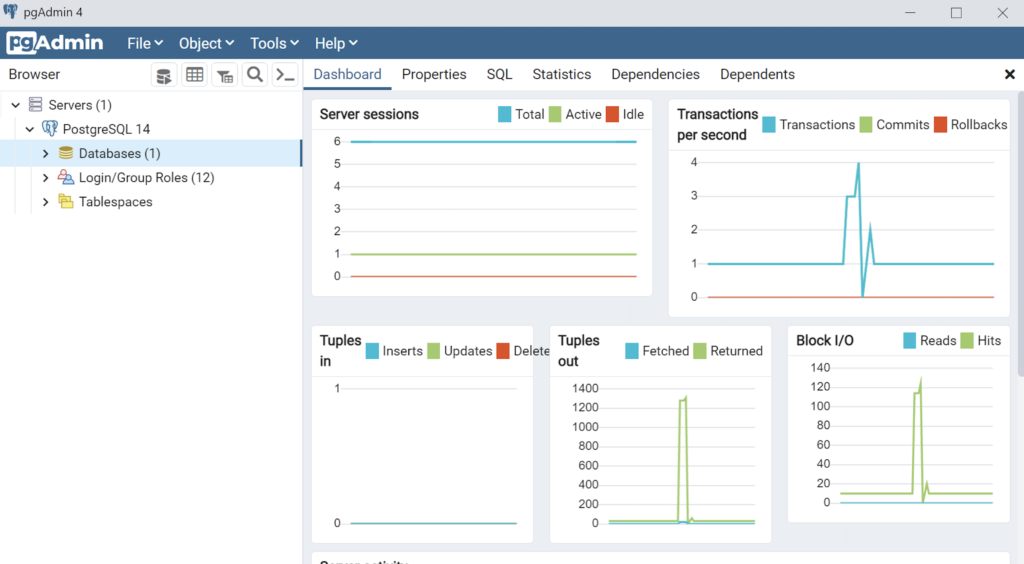

- This is the pgAdmin console. You should see one database and twelve login/group roles.

The foregoing walked you through the installation of PostgreSQL, the connector libraries and pgAdmin utility. Next, you have to make it real with configuration, which sets up the tablespace, database, and connectivity. I hope it helps those who would like to see the installation steps.

Configure PostgreSQL 14

After you install PostgreSQL 14 on Windows, there are a few steps to create a sandbox database. This post shows you those steps, including a couple Windows OS tasks you’ll need to complete. You should note that these instructions are for the PostgreSQL psql Command Line Interface (CLI).

Open a Command Prompt with Administrator privileges. It should give you a command prompt like the following:

    Microsoft Windows [Version 10.0.19042.1466]
    (c) Microsoft Corporation. All rights reserved.

    C:\Users\username>

Type psql and then press the Return or Enter key to launch the PostgreSQL CLI:

C:\Users\username>psql

Most likely, you’ll get the following error message. It means that your System Path environment variable doesn’t include the directory where the psql executable is found, and that’s why the command is not recognized.

    'psql' is not recognized as an internal or external command,
    operable program or batch file.

You can set it temporarily in your current Windows CLI with the following command:

set PATH=%PATH%;C:\Program Files\PostgreSQL\14\bin;

For those familiar with Windows CLI navigation in prior releases, the convention is to append a semicolon at the end of the item added to the %PATH% environment variable. If you were to put the semicolon between the %PATH% and new directory path there would be two semicolons together. While it won’t do any harm, it’s best to follow the new convention or style.
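The trailing-semicolon convention can be sketched in Python. This is a minimal illustration that works on a plain string rather than the live environment; append_to_path is a hypothetical helper, and the sample starting value is made up for the example.

```python
def append_to_path(path: str, directory: str) -> str:
    """Append a directory to a Windows-style PATH string (hypothetical helper)."""
    # Drop empty entries, which is what doubled semicolons would produce.
    entries = [entry for entry in path.split(";") if entry]
    if directory not in entries:
        entries.append(directory)
    # Follow the convention of ending the value with a single semicolon.
    return ";".join(entries) + ";"


# Made-up starting value that already ends with a semicolon.
current = r"C:\Windows\system32;C:\Windows;"
print(append_to_path(current, r"C:\Program Files\PostgreSQL\14\bin"))
```

Filtering out the empty entries is a design choice in the sketch: it keeps the result free of doubled semicolons whether or not the starting value already ends with one.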

CRITICAL NOTE: The rest of the post assumes you have set the correct %PATH% environment variable or added it to your System’s Path environment variable and restarted the Windows CLI after adding it through the GUI tool. The reason you need to restart the Windows CLI is that the %PATH% environment variable is inherited at startup and doesn’t change in an open Windows CLI shell.

Another common mistake some users make, at least those who have used an older version of the psql utility on a Linux distribution (or “distro”), is to type psql without any arguments to become the superuser. This error doesn’t occur in Linux because you most likely connected as the postgres user before trying to connect to the PostgreSQL database. A quick demonstration should illustrate the error and support explaining why it occurs on the Windows OS.

Attempting to connect to the PostgreSQL database as a non-postgres user:

C:\Users\username>psql

You should get the following error:

psql: error: connection to server at "localhost" (::1), port 5432 failed: fe_sendauth: no password supplied

This error occurs because you’re not the postgres user, and all other users must designate that they’re connecting to the superuser account. The correct syntax is:

C:\Users\username>psql -U postgres

Then, you’ll be prompted for the password that you set when you installed the PostgreSQL database. Enter that password from the installation at the prompt.

Password for user postgres:

psql (14.1)

WARNING: Console code page (437) differs from Windows code page (1252)

8-bit characters might not work correctly. See psql reference

page "Notes for Windows users" for details.

Type "help" for help.

postgres=#

The warning message is telling you that the console code page (437) differs from the Windows code page (1252), so the two character sets don’t match. We’ll discuss this more later but for the sake of almost all your work, it won’t matter. If the warning message bothers you, you can run the chcp command before launching psql when you open your Windows CLI:

chcp 1252

The chcp command changes your terminal character set to align with the Latin 1 character set, which enables you to use things like non-English accent characters (the umlaut over an o, or ö). You will see this when you connect after running that command:

    psql (14.2)
    Type "help" for help.

    postgres=#
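To see why mismatched code pages matter for 8-bit characters, here is a small Python sketch. It assumes Python's built-in cp437 and cp1252 codecs are a fair stand-in for the console and Windows code pages named in the warning.

```python
text = "ö"

# The same character is stored as a different byte in each code page.
cp437_bytes = text.encode("cp437")    # console code page 437
cp1252_bytes = text.encode("cp1252")  # Windows code page 1252

# Reading a code page 1252 byte as if it were code page 437 changes the character.
misread = cp1252_bytes.decode("cp437")

print(cp437_bytes, cp1252_bytes, misread)
```

Plain ASCII text is unaffected, which is why the warning won’t matter for most work.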

INFO: The chcp command is used to supplement the international keyboard and character set information, allowing MS-DOS to be used in other countries and with different languages. Before the chcp command can be used, the nlsfunc must be loaded, and the country.sys must be loaded into the OS configuration.

If you are an experienced Windows OS user, you may want to edit your Windows Registry to change this behavior automatically for each Windows CLI session. You can do that by opening the Windows Registry with the regedit command as an Administrator. In regedit, add an Autorun element with a value of chcp 1252 to this part of the registry:

Computer\HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Command Processor

This value automatically sets your Windows CLI to a Latin 1 character set every time you launch a terminal shell with the cmd.exe utility. You should only do this if you understand it completely.

Now that you’re connected as the superuser, let’s examine the steps to configure your playground or videodb database. There are five steps to configure your database and one step to connect and use the videodb database as the student user.

- Create Physical Directory

The location of the data directory changes with PostgreSQL installations. You can find it with the following psql CLI command:

postgres=# show data_directory;

This will return the following:

               data_directory
    -------------------------------------
     C:/Program Files/PostgreSQL/14/data
    (1 row)

While it is possible to store your subdirectory in the data directory, it will raise a warning message. It’s actually a better practice to install your local databases in another location on your file system.

Open a new Windows OS CLI to create a subdirectory (or in Windows parlance, a folder) where you will store your videodb database. Each new Windows OS CLI opens in your home directory. You need to create a physical video_db subdirectory in your home directory.

HINT: The name of the database inside PostgreSQL should map to the tablespace name and differ from the physical directory. Otherwise there is a chance you might get confused and make an error in the future.

In a new command line shell, you can use the following syntax to create the video_db subdirectory:

md video_db

- Create Tablespace

Returning to the original Windows CLI shell where you are connected as the postgres superuser, you can create a video_db tablespace with the following syntax:

CREATE TABLESPACE video_db OWNER postgres LOCATION 'C:\Users\username\video_db';

This will return the following:

CREATE TABLESPACE

You can query whether you successfully created the video_db tablespace with the following:

SELECT * FROM pg_tablespace;

It should return the following:

      oid  |  spcname   | spcowner | spcacl | spcoptions
    -------+------------+----------+--------+------------
      1663 | pg_default |       10 |        |
      1664 | pg_global  |       10 |        |
     16395 | video_db   |       10 |        |
    (3 rows)

- Create a Database

You need to know the PostgreSQL default collation before you create a new database. You can write the following query to determine the default collation:

postgres=# SELECT datname, datcollate FROM pg_database WHERE datname = 'postgres';

It should return something like this:

      datname  |         datcollate
    -----------+----------------------------
     postgres  | English_United States.1252
    (1 row)

The datcollate value of the postgres database needs to be the same value you use for the LC_COLLATE and LC_CTYPE parameters when you create a database. You can create a videodb database with the following syntax provided you’ve made appropriate substitutions for the LC_COLLATE and LC_CTYPE values below:

    CREATE DATABASE videodb
      WITH OWNER = postgres
      ENCODING = 'UTF8'
      TABLESPACE = video_db
      LC_COLLATE = 'English_United States.1252'
      LC_CTYPE = 'English_United States.1252'
      CONNECTION LIMIT = -1;

You can verify the creation of the videodb with the following command:

postgres=# \l

It should show you a display like the following:

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+----------+----------------------------+----------------------------+-----------------------

postgres | postgres | UTF8 | English_United States.1252 | English_United States.1252 |

template0 | postgres | UTF8 | English_United States.1252 | English_United States.1252 | =c/postgres +

| | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | English_United States.1252 | English_United States.1252 | =c/postgres +

| | | | | postgres=CTc/postgres

videodb | postgres | UTF8 | English_United States.1252 | English_United States.1252 |

(4 rows)

Then, you can assign a comment to the database with the following syntax:

COMMENT ON DATABASE videodb IS 'Video Store Database';

- Create a Role, Grant, and User

In this section you create a dba role, grant privileges on the videodb database to that role, and create a user with the role. There are three steps in this section.

- The first step creates a dba role:

CREATE ROLE dba WITH SUPERUSER;

- The second step grants all privileges on the videodb database to both the postgres superuser and the dba role:

GRANT ALL PRIVILEGES ON DATABASE videodb TO postgres; GRANT ALL PRIVILEGES ON DATABASE videodb TO dba;

Any work in pgAdmin4 requires a grant on the videodb database to the postgres superuser. The grant enables visibility of the videodb database in the pgAdmin4 console.

- The third step creates a student user with the dba role:

CREATE USER student WITH ROLE dba ENCRYPTED PASSWORD 'student';

After this step, you need to disconnect as the postgres superuser with the following command:

QUIT;

- It is possible that you may (and should if this is a new instance you are building) encounter an error when you try to connect as a sandboxed user. The syntax to connect as the student user is:

psql -d videodb -U student -W

All the options, which are preceded with a single dash (-), are case sensitive. The -d option sets the database for the connection. The -U option sets the user for the connection, and the -W option instructs the psql CLI to prompt for the password.

While you shouldn’t encounter the following error during a Windows OS installation, you may see it on other platforms:
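Because the option letters are case sensitive, it can help to build the command in one place when you script connections. Here is a minimal Python sketch; psql_args is a hypothetical helper name, and the sketch only assembles the argument list without running psql.

```python
import shlex
from typing import List


def psql_args(database: str, user: str, prompt_for_password: bool = True) -> List[str]:
    """Build the psql argument list used in this post (hypothetical helper)."""
    # -d sets the database and -U sets the user for the connection.
    args = ["psql", "-d", database, "-U", user]
    if prompt_for_password:
        # -W instructs psql to prompt for the password.
        args.append("-W")
    return args


# Join the arguments into a single command line for display.
command = shlex.join(psql_args("videodb", "student"))
print(command)
```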

psql: FATAL: Peer authentication failed for user "student"

You can fix this in PostgreSQL 14 by changing the user access parameters in the pg_hba.conf configuration file. The file is found in the C:\Program Files\PostgreSQL\14\data directory. These are the correct out of the box settings you should see.

    # TYPE  DATABASE        USER            ADDRESS                 METHOD

    # "local" is for Unix domain socket connections only
    local   all             all                                     scram-sha-256
    # IPv4 local connections:
    host    all             all             127.0.0.1/32            scram-sha-256
    # IPv6 local connections:
    host    all             all             ::1/128                 scram-sha-256
    # Allow replication connections from localhost, by a user with the
    # replication privilege.
    local   replication     all                                     scram-sha-256
    host    replication     all             127.0.0.1/32            scram-sha-256
    host    replication     all             ::1/128                 scram-sha-256

If you find something that’s broken, fix it. The values above should ensure you make the changes successfully. You will need to restart the postgres service if you make changes.
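If you want to check the file without reading it line by line, a rough Python sketch like the following can list each record’s authentication method. It is a simplified illustration, not a full parser: parse_pg_hba and HbaRule are hypothetical names, the sample text is made up, and the sketch assumes simple whitespace-separated records with a CIDR address (no quoted values or separate netmask column).

```python
from typing import List, NamedTuple, Optional


class HbaRule(NamedTuple):
    conn_type: str
    database: str
    user: str
    address: Optional[str]
    method: str


def parse_pg_hba(text: str) -> List[HbaRule]:
    """Parse simple pg_hba.conf records, skipping comments and blank lines."""
    rules = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if fields[0] == "local":
            # Records of type local have no ADDRESS column.
            conn_type, database, user, method = fields[:4]
            address = None
        else:
            conn_type, database, user, address, method = fields[:5]
        rules.append(HbaRule(conn_type, database, user, address, method))
    return rules


# Made-up sample with one record that still uses peer authentication.
SAMPLE = """
# TYPE  DATABASE  USER  ADDRESS       METHOD
local   all       all                 peer
host    all       all   127.0.0.1/32  scram-sha-256
host    all       all   ::1/128       scram-sha-256
"""

rules = parse_pg_hba(SAMPLE)
peer_rules = [rule for rule in rules if rule.method == "peer"]
print(peer_rules)
```

Any record reported in peer_rules is a candidate for the change described above.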

If you plan on using the copy command to read external CSV (Comma Separated Value) files, you need to grant another privilege to the student user as the postgres superuser. The following statement grants the pg_read_server_files role to the student user.

GRANT pg_read_server_files TO student;

- Create a Schema

The PostgreSQL database supports multiple schemas inside databases. The default schema for any database is the public schema. You must create separate schemas and refer to them explicitly when accessing them unless you alter the default search path. This section demonstrates how to:

- Create an app schema.

- Create a revision_history table in the app schema.

- Modify the standard search path to include other schemas

The process of creating a schema requires you grant the CREATE ON DATABASE privilege to the user as the postgres user. The initial grant of the create privilege requires the postgres superuser’s privileges. That means you must connect as the postgres user, like:

psql -U postgres -W

Then, as the postgres superuser, you use the following syntax to grant the create privilege to the student user on the videodb database:

GRANT CREATE ON DATABASE videodb TO student;

After granting the create privilege, you should exit the postgres superuser’s account, like:

QUIT;

Now, you should connect as the student user to the videodb database (syntax introduced earlier but provided again below).

psql -d videodb -U student -W

As the student user, create the app schema with the following syntax:

CREATE SCHEMA app;

Then, you can query the result as follows:

SELECT * FROM pg_catalog.pg_namespace ORDER BY nspname;

You should see the following:

oid | nspname | nspowner | nspacl

-------+--------------------+----------+-------------------------------------

16399 | app | 16398 |

13388 | information_schema | 10 | {postgres=UC/postgres,=U/postgres}

11 | pg_catalog | 10 | {postgres=UC/postgres,=U/postgres}

99 | pg_toast | 10 |

2200 | public | 10 | {postgres=UC/postgres,=UC/postgres}

(5 rows)

If you create a table without a schema name, it is automatically placed in the public schema. That also means any attempt to describe a table in another schema with the \d command, without prepending the schema name, returns an error; however, this is only true when you are using the default search path.

Let’s create a revision_history table in the app schema with a script file. A script file is a set of related SQL commands, like the following example that suppresses notices, drops any pre-existing revision_history table, and creates the revision_history table.

    -- Set client messages to warning or error, which means any
    -- notice associated with the if exists clause is suppressed.
    SET client_min_messages TO warning;

    -- Conditionally drop an existing revision_history table in
    -- the app schema.
    DROP TABLE IF EXISTS app.revision_history;

    -- Create a revision_history table.
    CREATE TABLE app.revision_history
    ( revision_history_id serial
    , session_id          VARCHAR
    , table_name          VARCHAR
    , revision_id         INTEGER );

You can run a script file by using a relative or absolute file name. An absolute file name includes a full path from a Windows logical drive letter, like C:\, or a Linux mount point. A relative file name is simply the file name.

If you want to use a relative file name, you must first navigate to the directory where you have saved the file. This directory becomes your local directory and allows you to call any file in it from the psql command prompt by using only its file name.
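The difference between the two kinds of file names can be checked with Python’s pathlib module. This is a small sketch; is_absolute_script is a hypothetical helper, and the sample paths are made up for illustration.

```python
from pathlib import PurePosixPath, PureWindowsPath


def is_absolute_script(name: str, windows: bool = True) -> bool:
    """Report whether a script file name is absolute (hypothetical helper)."""
    # A Windows path is absolute when it has a drive letter and a root, like C:\.
    # A Linux path is absolute when it starts from the root mount point.
    path = PureWindowsPath(name) if windows else PurePosixPath(name)
    return path.is_absolute()


print(is_absolute_script(r"C:\Users\username\revision_history.sql"))
print(is_absolute_script("revision_history.sql"))
print(is_absolute_script("/home/username/revision_history.sql", windows=False))
```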

You should connect as the student user to the videodb database. The \i command lets you run a file, assuming you put the preceding script file into a revision_history.sql file in your local directory.

\i revision_history.sql

If you try to describe the revision_history table with the \d command line option, like

\d revision_history

It will show the following:

Did not find any relation named "revision_history".

That’s because there is no revision_history table in the public schema and the default search path only includes the public schema.

You can show the search path with the following:

show search_path;

It should return the following, which is a schema that shares the user’s name and public.

       search_path
    -----------------
     "$user", public
    (1 row)

You reset the search path as follows:

SET search_path TO app, "$user", public;

After you set the search_path, an attempt to describe the table will work because it searches for the table in the app and public schema. That means the following command:

\d revision_history

Shows:

Table "app.revision_history"

Column | Type | Collation | Nullable | Default

---------------------+-------------------+-----------+----------+---------------------------------------------------------------

revision_history_id | integer | | not null | nextval('revision_history_revision_history_id_seq'::regclass)

session_id | character varying | | |

table_name | character varying | | |

revision_id | integer | | |
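The effect of the search path can be sketched in Python as a first-match lookup across schemas. This is only a model of the behavior described in this step, not PostgreSQL’s implementation: resolve is a hypothetical function, and the catalog dictionary is made-up data that matches this example.

```python
from typing import Dict, List, Optional, Set


def resolve(table: str, search_path: List[str],
            catalog: Dict[str, Set[str]], user: str) -> Optional[str]:
    """Return the first schema-qualified match for an unqualified table name."""
    for schema in search_path:
        # "$user" stands for a schema that shares the connected user's name.
        schema = user if schema == "$user" else schema
        if table in catalog.get(schema, set()):
            return f"{schema}.{table}"
    return None


# Made-up catalog: the table exists only in the app schema.
catalog = {"app": {"revision_history"}, "public": set()}

print(resolve("revision_history", ["$user", "public"], catalog, "student"))
print(resolve("revision_history", ["app", "$user", "public"], catalog, "student"))
```

With the default path the lookup finds nothing, which corresponds to the "Did not find any relation" message; after adding app to the front of the path, the lookup finds the table in the app schema.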

- Connect as student to videodb:

As shown in Step #4 above, you can now connect to and use the videodb database as the student user with the following syntax:

psql -d videodb -U student -W

If you did everything correctly, you should see the following after correctly providing the student password for the student user:

Password:

psql (14.1)

WARNING: Console code page (437) differs from Windows code page (1252)

8-bit characters might not work correctly. See psql reference

page "Notes for Windows users" for details.

Type "help" for help.

videodb=>

After connecting to the videodb database, you can query the current database, like

SELECT current_database();

It should return the following:

     current_database
    ------------------
     videodb
    (1 row)

This has shown you how to create a video_db tablespace, a videodb database, a dba role, a student user, an app schema, and connect to your new videodb database as the student user. As always, I hope it lets you get a lot done with little effort while avoiding pages and pages of documentation.

Oracle Container User

After you create and provision the Oracle Database 21c Express Edition (XE), you can create a c##student container user with the following two-step process.

- Create a c##student Oracle user account with the following command:

CREATE USER c##student IDENTIFIED BY student DEFAULT TABLESPACE users QUOTA 200M ON users TEMPORARY TABLESPACE temp;

- Grant necessary privileges to the newly created c##student user:

GRANT CREATE CLUSTER, CREATE INDEXTYPE, CREATE OPERATOR , CREATE PROCEDURE, CREATE SEQUENCE, CREATE SESSION , CREATE TABLE, CREATE TRIGGER, CREATE TYPE , CREATE VIEW TO c##student;

As always, I hope this helps those looking for how to do something that’s less than clear because everybody uses tools.