Archive for the ‘Mac’ Category

SQL Developer on Ubuntu

The following steps show how to install and configure SQL Developer on Ubuntu 22.04 to work with Oracle Database 23c Free in a Docker container. The first steps install the Java Runtime Environment and Java Development Kit; the remaining steps download, install, and configure SQL Developer. These are the required steps:

- Install the Java Runtime Environment:

sudo apt install default-jre

The log file for this is:


Reading package lists... Done Building dependency tree... Done Reading state information... Done The following additional packages will be installed: ca-certificates-java default-jre-headless fonts-dejavu-extra java-common libatk-wrapper-java libatk-wrapper-java-jni openjdk-11-jre openjdk-11-jre-headless Suggested packages: fonts-ipafont-gothic fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei The following NEW packages will be installed: ca-certificates-java default-jre default-jre-headless fonts-dejavu-extra java-common libatk-wrapper-java libatk-wrapper-java-jni openjdk-11-jre openjdk-11-jre-headless 0 upgraded, 9 newly installed, 0 to remove and 4 not upgraded. Need to get 44.9 MB of archives. After this operation, 185 MB of additional disk space will be used. Do you want to continue? [Y/n] Y Get:1 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 java-common all 0.72build2 [6,782 B] Get:2 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 openjdk-11-jre-headless amd64 11.0.21+9-0ubuntu1~22.04 [42.5 MB] Get:3 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 default-jre-headless amd64 2:1.11-72build2 [3,042 B] Get:4 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 ca-certificates-java all 20190909ubuntu1.2 [12.1 kB] Get:5 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 openjdk-11-jre amd64 11.0.21+9-0ubuntu1~22.04 [214 kB] Get:6 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 default-jre amd64 2:1.11-72build2 [896 B] Get:7 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 fonts-dejavu-extra all 2.37-2build1 [2,041 kB] Get:8 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libatk-wrapper-java all 0.38.0-5build1 [53.1 kB] Get:9 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libatk-wrapper-java-jni amd64 0.38.0-5build1 [49.0 kB] Fetched 44.9 MB in 14s (3,270 kB/s) Selecting previously unselected package java-common. (Reading database ... 203118 files and directories currently installed.) 
Preparing to unpack .../0-java-common_0.72build2_all.deb ... Unpacking java-common (0.72build2) ... Selecting previously unselected package openjdk-11-jre-headless:amd64. Preparing to unpack .../1-openjdk-11-jre-headless_11.0.21+9-0ubuntu1~22.04_amd64 .deb ... Unpacking openjdk-11-jre-headless:amd64 (11.0.21+9-0ubuntu1~22.04) ... Selecting previously unselected package default-jre-headless. Preparing to unpack .../2-default-jre-headless_2%3a1.11-72build2_amd64.deb ... Unpacking default-jre-headless (2:1.11-72build2) ... Selecting previously unselected package ca-certificates-java. Preparing to unpack .../3-ca-certificates-java_20190909ubuntu1.2_all.deb ... Unpacking ca-certificates-java (20190909ubuntu1.2) ... Selecting previously unselected package openjdk-11-jre:amd64. Preparing to unpack .../4-openjdk-11-jre_11.0.21+9-0ubuntu1~22.04_amd64.deb ... Unpacking openjdk-11-jre:amd64 (11.0.21+9-0ubuntu1~22.04) ... Selecting previously unselected package default-jre. Preparing to unpack .../5-default-jre_2%3a1.11-72build2_amd64.deb ... Unpacking default-jre (2:1.11-72build2) ... Selecting previously unselected package fonts-dejavu-extra. Preparing to unpack .../6-fonts-dejavu-extra_2.37-2build1_all.deb ... Unpacking fonts-dejavu-extra (2.37-2build1) ... Selecting previously unselected package libatk-wrapper-java. Preparing to unpack .../7-libatk-wrapper-java_0.38.0-5build1_all.deb ... Unpacking libatk-wrapper-java (0.38.0-5build1) ... Selecting previously unselected package libatk-wrapper-java-jni:amd64. Preparing to unpack .../8-libatk-wrapper-java-jni_0.38.0-5build1_amd64.deb ... Unpacking libatk-wrapper-java-jni:amd64 (0.38.0-5build1) ... Setting up java-common (0.72build2) ... Setting up fonts-dejavu-extra (2.37-2build1) ... Setting up libatk-wrapper-java (0.38.0-5build1) ... Setting up libatk-wrapper-java-jni:amd64 (0.38.0-5build1) ... Setting up default-jre-headless (2:1.11-72build2) ... Setting up openjdk-11-jre-headless:amd64 (11.0.21+9-0ubuntu1~22.04) ... 
update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/java to provid e /usr/bin/java (java) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jjs to provide /usr/bin/jjs (jjs) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/keytool to pro vide /usr/bin/keytool (keytool) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/rmid to provid e /usr/bin/rmid (rmid) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/rmiregistry to provide /usr/bin/rmiregistry (rmiregistry) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/pack200 to pro vide /usr/bin/pack200 (pack200) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/unpack200 to p rovide /usr/bin/unpack200 (unpack200) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/lib/jexec to provi de /usr/bin/jexec (jexec) in auto mode Setting up openjdk-11-jre:amd64 (11.0.21+9-0ubuntu1~22.04) ... Setting up default-jre (2:1.11-72build2) ... Setting up ca-certificates-java (20190909ubuntu1.2) ... 
head: cannot open '/etc/ssl/certs/java/cacerts' for reading: No such file or dir ectory Adding debian:QuoVadis_Root_CA_1_G3.pem Adding debian:GlobalSign_Root_E46.pem Adding debian:T-TeleSec_GlobalRoot_Class_3.pem Adding debian:Certum_Trusted_Network_CA.pem Adding debian:Buypass_Class_2_Root_CA.pem Adding debian:NetLock_Arany_=Class_Gold=_Főtanúsítvány.pem Adding debian:e-Szigno_Root_CA_2017.pem Adding debian:emSign_Root_CA_-_G1.pem Adding debian:D-TRUST_BR_Root_CA_1_2020.pem Adding debian:Hongkong_Post_Root_CA_3.pem Adding debian:GlobalSign_ECC_Root_CA_-_R4.pem Adding debian:NAVER_Global_Root_Certification_Authority.pem Adding debian:UCA_Extended_Validation_Root.pem Adding debian:AffirmTrust_Premium.pem Adding debian:Entrust_Root_Certification_Authority.pem Adding debian:DigiCert_Trusted_Root_G4.pem Adding debian:CFCA_EV_ROOT.pem Adding debian:ePKI_Root_Certification_Authority.pem Adding debian:Hellenic_Academic_and_Research_Institutions_ECC_RootCA_2015.pem Adding debian:HARICA_TLS_RSA_Root_CA_2021.pem Adding debian:GlobalSign_Root_CA_-_R6.pem Adding debian:TWCA_Global_Root_CA.pem Adding debian:Trustwave_Global_ECC_P384_Certification_Authority.pem Adding debian:ISRG_Root_X1.pem Adding debian:Starfield_Services_Root_Certificate_Authority_-_G2.pem Adding debian:QuoVadis_Root_CA_3.pem Adding debian:Security_Communication_Root_CA.pem Adding debian:DigiCert_TLS_RSA4096_Root_G5.pem Adding debian:Entrust_Root_Certification_Authority_-_EC1.pem Adding debian:Security_Communication_RootCA3.pem Adding debian:TeliaSonera_Root_CA_v1.pem Adding debian:vTrus_ECC_Root_CA.pem Adding debian:AC_RAIZ_FNMT-RCM_SERVIDORES_SEGUROS.pem Adding debian:Certum_EC-384_CA.pem Adding debian:Microsec_e-Szigno_Root_CA_2009.pem Adding debian:ssl-cert-snakeoil.pem Adding debian:USERTrust_ECC_Certification_Authority.pem Adding debian:CA_Disig_Root_R2.pem Adding debian:Certum_Trusted_Network_CA_2.pem Adding debian:ACCVRAIZ1.pem Adding debian:TunTrust_Root_CA.pem Adding 
debian:Buypass_Class_3_Root_CA.pem Adding debian:D-TRUST_Root_Class_3_CA_2_2009.pem Adding debian:Security_Communication_ECC_RootCA1.pem Adding debian:GTS_Root_R2.pem Adding debian:Certigna.pem Adding debian:SSL.com_EV_Root_Certification_Authority_RSA_R2.pem Adding debian:Entrust.net_Premium_2048_Secure_Server_CA.pem Adding debian:E-Tugra_Global_Root_CA_ECC_v3.pem Adding debian:Hongkong_Post_Root_CA_1.pem Adding debian:SZAFIR_ROOT_CA2.pem Adding debian:TUBITAK_Kamu_SM_SSL_Kok_Sertifikasi_-_Surum_1.pem Adding debian:Atos_TrustedRoot_2011.pem Adding debian:DigiCert_High_Assurance_EV_Root_CA.pem Adding debian:emSign_Root_CA_-_C1.pem Adding debian:Go_Daddy_Root_Certificate_Authority_-_G2.pem Adding debian:GDCA_TrustAUTH_R5_ROOT.pem Adding debian:GlobalSign_Root_CA_-_R3.pem Adding debian:DigiCert_Assured_ID_Root_G3.pem Adding debian:Autoridad_de_Certificacion_Firmaprofesional_CIF_A62634068_2.pem Adding debian:Certainly_Root_R1.pem Adding debian:vTrus_Root_CA.pem Adding debian:Certainly_Root_E1.pem Adding debian:Autoridad_de_Certificacion_Firmaprofesional_CIF_A62634068.pem Adding debian:TWCA_Root_Certification_Authority.pem Adding debian:Starfield_Root_Certificate_Authority_-_G2.pem Adding debian:Amazon_Root_CA_3.pem Adding debian:GTS_Root_R1.pem Adding debian:SwissSign_Gold_CA_-_G2.pem Adding debian:Certum_Trusted_Root_CA.pem Adding debian:Hellenic_Academic_and_Research_Institutions_RootCA_2015.pem Adding debian:AffirmTrust_Networking.pem Adding debian:emSign_ECC_Root_CA_-_G3.pem Adding debian:HARICA_TLS_ECC_Root_CA_2021.pem Adding debian:certSIGN_ROOT_CA.pem Adding debian:Actalis_Authentication_Root_CA.pem Adding debian:SSL.com_Root_Certification_Authority_RSA.pem Adding debian:Certigna_Root_CA.pem Adding debian:XRamp_Global_CA_Root.pem Adding debian:Baltimore_CyberTrust_Root.pem Adding debian:Trustwave_Global_ECC_P256_Certification_Authority.pem Adding debian:QuoVadis_Root_CA_2_G3.pem Adding debian:GTS_Root_R3.pem Adding debian:COMODO_RSA_Certification_Authority.pem 
Adding debian:ISRG_Root_X2.pem Adding debian:SwissSign_Silver_CA_-_G2.pem Adding debian:IdenTrust_Public_Sector_Root_CA_1.pem Adding debian:Microsoft_ECC_Root_Certificate_Authority_2017.pem Adding debian:UCA_Global_G2_Root.pem Adding debian:DigiCert_Assured_ID_Root_CA.pem Adding debian:Entrust_Root_Certification_Authority_-_G2.pem Adding debian:QuoVadis_Root_CA_2.pem Adding debian:Trustwave_Global_Certification_Authority.pem Adding debian:OISTE_WISeKey_Global_Root_GB_CA.pem Adding debian:HiPKI_Root_CA_-_G1.pem Adding debian:E-Tugra_Certification_Authority.pem Adding debian:GTS_Root_R4.pem Adding debian:Amazon_Root_CA_2.pem Adding debian:Amazon_Root_CA_1.pem Adding debian:SecureTrust_CA.pem Adding debian:GlobalSign_Root_R46.pem Adding debian:IdenTrust_Commercial_Root_CA_1.pem Adding debian:DigiCert_Global_Root_G2.pem Adding debian:Comodo_AAA_Services_root.pem Adding debian:SSL.com_Root_Certification_Authority_ECC.pem Adding debian:T-TeleSec_GlobalRoot_Class_2.pem Adding debian:Starfield_Class_2_CA.pem Adding debian:DigiCert_Global_Root_CA.pem Adding debian:SecureSign_RootCA11.pem Adding debian:certSIGN_Root_CA_G2.pem Adding debian:DigiCert_TLS_ECC_P384_Root_G5.pem Adding debian:Entrust_Root_Certification_Authority_-_G4.pem Adding debian:OISTE_WISeKey_Global_Root_GC_CA.pem Adding debian:DigiCert_Global_Root_G3.pem Adding debian:Secure_Global_CA.pem Adding debian:Microsoft_RSA_Root_Certificate_Authority_2017.pem Adding debian:DigiCert_Assured_ID_Root_G2.pem Adding debian:Telia_Root_CA_v2.pem Adding debian:emSign_ECC_Root_CA_-_C3.pem Adding debian:COMODO_Certification_Authority.pem Adding debian:AffirmTrust_Premium_ECC.pem Adding debian:GLOBALTRUST_2020.pem Adding debian:E-Tugra_Global_Root_CA_RSA_v3.pem Adding debian:Amazon_Root_CA_4.pem Adding debian:COMODO_ECC_Certification_Authority.pem Adding debian:AffirmTrust_Commercial.pem Adding debian:SSL.com_EV_Root_Certification_Authority_ECC.pem Adding debian:AC_RAIZ_FNMT-RCM.pem Adding debian:Go_Daddy_Class_2_CA.pem 
Adding debian:QuoVadis_Root_CA_3_G3.pem Adding debian:D-TRUST_EV_Root_CA_1_2020.pem Adding debian:GlobalSign_Root_CA.pem Adding debian:GlobalSign_ECC_Root_CA_-_R5.pem Adding debian:USERTrust_RSA_Certification_Authority.pem Adding debian:D-TRUST_Root_Class_3_CA_2_EV_2009.pem Adding debian:Izenpe.com.pem Adding debian:ANF_Secure_Server_Root_CA.pem Adding debian:Security_Communication_RootCA2.pem done. Processing triggers for mailcap (3.70+nmu1ubuntu1) ... Processing triggers for fontconfig (2.13.1-4.2ubuntu5) ... Processing triggers for desktop-file-utils (0.26-1ubuntu3) ... Processing triggers for hicolor-icon-theme (0.17-2) ... Processing triggers for gnome-menus (3.36.0-1ubuntu3) ... Processing triggers for man-db (2.10.2-1) ... Processing triggers for ca-certificates (20230311ubuntu0.22.04.1) ... Updating certificates in /etc/ssl/certs... 0 added, 0 removed; done. Running hooks in /etc/ca-certificates/update.d... done. done.

- Install the Java Development Kit:

sudo apt install -y default-jdk

The log file for this is:


Reading package lists... Done Building dependency tree... Done Reading state information... Done The following additional packages will be installed: default-jdk-headless libice-dev libpthread-stubs0-dev libsm-dev libx11-dev libxau-dev libxcb1-dev libxdmcp-dev libxt-dev openjdk-11-jdk openjdk-11-jdk-headless x11proto-dev xorg-sgml-doctools xtrans-dev Suggested packages: libice-doc libsm-doc libx11-doc libxcb-doc libxt-doc openjdk-11-demo openjdk-11-source visualvm The following NEW packages will be installed: default-jdk default-jdk-headless libice-dev libpthread-stubs0-dev libsm-dev libx11-dev libxau-dev libxcb1-dev libxdmcp-dev libxt-dev openjdk-11-jdk openjdk-11-jdk-headless x11proto-dev xorg-sgml-doctools xtrans-dev 0 upgraded, 15 newly installed, 0 to remove and 4 not upgraded. Need to get 76.9 MB of archives. After this operation, 90.6 MB of additional disk space will be used. Get:1 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 openjdk-11-jdk-headless amd64 11.0.21+9-0ubuntu1~22.04 [73.5 MB] Get:2 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 default-jdk-headless amd64 2:1.11-72build2 [942 B] Get:3 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 openjdk-11-jdk amd64 11.0.21+9-0ubuntu1~22.04 [1,327 kB] Get:4 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 default-jdk amd64 2:1.11-72build2 [908 B] Get:5 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 xorg-sgml-doctools all 1:1.11-1.1 [10.9 kB] Get:6 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 x11proto-dev all 2021.5-1 [604 kB] Get:7 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libice-dev amd64 2:1.0.10-1build2 [51.4 kB] Get:8 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libpthread-stubs0-dev amd64 0.4-1build2 [5,516 B] Get:9 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libsm-dev amd64 2:1.2.3-1build2 [18.1 kB] Get:10 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libxau-dev amd64 1:1.0.9-1build5 [9,724 B] Get:11 
http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libxdmcp-dev amd64 1:1.1.3-0ubuntu5 [26.5 kB] Get:12 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 xtrans-dev all 1.4.0-1 [68.9 kB] Get:13 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libxcb1-dev amd64 1.14-3ubuntu3 [86.5 kB] Get:14 http://us.archive.ubuntu.com/ubuntu jammy-updates/main amd64 libx11-dev amd64 2:1.7.5-1ubuntu0.3 [744 kB] Get:15 http://us.archive.ubuntu.com/ubuntu jammy/main amd64 libxt-dev amd64 1:1.2.1-1 [396 kB] Fetched 76.9 MB in 6s (12.7 MB/s) Selecting previously unselected package openjdk-11-jdk-headless:amd64. (Reading database ... 203527 files and directories currently installed.) Preparing to unpack .../00-openjdk-11-jdk-headless_11.0.21+9-0ubuntu1~22.04_amd6 4.deb ... Unpacking openjdk-11-jdk-headless:amd64 (11.0.21+9-0ubuntu1~22.04) ... Selecting previously unselected package default-jdk-headless. Preparing to unpack .../01-default-jdk-headless_2%3a1.11-72build2_amd64.deb ... Unpacking default-jdk-headless (2:1.11-72build2) ... Selecting previously unselected package openjdk-11-jdk:amd64. Preparing to unpack .../02-openjdk-11-jdk_11.0.21+9-0ubuntu1~22.04_amd64.deb ... Unpacking openjdk-11-jdk:amd64 (11.0.21+9-0ubuntu1~22.04) ... Selecting previously unselected package default-jdk. Preparing to unpack .../03-default-jdk_2%3a1.11-72build2_amd64.deb ... Unpacking default-jdk (2:1.11-72build2) ... Selecting previously unselected package xorg-sgml-doctools. Preparing to unpack .../04-xorg-sgml-doctools_1%3a1.11-1.1_all.deb ... Unpacking xorg-sgml-doctools (1:1.11-1.1) ... Selecting previously unselected package x11proto-dev. Preparing to unpack .../05-x11proto-dev_2021.5-1_all.deb ... Unpacking x11proto-dev (2021.5-1) ... Selecting previously unselected package libice-dev:amd64. Preparing to unpack .../06-libice-dev_2%3a1.0.10-1build2_amd64.deb ... Unpacking libice-dev:amd64 (2:1.0.10-1build2) ... Selecting previously unselected package libpthread-stubs0-dev:amd64. 
Preparing to unpack .../07-libpthread-stubs0-dev_0.4-1build2_amd64.deb ... Unpacking libpthread-stubs0-dev:amd64 (0.4-1build2) ... Selecting previously unselected package libsm-dev:amd64. Preparing to unpack .../08-libsm-dev_2%3a1.2.3-1build2_amd64.deb ... Unpacking libsm-dev:amd64 (2:1.2.3-1build2) ... Selecting previously unselected package libxau-dev:amd64. Preparing to unpack .../09-libxau-dev_1%3a1.0.9-1build5_amd64.deb ... Unpacking libxau-dev:amd64 (1:1.0.9-1build5) ... Selecting previously unselected package libxdmcp-dev:amd64. Preparing to unpack .../10-libxdmcp-dev_1%3a1.1.3-0ubuntu5_amd64.deb ... Unpacking libxdmcp-dev:amd64 (1:1.1.3-0ubuntu5) ... Selecting previously unselected package xtrans-dev. Preparing to unpack .../11-xtrans-dev_1.4.0-1_all.deb ... Unpacking xtrans-dev (1.4.0-1) ... Selecting previously unselected package libxcb1-dev:amd64. Preparing to unpack .../12-libxcb1-dev_1.14-3ubuntu3_amd64.deb ... Unpacking libxcb1-dev:amd64 (1.14-3ubuntu3) ... Selecting previously unselected package libx11-dev:amd64. Preparing to unpack .../13-libx11-dev_2%3a1.7.5-1ubuntu0.3_amd64.deb ... Unpacking libx11-dev:amd64 (2:1.7.5-1ubuntu0.3) ... Selecting previously unselected package libxt-dev:amd64. Preparing to unpack .../14-libxt-dev_1%3a1.2.1-1_amd64.deb ... Unpacking libxt-dev:amd64 (1:1.2.1-1) ... Setting up openjdk-11-jdk-headless:amd64 (11.0.21+9-0ubuntu1~22.04) ... 
update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jar to provide /usr/bin/jar (jar) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jarsigner to p rovide /usr/bin/jarsigner (jarsigner) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/javac to provi de /usr/bin/javac (javac) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/javadoc to pro vide /usr/bin/javadoc (javadoc) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/javap to provi de /usr/bin/javap (javap) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jcmd to provid e /usr/bin/jcmd (jcmd) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jdb to provide /usr/bin/jdb (jdb) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jdeprscan to p rovide /usr/bin/jdeprscan (jdeprscan) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jdeps to provi de /usr/bin/jdeps (jdeps) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jfr to provide /usr/bin/jfr (jfr) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jimage to prov ide /usr/bin/jimage (jimage) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jinfo to provi de /usr/bin/jinfo (jinfo) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jlink to provi de /usr/bin/jlink (jlink) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jmap to provid e /usr/bin/jmap (jmap) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jmod to provid e /usr/bin/jmod (jmod) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jps to provide /usr/bin/jps (jps) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jrunscript to 
provide /usr/bin/jrunscript (jrunscript) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jshell to prov ide /usr/bin/jshell (jshell) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jstack to prov ide /usr/bin/jstack (jstack) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jstat to provi de /usr/bin/jstat (jstat) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jstatd to prov ide /usr/bin/jstatd (jstatd) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/rmic to provid e /usr/bin/rmic (rmic) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/serialver to p rovide /usr/bin/serialver (serialver) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jaotc to provi de /usr/bin/jaotc (jaotc) in auto mode update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jhsdb to provi de /usr/bin/jhsdb (jhsdb) in auto mode Setting up libpthread-stubs0-dev:amd64 (0.4-1build2) ... Setting up xtrans-dev (1.4.0-1) ... Setting up default-jdk-headless (2:1.11-72build2) ... Setting up openjdk-11-jdk:amd64 (11.0.21+9-0ubuntu1~22.04) ... update-alternatives: using /usr/lib/jvm/java-11-openjdk-amd64/bin/jconsole to pr ovide /usr/bin/jconsole (jconsole) in auto mode Setting up xorg-sgml-doctools (1:1.11-1.1) ... Setting up default-jdk (2:1.11-72build2) ... Processing triggers for sgml-base (1.30) ... Setting up x11proto-dev (2021.5-1) ... Setting up libxau-dev:amd64 (1:1.0.9-1build5) ... Setting up libice-dev:amd64 (2:1.0.10-1build2) ... Setting up libsm-dev:amd64 (2:1.2.3-1build2) ... Processing triggers for man-db (2.10.2-1) ... Setting up libxdmcp-dev:amd64 (1:1.1.3-0ubuntu5) ... Setting up libxcb1-dev:amd64 (1.14-3ubuntu3) ... Setting up libx11-dev:amd64 (2:1.7.5-1ubuntu0.3) ... Setting up libxt-dev:amd64 (1:1.2.1-1) ...
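Before moving on to SQL Developer, it can help to confirm that both installs put their tools on the PATH. The following is a minimal sketch, not part of the original steps: report_tool is a hypothetical helper name, and the check relies only on the shell's built-in command -v. The java launcher comes from the default-jre package and javac from the default-jdk package, as the update-alternatives lines in the logs above show.

```shell
#!/bin/sh
# report_tool NAME (hypothetical helper): print whether NAME is on the PATH
# and return 0 when it is found, 1 when it is not.
report_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found at $(command -v "$1")"
  else
    echo "$1: not found"
    return 1
  fi
}

# java is installed by default-jre; javac is installed by default-jdk.
for tool in java javac; do
  report_tool "$tool" || true
done
```

If both tools are found, running java -version and javac -version should report the OpenJDK 11 release that the logs above installed.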

- Download SQL Developer from here, and then install it in the /opt directory on your local Ubuntu instance:

Use the following command to unzip the SQL Developer files to the /opt directory:

sudo unzip ~/Downloads/sqldeveloper-23.1.0.097.1607-no-jre.zip -d /opt

- Create the following /usr/local/bin/sqldeveloper symbolic link:

sudo ln -s /opt/sqldeveloper/sqldeveloper.sh /usr/local/bin/sqldeveloper

- Edit the /opt/sqldeveloper/sqldeveloper.sh file by replacing the following line:

cd "`dirname $0`"/sqldeveloper/bin && bash sqldeveloper $*

with this version:

/opt/sqldeveloper/sqldeveloper/bin/sqldeveloper $*
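The edit is presumably needed because, when the script is reached through the /usr/local/bin/sqldeveloper symbolic link, $0 holds the link's path, so `dirname $0` points at /usr/local/bin rather than /opt/sqldeveloper. If you prefer to script the change instead of opening an editor, one possible approach is a sed substitution. The sketch below is an illustration under assumptions: it works on a scratch copy in a temporary directory rather than the real file, and the #!/bin/bash first line of the scratch file is assumed rather than taken from the SQL Developer distribution.

```shell
#!/bin/sh
# Work on a scratch copy so the real /opt/sqldeveloper/sqldeveloper.sh is untouched.
workdir=$(mktemp -d)
launcher="$workdir/sqldeveloper.sh"

# Recreate the launcher line shown above. The quoted heredoc delimiter keeps
# the backticks, $0 and $* literal. The shebang line is an assumption.
cat > "$launcher" <<'EOF'
#!/bin/bash
cd "`dirname $0`"/sqldeveloper/bin && bash sqldeveloper $*
EOF

# Replace the whole "cd ... sqldeveloper/bin ..." line with the absolute-path call.
sed 's|^cd .*sqldeveloper/bin.*$|/opt/sqldeveloper/sqldeveloper/bin/sqldeveloper $*|' \
  "$launcher" > "$launcher.new" && mv "$launcher.new" "$launcher"

# Show the edited launcher.
cat "$launcher"
```

To apply the same substitution to the installed file, you would point launcher at /opt/sqldeveloper/sqldeveloper.sh and run the sed and mv commands with sudo, ideally after making a backup copy.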

- Now, you can launch SQL Developer from any location on your local Ubuntu operating system, like:

sqldeveloper

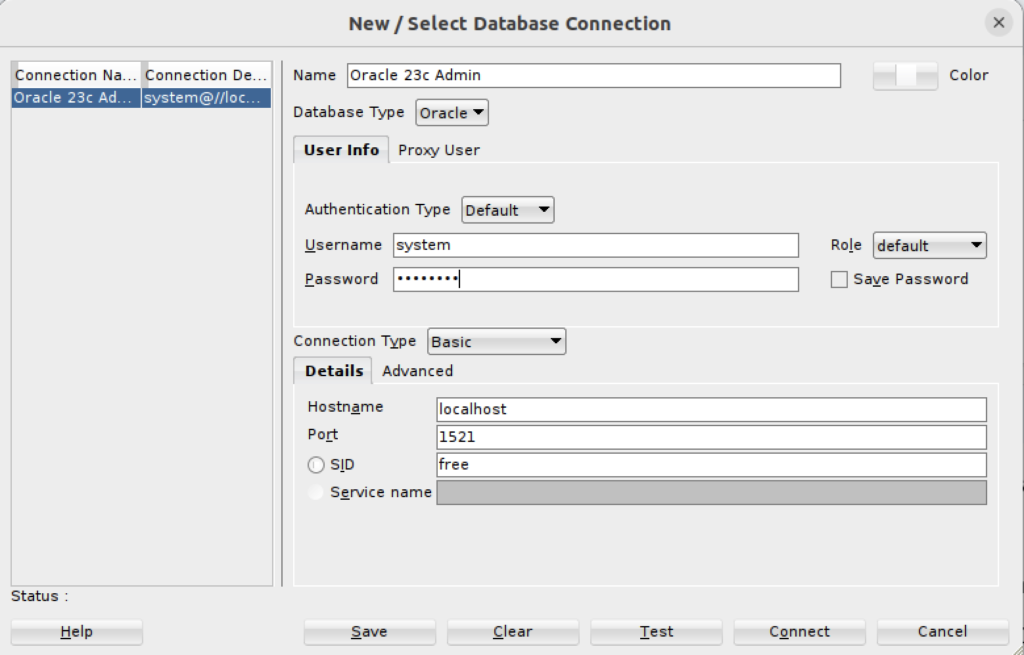

- You can now connect as the system user through SQL Developer to the Oracle Database 23c Free Docker instance with the following connection information:

- You can also create a Desktop shortcut by creating the sqldeveloper.desktop file in the /usr/share/applications directory. The SQL Developer icon is provided in the sqldeveloper base directory.

You should create the following sqldeveloper.desktop file to use a Desktop shortcut:

[Desktop Entry]
Name=Oracle SQL Developer
Comment=SQL Developer from Oracle
GenericName=SQL Tool
Exec=/usr/local/bin/sqldeveloper
Icon=/opt/sqldeveloper/icon.png
Type=Application
StartupNotify=true
Categories=Utility;Oracle;Development;SQL;
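One way to create the file without an editor is a shell heredoc. The following is a rough sketch under assumptions: it stages the entry in a temporary directory (the staging and entry variable names are illustrative), checks that a few keys are present, and leaves the privileged copy into /usr/share/applications as a commented-out final step.

```shell
#!/bin/sh
# Stage the entry in a scratch directory first; copy it into place afterwards.
staging=$(mktemp -d)
entry="$staging/sqldeveloper.desktop"

# The quoted heredoc delimiter writes the text exactly as shown.
cat > "$entry" <<'EOF'
[Desktop Entry]
Name=Oracle SQL Developer
Comment=SQL Developer from Oracle
GenericName=SQL Tool
Exec=/usr/local/bin/sqldeveloper
Icon=/opt/sqldeveloper/icon.png
Type=Application
StartupNotify=true
Categories=Utility;Oracle;Development;SQL;
EOF

# Confirm the keys a launcher relies on are present before installing the file.
for key in Name Exec Icon Type; do
  grep -q "^$key=" "$entry" || echo "missing key: $key"
done

echo "Staged $entry"
# To install it system-wide (requires root):
#   sudo cp "$entry" /usr/share/applications/sqldeveloper.desktop
```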

You can create a sandboxed container c##student user with the instructions from this earlier post on Oracle Database 18c, which remains the correct syntax.

As always, I hope this helps those trying to accomplish this task.

Parallels + Ubuntu

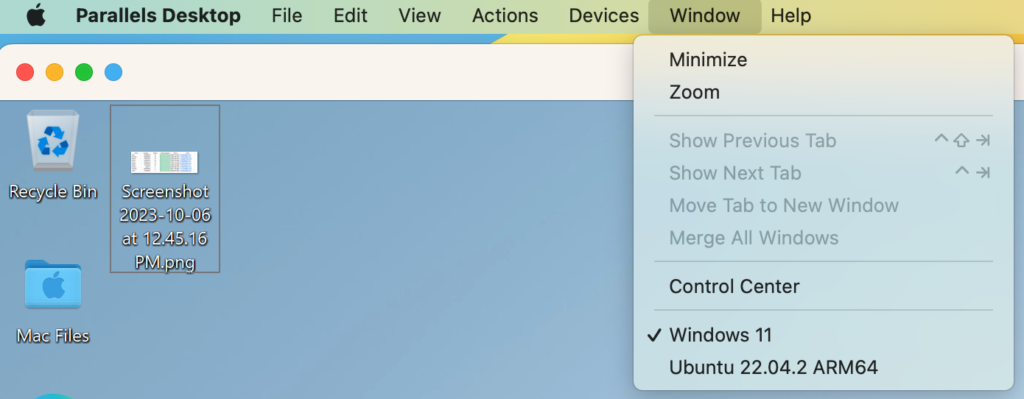

Installing Parallels on my iStudio (M2) was straightforward because I let it install Windows 11. Installing Ubuntu afterward wasn’t quite that easy. The trick is to click the Parallels’ Window menu and then choose the Control Center option.

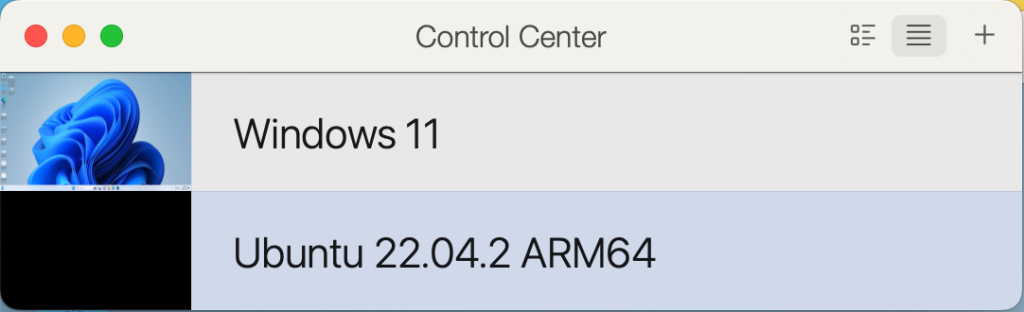

The Control Center option provides the following dialog.

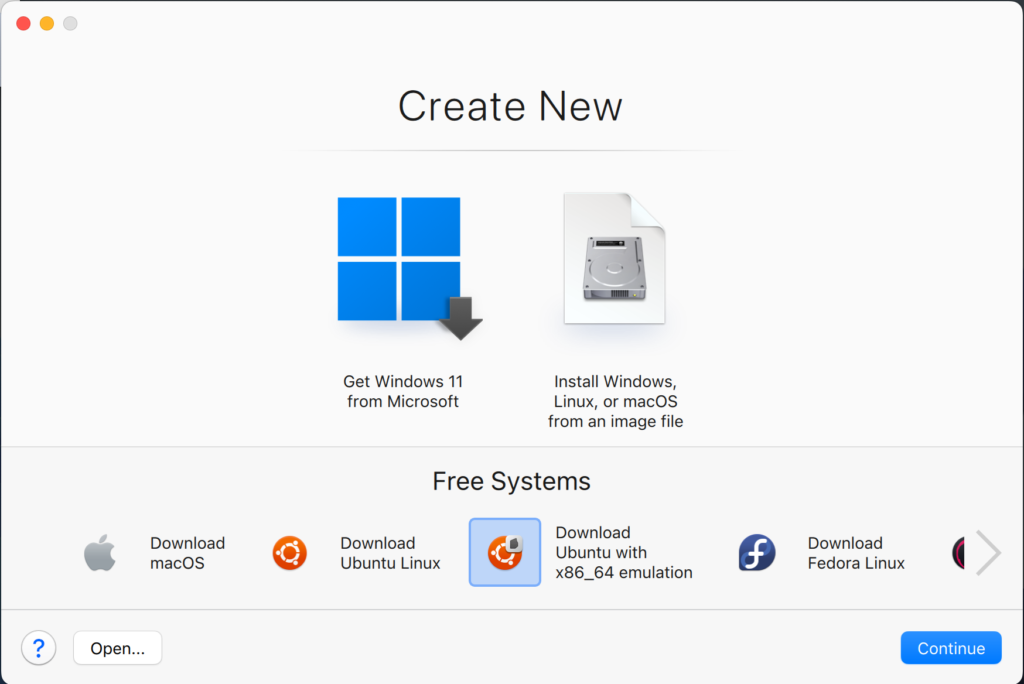

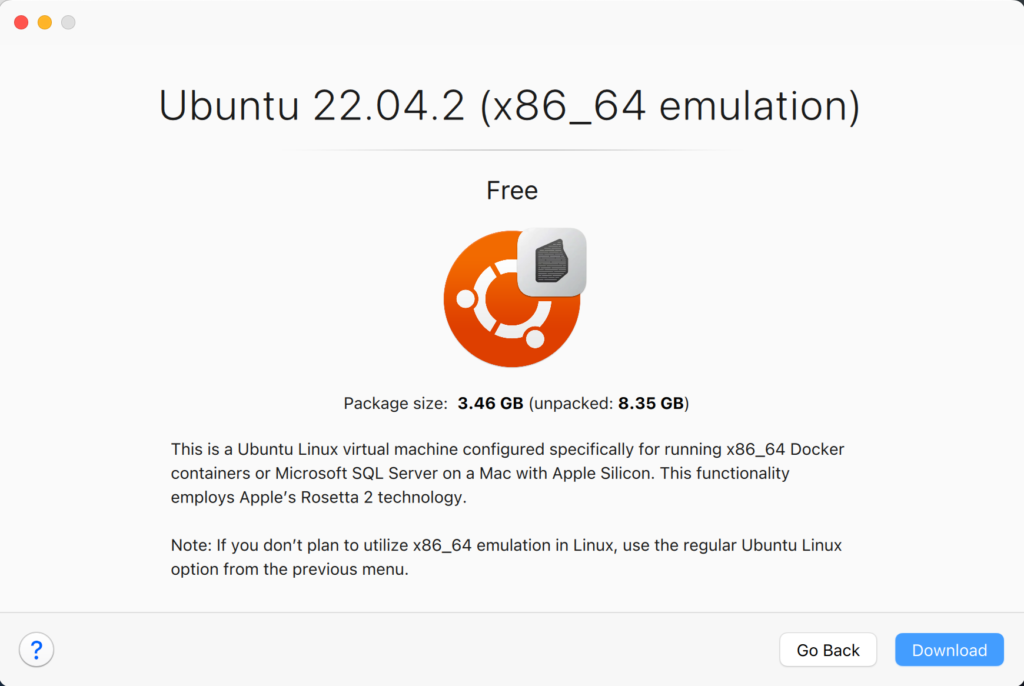

Click the + symbol to create a new virtual machine, which opens the following dialog. Choose the Download Ubuntu with x86_64 emulation option if you want to install a Docker image that’s not ported to ARM, like Oracle Database 19c.

It’ll then explain in this dialog that it uses Apple’s Rosetta 2 technology, which means you should be able to install an Intel architecture Docker image with Oracle Database 23c.

As always, I hope this helps those trying to sort out how to leverage a new stack.

What’s up on M1 Chip?

I’ve been trying to sort out what’s up with Oracle’s support of Apple’s M1 (arm64) chip. It really is a niche area. It only applies to those of us who work on macOS machines with Oracle Database technology; and then only when we want to install a version of Oracle Database 21c or newer in Windows OS for testing. Since Boot Camp is now gone, the only options are virtualized solutions through a full virtualization product or containerized solutions with Docker or Kubernetes.

The Best Virtual Machine Software for Mac 2022 (4/11/2022) article lets us know that only Parallels fully supports Windows virtualization on the ARM64 chip. Then, there’s the restriction that you must use Monterey or Big Sur (macOS) and Windows 11 arm64.

Instructions on how to run Windows 11 on an Apple M1 were published a couple weeks ago. They use the free UTM App from the Apple Store and provide the download site for the Windows Insider Program. You can’t get a copy of Windows 11 arm64 without becoming part of the Windows Insider Program.

The next step would be to try to install Oracle Database 21c on Windows 11 arm64, which may or may not work. I haven’t tested it yet and can’t promise that it works. In fact, I doubt it will work because the Oracle Database 21c documentation says it only supports the x64 (or Intel) architecture.

If anybody knows what Oracle has decided, will decide or even their current thinking on the issue, please make a comment.

PL/pgSQL Function

This post shows how to write an overloaded set of hello_world functions in PostgreSQL PL/pgSQL. The following code creates the overloaded functions and tests them with null, zero-length string, and string values.

-- Drop the overloaded functions.
DROP FUNCTION IF EXISTS hello_world(), hello_world(whom VARCHAR);

-- Create the function.
CREATE FUNCTION hello_world()
RETURNS text AS
$$
DECLARE
  output VARCHAR(20);
BEGIN
  /* Query the string into a local variable. */
  SELECT 'Hello World!' INTO output;

  /* Return the output text variable. */
  RETURN output;
END
$$ LANGUAGE plpgsql;

-- Create the function.
CREATE FUNCTION hello_world(whom VARCHAR)
RETURNS text AS
$$
DECLARE
  output VARCHAR(20);
BEGIN
  /* Query the string into a local variable. */
  IF whom IS NULL OR LENGTH(whom) = 0 THEN
    SELECT 'Hello World!' INTO output;
  ELSE
    SELECT CONCAT('Hello ', whom, '!') INTO output;
  END IF;

  /* Return the output text variable. */
  RETURN output;
END
$$ LANGUAGE plpgsql;

-- Call the function (the alias keeps the column heading consistent).
SELECT hello_world() AS output;
SELECT hello_world(Null) AS output;
SELECT hello_world('') AS output;
SELECT hello_world('Harry') AS output;

It should print:

    output
--------------
 Hello World!
(1 row)

    output
--------------
 Hello World!
(1 row)

    output
--------------
 Hello World!
(1 row)

    output
--------------
 Hello Harry!
(1 row)

As always, I hope this helps those looking for the basics and how to solve problems.

MongoDB on macOS

It’s important to document things before I ask my students to do them. This walks through a native installation of MongoDB on macOS. At least, it does when you’ve already installed Xcode. If you installed Xcode some time ago, you should update it; otherwise, you may get an installation error like the following:

==> Installing mongodb-community from mongodb/brew
Error: Your Xcode (11.1) is too outdated.
Please update to Xcode 13.1 (or delete it).
Xcode can be updated from the App Store.
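You can check the installed Xcode version ahead of time instead of waiting for the error. The sketch below rests on a few assumptions: version_ge and minor_of are hypothetical helper names, the 13.1 minimum is simply the number from the error message above (yours may differ), and the comparison only looks at the major and minor parts of the version. It reads the version from the first line of xcodebuild -version, which prints a line such as "Xcode 13.1".

```shell
#!/bin/sh
# minor_of VERSION: print the minor part of MAJOR.MINOR[.PATCH], or 0 if absent.
minor_of() {
  case "$1" in
    *.*) rest=${1#*.}; echo "${rest%%.*}" ;;
    *)   echo 0 ;;
  esac
}

# version_ge HAVE NEED: succeed when HAVE is at least NEED (major, then minor).
version_ge() {
  have_major=${1%%.*}
  need_major=${2%%.*}
  if [ "$have_major" -ne "$need_major" ]; then
    [ "$have_major" -gt "$need_major" ]
  else
    [ "$(minor_of "$1")" -ge "$(minor_of "$2")" ]
  fi
}

required=13.1   # minimum named in the error message above; an assumption elsewhere

# Read the installed version, leaving it empty when xcodebuild is unavailable.
if command -v xcodebuild >/dev/null 2>&1; then
  installed=$(xcodebuild -version 2>/dev/null | awk 'NR == 1 { print $2 }')
else
  installed=""
fi

if [ -z "$installed" ]; then
  echo "Xcode not detected; install it from the App Store first."
elif version_ge "$installed" "$required"; then
  echo "Xcode $installed is new enough."
else
  echo "Xcode $installed is older than $required; update it before running brew."
fi
```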

The core MongoDB installation document is in the MongoDB 5.0 installation manual. The following is a step-by-step set of instructions for those new to installing something like MongoDB without using the macOS standard package management software.

- The first step requires you to install Homebrew, as described on the Homebrew installation page. Before you can install Homebrew, you need to install Xcode on your macOS. After installing Xcode, you need to launch a Terminal session on your macOS and run the following command from the macOS command-line interface (CLI):

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

After the installation, which may take several minutes, you should see this final message on the console:

==> Next steps:
- Run brew help to get started
- Further documentation:
    https://docs.brew.sh

- Now that Homebrew is installed, the next step requires you to update the Homebrew formula for MongoDB. You use the following command to do that for MongoDB 5:

brew tap mongodb/brew

You should see something like the following on your console when you update the Homebrew formula:

Running `brew update --preinstall`...
==> Homebrew is run entirely by unpaid volunteers. Please consider donating:
  https://github.com/Homebrew/brew#donations
==> Auto-updated Homebrew!
Updated 1 tap (homebrew/core).
==> Updated Formulae
Updated 5 formulae.
==> Tapping mongodb/brew
Cloning into '/usr/local/Homebrew/Library/Taps/mongodb/homebrew-brew'...
remote: Enumerating objects: 825, done.
remote: Counting objects: 100% (322/322), done.
remote: Compressing objects: 100% (236/236), done.
remote: Total 825 (delta 165), reused 140 (delta 83), pack-reused 503
Receiving objects: 100% (825/825), 180.91 KiB | 0 bytes/s, done.
Resolving deltas: 100% (403/403), done.
Tapped 14 formulae (30 files, 246.8KB).

- Assuming your Xcode is current, you now install MongoDB with the necessary supporting packages:

brew install mongodb-community@5.0

You should see something like the following on your console after you install MongoDB 5:

==> Summary
/usr/local/Cellar/mongodb-community/5.0.5: 11 files, 181.5MB, built in 6 seconds
==> Running `brew cleanup mongodb-community`...
Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.
Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).
==> Caveats
==> mongodb-community
To start mongodb/brew/mongodb-community now and restart at login:
  brew services start mongodb/brew/mongodb-community
Or, if you don't want/need a background service you can just run:
  mongod --config /usr/local/etc/mongod.conf

- MongoDB really requires a background service, so I suggest you use the following to create the service:

brew services start mongodb/brew/mongodb-community

You should see something like the following on your console after you start the service:

==> Successfully started `mongodb-community` (label: homebrew.mxcl.mongodb-community)

The installation gave you three key binaries:

- The MongoDB Server Daemon – mongod

- The MongoDB sharded cluster query router – mongos

- The MongoDB Shell – mongosh

The installation also creates configuration, data, and log files based on physical CPU-Chip hardware:

- Intel Processor

- Configuration File: /usr/local/etc/mongod.conf

- Log Directory: /usr/local/var/log/mongodb

- Data Directory: /usr/local/var/mongodb

- Apple M1 Processor

- Configuration File: /opt/homebrew/etc/mongod.conf

- Log Directory: /opt/homebrew/var/log/mongodb

- Data Directory: /opt/homebrew/var/mongodb
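The two sets of paths differ only in their Homebrew prefix, so a short script can print the ones that apply to the current machine. This is a sketch under assumptions: mongo_prefix is a hypothetical helper that maps the value of uname -m to a prefix, and the mongod.conf file name follows the mongod --config hint in the install output shown earlier. Running brew --prefix is another way to get the prefix on a machine that has Homebrew installed.

```shell
#!/bin/sh
# mongo_prefix ARCH (hypothetical helper): Homebrew prefix for the given
# architecture string, as reported by `uname -m`.
mongo_prefix() {
  case "$1" in
    arm64) echo /opt/homebrew ;;   # Apple M1 processors
    *)     echo /usr/local ;;      # Intel processors
  esac
}

prefix=$(mongo_prefix "$(uname -m)")

echo "Configuration file: $prefix/etc/mongod.conf"
echo "Log directory:      $prefix/var/log/mongodb"
echo "Data directory:     $prefix/var/mongodb"
```

Note that a Terminal session running under Rosetta on an M1 machine reports x86_64 from uname -m, so treat the result as a hint rather than a guarantee.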

- You can now start the MongoDB Shell with the following command in a Terminal window:

mongosh

You should see the following when you connect to the MongoDB Shell:

Current Mongosh Log ID: 61e4e2c98f023c2b5fc5a905
Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000
Using MongoDB: 5.0.5
Using Mongosh: 1.1.8

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

To help improve our products, anonymous usage data is collected and sent to MongoDB periodically (https://www.mongodb.com/legal/privacy-policy).
You can opt-out by running the disableTelemetry() command.

------
The server generated these startup warnings when booting:
2022-01-16T20:26:18.320-07:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted
------

test>

As always, I hope the instructions are helpful and clear. If you find an issue, let me know about it.

macOS 2021+ DirectX

Obsolescence is always a factor with the macOS. After all, it does keep the revenue flow stable in hardware sales. Last October, Apple released macOS Monterey (12). It gets problematic for me when helping my students set up MySQL on their Apple computers. Some students come with very old machines. Take for example my wonderfully maintained MacBook Pro (mid 2014), which became obsolete with the terminal release of macOS Big Sur (11.6.2). When I did finally upgrade to that terminal release, I found my VMware Fusion (11.5.*) failed to load with a “Not enough physical memory is available …” error dialog.

The pretty Apple dialog box is quite misleading unless you place it in the context of a VMware application problem that occurs when you upgrade from one version of the macOS to another. You might go down any of three generalized rat holes, like paring running applications or the other nonsense on the Apple Discussion Board. While these three suggestions are useful when your macOS is truly running short of memory, it appears the same error can simply mean the application isn’t supported on the new macOS release. It strikes me that this error message may be linked to a virtualization issue with hypervisor memory management in the combination of VMware Fusion (11.5.*) and macOS Big Sur (11.6).

For that reason, you can’t believe discussion threads unreservedly whether they’re from Apple or other vendors because they’re targeted to a universal context. Often users are looking for a specific fix, which means an answer to a specific use-case or problem context. The VMware Fusion 11 Release Notes clearly state that it only supports macOS Mojave (10.14) and macOS Catalina (10.15), which narrows the context, or use-case, for the error.

The error message, in this context, is most likely raised because the way VMware Fusion 11 manages memory is incompatible at some level with macOS Big Sur (11.6.*). As I speculated earlier, the out-of-memory error may be linked to how VMware uses the hypervisor, but that’s a shot in the dark (a random guess, not the Peter Sellers movie of the same name in which he reprises his role as Inspector Jacques Clouseau).

How VMware works on the macOS is important to my students because we give them two alternatives for setting up MySQL on macOS: one is inside a Linux VM and the other uses Docker (see my notes from January this year for creating a Docker instance on the macOS). My students reported errors like this earlier in the year and I suggested they upgrade to VMware Fusion 12. It seemed to work for everybody, but now I can report the exact error message and verify the fix with a qualified reason.

While I’m on this topic, it’s probably best to deal with DirectX support on Apple hardware. The Apple hardware requirements for supporting DirectX 11 3D acceleration in a virtual machine are currently:

- Mac Pro 2013 and later

- iMac 27-inch 2014 and later

- MacBook Pro 13-inch 2015 and later

- MacBook Pro 15-inch 2015 with dual graphics and later

- MacBook Air 2015 and later

- MacBook 2015 and later

- iMac 21-inch 2015 and later

- iMac Pro 2017 and later

- Mac mini 2018 and later

It looks like everybody must upgrade any older Apple machines because we can probably assume most 2015 hardware will become obsolete with the new macOS in October 2022. If it’s not in your budget, you should plan for that cost now.

Fortunately, I also have a MacBook Pro (Mid 2021), the last of the Intel 9 chip models. I bought it the week before the announcement of the new tech. A little birdie told me it would be only the M1 after the announcement. The newer MacBook Pro is awesome and the 16″ screen is better than the older 15″ screen. I just hate the lack of a magnetic power cord. Alas, that’s the price of ensuring I had an Intel chip set.

As always, I hope this helps those looking for an answer.

MySQL Transaction Scope

ACID transactions are a basic feature of SQL’s individual Data Manipulation Language (DML) commands, like the INSERT, UPDATE, and DELETE statements. Transactions across two or more tables are a natural extension of the ACID compliance features provided by DML commands. However, they require a structured programming approach, like a stored procedure or a similar API implemented in an imperative language.

Surprisingly, transaction management wasn’t covered well in Alan Beaulieu’s Learning SQL because he only provided pseudo code logic. While I thought troubleshooting some broken MySQL SQL/PSM logic would be a good learning experience for students, it wasn’t. So, I wrote this sample code to show how to achieve an all-or-nothing transaction across four tables.

The code for this example on transaction management lets you perform the important tasks necessary to effect transaction management:

- You must disable autocommit in the scope of the session.

- You must use an imperative programming language like SQL/PSM or Python, et cetera.

- You must identify an error thrown in a series of DML statements, roll back all completed work, and exit the scope of the program unit.

This SQL defines the four tables:

/* Drop and create four tables. */
DROP TABLE IF EXISTS one, two, three, four;
CREATE TABLE one   ( id int primary key auto_increment, msg varchar(10));
CREATE TABLE two   ( id int primary key auto_increment, msg varchar(10));
CREATE TABLE three ( id int primary key auto_increment, msg varchar(10));
CREATE TABLE four  ( id int primary key auto_increment, msg varchar(10));

Unfortunately, there’s no way to simplify transaction management from the MySQL Command-Line Interface (CLI) because you need to build the logic that manages success and failure. It requires that you create a procedure using MySQL’s SQL/PSM (Persistent Stored Modules) or another imperative programming language. You might ask why you can’t just write an anonymous block program, like you can in other stored procedural languages. The answer is simple. You can’t write anonymous blocks in MySQL’s SQL/PSM because it adheres to ANSI SQL-2003 (or more accurately ISO/IEC 9075-4:2003).

The following code block performs these tasks:

- Conditionally drops the locking() procedure.

- Sets the default semicolon (;) delimiter to a double-dollar ($$), which lets you use the semicolon as statement and block terminators.

- Declares a locking() procedure with the following:

  - Sets a parameter list with four IN-mode parameters.

  - Declares an EXIT handler that undoes any writes before an error in a sequence of DML commands, like the INSERT, UPDATE, or DELETE statements. The EXIT handler then aborts completion of the rest of the procedure. (MySQL 13.6.7.2 Declare … Handler Statement)

  - Disables autocommit in the scope of the session.

  - Starts a transaction context and inserts data into four tables as a transaction. The EXIT handler picks up processing when one of the INSERT statements fails with a 1406 error code. The 1406 error code represents an error that occurs because the data is too long for a column’s width.

  - When all elements of the procedure complete, you commit the work.

/* Conditionally drop procedure. */
DROP PROCEDURE IF EXISTS locking;

/* Set delimiter to $$ to allow ; inside the procedure. */
DELIMITER $$

/* Create a transaction procedure. */
CREATE PROCEDURE locking(IN pv_one   varchar(10)
                        ,IN pv_two   varchar(10)
                        ,IN pv_three varchar(10)
                        ,IN pv_four  varchar(10))
BEGIN
  /* Declare an EXIT Handler when a string is too long
     for a column. Undo all prior writes with a ROLLBACK
     statement. */
  DECLARE EXIT HANDLER FOR 1406
  BEGIN
    ROLLBACK;
  END;

  /* Disable autocommit. */
  SET AUTOCOMMIT=0;

  /* Start transaction scope. */
  START TRANSACTION;

  /* A series of INSERT statements. */
  INSERT INTO one (msg) VALUES (pv_one);
  INSERT INTO two (msg) VALUES (pv_two);
  INSERT INTO three (msg) VALUES (pv_three);
  INSERT INTO four (msg) VALUES (pv_four);

  /* Commit transaction set. */
  COMMIT;
END;
$$

/* Reset delimiter to ; for SQL statements. */
DELIMITER ;

The next block tests the locking() procedure. The first and third calls are successful but the second one fails because the third parameter is too long for the msg column in the three table. The error triggers the EXIT handler in the locking() procedure.

/* Call locking procedure. */
CALL locking('Donald','Goofy','Mickey','Pluto');
CALL locking('Squirrel','Chipmunk','Monkey business','Raccoon');
CALL locking('Curly','Larry','Moe','Shemp');
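The reason the second call fails can be checked from the shell before any SQL runs. The following is a hypothetical pre-check and not part of the original example: the fits_column function name is an assumption, and the width of 10 comes from the msg varchar(10) definition shown earlier. It counts characters with the shell's ${#value} expansion, which agrees with MySQL's character count for plain ASCII text like these test values.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a value fits a column of the given width.
fits_column() {
  value=$1
  width=$2
  [ "${#value}" -le "$width" ]
}

# Check the third argument of each call against msg varchar(10).
for msg in 'Mickey' 'Monkey business' 'Moe'; do
  if fits_column "$msg" 10; then
    echo "$msg: fits"
  else
    echo "$msg: too long (${#msg} characters)"
  fi
done
```

Only the 'Monkey business' value exceeds the ten-character width, which is the condition that raises the 1406 error.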

The query block below:

/* Select from tables, which should each hold two rows. */
SELECT * FROM one;
SELECT * FROM two;
SELECT * FROM three;
SELECT * FROM four;

Returns the following, which shows only the first and third test cases succeed:

+----+--------+
| id | msg    |
+----+--------+
|  1 | Donald |
|  2 | Curly  |
+----+--------+
2 rows in set (0.01 sec)

+----+-------+
| id | msg   |
+----+-------+
|  1 | Goofy |
|  2 | Larry |
+----+-------+
2 rows in set (0.00 sec)

+----+--------+
| id | msg    |
+----+--------+
|  1 | Mickey |
|  2 | Moe    |
+----+--------+
2 rows in set (0.00 sec)

+----+-------+
| id | msg   |
+----+-------+
|  1 | Pluto |
|  2 | Shemp |
+----+-------+
2 rows in set (0.00 sec)

As always, I hope this helps those trying to write transactions across multiple tables.

MySQL macOS Docker

While you can download MySQL as a DMG package, a number of users would prefer to install it as a Docker instance. You won’t find the macOS downloads on the same web site as other downloads. You can use the following macOS download site.

After installing Docker on your macOS, you can pull a copy of the current MySQL Server with the following command:

docker pull mysql/mysql-server

You should create a mysql directory inside your ~/Documents directory with this command:

mkdir ~/Documents/mysql

Then, you should use the cd command to change into the ~/Documents/mysql directory and run this command:

pwd

It should return the following directory:

/Users/<user_name>/Documents/mysql

Use the /Users/<user_name>/Documents/mysql path as the <path_to_folder> value in the following command:

docker run --name=mysql1 --volume=<path_to_folder>:/var/lib/mysql -p 33060:3306/tcp -d mysql/mysql-server

The --name option value is mysql1 and it becomes the container name. Docker mounts the volume in the ~/Documents/mysql folder. All data from the Docker container under the /var/lib/mysql directory will persist in this directory. This directory will still contain the database when the container is shut down.

The docker run command maps the localhost’s 33060 port to the 3306 port on the Docker container. You will use the 33060 port to connect to the Docker instance of MySQL. The macOS raises a dialog box asking for permission to access the directory. You need to allow Docker to write to the ~/Documents/mysql directory.
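To make the placeholder substitution concrete, the following sketch assembles the same docker run command from a host folder and prints it instead of running it. The mysql_run_command function name and its optional name and port arguments are hypothetical; the options themselves are the ones shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the docker run command for a given host folder,
# container name, and host port. Nothing is executed here.
mysql_run_command() {
  folder=$1
  name=${2:-mysql1}
  port=${3:-33060}
  echo "docker run --name=${name} --volume=${folder}:/var/lib/mysql -p ${port}:3306/tcp -d mysql/mysql-server"
}

# Print the command for the folder created earlier in these steps.
mysql_run_command "${HOME}/Documents/mysql"
```

Printing the command first lets you confirm the folder path before Docker asks for permission to use it.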

You can verify that the Docker container is running with the following command:

docker ps

It should return:

CONTAINER ID   IMAGE                COMMAND                  CREATED         STATUS                   PORTS                                      NAMES
142b5c491cd8   mysql/mysql-server   "/entrypoint.sh mysq…"   7 minutes ago   Up 6 minutes (healthy)   33060-33061/tcp, 0.0.0.0:33060->3306/tcp   mysql1

You can get the MySQL generated root password with this Docker command:

docker logs mysql1 2>&1 | grep GENERATED

It returns something like the following:

[Entrypoint] GENERATED ROOT PASSWORD: vop#3GNYqK3nC@S@N3haf3nox5E
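If you want only the password, without the label in front of it, a small filter can strip the prefix. This is a minimal sketch that assumes the log line keeps the format shown above; the extract_root_password function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read container log text on standard input and print
# only the generated root password.
extract_root_password() {
  sed -n 's/^.*GENERATED ROOT PASSWORD: //p'
}

# Sample log line copied from the output above.
echo '[Entrypoint] GENERATED ROOT PASSWORD: vop#3GNYqK3nC@S@N3haf3nox5E' | extract_root_password
```

Against a running container, the same filter would be fed by the log command, like docker logs mysql1 2>&1 | extract_root_password.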

Use the following Docker command to connect to the Docker container:

docker exec -it mysql1 /bin/bash

It launches a Bash shell inside the Docker container:

bash-4.2#

Start the mysql Command-Line Interface (CLI):

mysql -uroot -p

You are then prompted for a password:

Enter password:

After successfully entering the password, you’ll see the following:

Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 55
Server version: 8.0.22
Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql>

Unless you want to remember that hugely complex root password, you should consider changing it to something simple, like 'cangetin', with the following command:

ALTER USER 'root'@'localhost' IDENTIFIED WITH MYSQL_NATIVE_PASSWORD BY 'cangetin';

Next, you should check for the installed databases with this command:

show databases;

It will return:

+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
4 rows in set (0.01 sec)

After exiting mysql, you can list the contents of the container’s root (/) directory with this command:

ls -al

It should return:

total 84
drwxr-xr-x   1 root root 4096 Jan 12 03:41 .
drwxr-xr-x   1 root root 4096 Jan 12 03:41 ..
-rwxr-xr-x   1 root root    0 Jan 12 03:41 .dockerenv
lrwxrwxrwx   1 root root    7 Oct 12 22:06 bin -> usr/bin
dr-xr-xr-x   2 root root 4096 Apr 11  2018 boot
drwxr-xr-x   5 root root  340 Jan 12 03:41 dev
drwxr-xr-x   2 root root 4096 Oct 19 05:47 docker-entrypoint-initdb.d
-rwxr-xr-x   1 root root 7496 Oct 19 05:37 entrypoint.sh
drwxr-xr-x   1 root root 4096 Jan 12 03:41 etc
-rw-r--r--   1 root root   86 Jan 12 03:41 healthcheck.cnf
-rwxr-xr-x   1 root root 1073 Oct 19 05:37 healthcheck.sh
drwxr-xr-x   2 root root 4096 Apr 11  2018 home
lrwxrwxrwx   1 root root    7 Oct 12 22:06 lib -> usr/lib
lrwxrwxrwx   1 root root    9 Oct 12 22:06 lib64 -> usr/lib64
drwxr-xr-x   2 root root 4096 Apr 11  2018 media
drwxr-xr-x   2 root root 4096 Apr 11  2018 mnt
-rw-r--r--   1 root root    0 Jan 12 03:41 mysql-init-complete
drwxr-xr-x   2 root root 4096 Apr 11  2018 opt
dr-xr-xr-x 127 root root    0 Jan 12 03:41 proc
dr-xr-x---   1 root root 4096 Jan 12 04:21 root
drwxr-xr-x   1 root root 4096 Oct 19 05:47 run
lrwxrwxrwx   1 root root    8 Oct 12 22:06 sbin -> usr/sbin
drwxr-xr-x   2 root root 4096 Apr 11  2018 srv
dr-xr-xr-x  13 root root    0 Jan 12 03:41 sys
drwxrwxrwt   1 root root 4096 Jan 12 03:41 tmp
drwxr-xr-x   1 root root 4096 Oct 12 22:06 usr
drwxr-xr-x   1 root root 4096 Oct 12 22:06 var

At this point, you have to make a choice about how you will access the MySQL database. You have a couple of options:

- Create an individual student user that can access the MySQL-Server as a micro-service, which would only be a MySQL user connecting through MySQL Workbench. At least, that’s the only connection option unless you likewise install the mysql client on your host macOS. The mysql client lets you connect from the host operating system through the Command-Line Interface (CLI).

- Create a local student user account inside the Docker container. It will have access to the container file system and mimic the behavior of a non-root user on a server.

Let’s create both for this demonstration. Reconnect as the root user and issue the following two commands:

CREATE USER 'student'@'localhost' IDENTIFIED WITH MYSQL_NATIVE_PASSWORD BY 'student';
CREATE USER 'student'@'%.%.%.%' IDENTIFIED WITH MYSQL_NATIVE_PASSWORD BY 'student';

The first version of the student user lets you access the database from inside the Docker container. The second version of the student user lets you access the database from MySQL Workbench deployed on your base macOS.
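If you also install the mysql client on the host macOS, the second student account is reached through the mapped port rather than through the container's shell. The following sketch only prints the client command; it assumes the 33060 port from the docker run command above, and the host_mysql_command function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print a mysql client command that connects from the
# macOS host to the container through the mapped TCP port.
host_mysql_command() {
  user=${1:-student}
  port=${2:-33060}
  echo "mysql -u${user} -p --protocol=tcp -h127.0.0.1 -P${port}"
}

# Print the command for the student user on the default mapped port.
host_mysql_command
```

The --protocol=tcp option forces a TCP connection to the mapped port, so the client doesn't look for a local socket file on the host.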

You can add a sakila database and grant all privileges to the student user with the following commands as the root user:

CREATE DATABASE sakila;
GRANT ALL ON sakila.* TO 'student'@'localhost';
GRANT ALL ON sakila.* TO 'student'@'%.%.%.%';

You need to get the sakila database from the Internet within the Docker container. Exit the mysql client with the following command:

quit;

As the root user, install the wget and tar Linux utilities with this command:

yum install -y wget tar

As the student user, you can use the wget command to grab a copy of the sakila database and store the database locally. Use the cd command to get to your ${HOME} directory, like:

cd

Use this syntax to get a copy of the sakila database:

wget http://downloads.mysql.com/docs/sakila-db.tar.gz

Use the ls command to verify the download, then run the following set of Linux commands from the Linux CLI:

tar -xzf sakila-db.tar.gz
cd sakila-db

Run the following two commands from the sakila-db directory:

mysql -ustudent -p < sakila-schema.sql
mysql -ustudent -p < sakila-data.sql

or, you can connect as the student user to the MySQL client and run them there:

source sakila-schema.sql
source sakila-data.sql

You create a non-root student user for the Docker container from the macOS host operating system, which means you need to quit the mysql client and exit the root user’s session in the Docker container.

At the terminal in your macOS, issue the following Docker command to create a student account in the mysql1 container:

docker exec mysql1 bash -c "useradd -u 501 -g mysql -G users \
  -d /home/student -s /bin/bash -c 'Student' -n student"

Now, you can connect as the student user to the mysql1 container, with the following Docker command:

docker exec -it --user student mysql1 bash

The first time you connect, you will be in the / (root) directory. Use the following cd command to go to the student user’s home directory:

cd

Then, type the following command to make the student user’s home directory the default starting directory. It appends the necessary Bash shell command to the end of the .bashrc file. You need to use this command because vim, which would let you edit files interactively, isn’t installed in the default Docker container.

echo 'cd ${HOME}' >> .bashrc
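Running that echo command twice leaves two copies of the line in the .bashrc file. The following is a minimal sketch of an append that is safe to repeat; the append_once function name is hypothetical, and the demonstration writes to a scratch file rather than the real .bashrc file.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only when the file does not
# already contain that exact line.
append_once() {
  line=$1
  file=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Demonstrate against a scratch file: the second call adds nothing.
demo_file=$(mktemp)
append_once 'cd ${HOME}' "$demo_file"
append_once 'cd ${HOME}' "$demo_file"
cat "$demo_file"
rm -f "$demo_file"
```

The grep options match the whole line (-x) as a fixed string (-F), so the ${HOME} text is compared literally instead of being treated as a pattern.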

With this change, the student user will always start in its home directory the next time you connect to the mysql1 container. You can use docker cp from the macOS host to move files into the student user’s home (/home/student) directory. However, you can create a quick test.sql file like this:

echo "select user();" > test.sql

Connect to the mysql CLI as the student user:

mysql -ustudent -p

Call your test.sql file from the Linux CLI, like:

mysql -ustudent -p < test.sql

or, you can run the test.sql program as follows from the MySQL command-line:

source test.sql

It will return:

+-------------------+
| user()            |
+-------------------+
| student@localhost |
+-------------------+
1 row in set (0.00 sec)

That’s the basic setup of the Docker MySQL Container on the macOS. You can do much more once you’ve configured it like this. For example, you can install vim in the container as the root user with the following command:

yum install -y vim

It just takes a minute or a bit more. Adding vim opens up so much flexibility for you inside the Docker container, it’s impossible for me to resist. 😉

MySQL Self-Join

I’m switching to MySQL and leveraging Alan Beaulieu’s Learning SQL as a supporting reference for my Database Design and Development course. While reviewing Alan’s Chapter 5: Querying Multiple Tables, I found his coverage of using self-joins minimal.

In fact, he adds a prequel_film_id column to the film table in the sakila database and then a single row to demonstrate a minimal self-join query. I wanted to show my students how to view a series of rows interconnected by a self-join, like the following:

SELECT   f.title AS film
,        fp.title AS prequel
FROM     film f INNER JOIN film fp
ON       f.prequel_id = fp.film_id
WHERE    f.series_name = 'Harry Potter'
ORDER BY f.series_number;

It returns the following result set:

+----------------------------------------------+----------------------------------------------+
| film                                         | prequel                                      |
+----------------------------------------------+----------------------------------------------+
| Harry Potter and the Chamber of Secrets      | Harry Potter and the Sorcerer's Stone        |
| Harry Potter and the Prisoner of Azkaban     | Harry Potter and the Chamber of Secrets      |
| Harry Potter and the Goblet of Fire          | Harry Potter and the Prisoner of Azkaban     |
| Harry Potter and the Order of the Phoenix    | Harry Potter and the Goblet of Fire          |
| Harry Potter and the Half Blood Prince       | Harry Potter and the Order of the Phoenix    |
| Harry Potter and the Deathly Hallows: Part 1 | Harry Potter and the Half Blood Prince       |
| Harry Potter and the Deathly Hallows: Part 2 | Harry Potter and the Deathly Hallows: Part 1 |
+----------------------------------------------+----------------------------------------------+
7 rows in set (0.00 sec)

Then, I thought about what questions the students might ask. For example, why doesn’t the query return the first film, which doesn’t have a prequel? So, I took the self-join to the next level to display the first film having no prequel, like this:

SELECT   f.title AS film
,        IFNULL(
           CASE
             WHEN NOT f.film_id = fp.film_id
             AND      f.prequel_id = fp.film_id THEN fp.title
           END,'None') AS prequel
FROM     film f LEFT JOIN film fp
ON       f.prequel_id = fp.film_id
WHERE    f.series_name = 'Harry Potter'
ORDER BY f.series_number;

The LEFT JOIN keeps the original Harry Potter and the Sorcerer’s Stone film in the result set even though its prequel_id value is NULL and matches no row. The CASE operator in the SELECT-list returns the prequel’s title only when the join found a different film as the prequel, and it returns a NULL otherwise. Without the IFNULL() wrapper, the first film’s row looks like this:

+----------------------------------------------+----------------------------------------------+
| film                                         | prequel                                      |
+----------------------------------------------+----------------------------------------------+
| Harry Potter and the Sorcerer's Stone        | NULL                                         |
+----------------------------------------------+----------------------------------------------+

The IFNULL() built-in function lets you replace the NULL value returned as the prequel’s title value. The IFNULL() function substitutes a 'None' string literal for a NULL value. The query returns the following result set:

+----------------------------------------------+----------------------------------------------+
| film                                         | prequel                                      |
+----------------------------------------------+----------------------------------------------+
| Harry Potter and the Sorcerer's Stone        | None                                         |
| Harry Potter and the Chamber of Secrets      | Harry Potter and the Sorcerer's Stone        |
| Harry Potter and the Prisoner of Azkaban     | Harry Potter and the Chamber of Secrets      |
| Harry Potter and the Goblet of Fire          | Harry Potter and the Prisoner of Azkaban     |
| Harry Potter and the Order of the Phoenix    | Harry Potter and the Goblet of Fire          |
| Harry Potter and the Half Blood Prince       | Harry Potter and the Order of the Phoenix    |
| Harry Potter and the Deathly Hallows: Part 1 | Harry Potter and the Half Blood Prince       |
| Harry Potter and the Deathly Hallows: Part 2 | Harry Potter and the Deathly Hallows: Part 1 |
+----------------------------------------------+----------------------------------------------+
8 rows in set (0.01 sec)

Alan’s modification of the sakila.film table had the following two related design flaws:

- It didn’t provide a way to guarantee the ordering of films with prequels because relational databases don’t guarantee ordered result sets unless you use an ORDER BY clause, which typically requires a column to order by.

- It didn’t provide a way to isolate a series of films.

I modified the film table differently by adding the series_name, series_number, and prequel_id columns. The series_name column lets you group results and the series_number column lets you order by a preserved sequence that you store as part of the data. The prequel_id column lets you connect to the prequel film, much like the backward portion of a doubly linked list.

The new sakila.film table is:

+----------------------+---------------------------------------------------------------------+------+-----+-------------------+-----------------------------------------------+
| Field                | Type                                                                | Null | Key | Default           | Extra                                         |
+----------------------+---------------------------------------------------------------------+------+-----+-------------------+-----------------------------------------------+
| film_id              | smallint unsigned                                                   | NO   | PRI | NULL              | auto_increment                                |
| title                | varchar(255)                                                        | NO   | MUL | NULL              |                                               |
| description          | text                                                                | YES  |     | NULL              |                                               |
| release_year         | year                                                                | YES  |     | NULL              |                                               |
| language_id          | tinyint unsigned                                                    | NO   | MUL | NULL              |                                               |
| original_language_id | tinyint unsigned                                                    | YES  | MUL | NULL              |                                               |
| rental_duration      | tinyint unsigned                                                    | NO   |     | 3                 |                                               |
| rental_rate          | decimal(4,2)                                                        | NO   |     | 4.99              |                                               |
| length               | smallint unsigned                                                   | YES  |     | NULL              |                                               |
| replacement_cost     | decimal(5,2)                                                        | NO   |     | 19.99             |                                               |
| rating               | enum('G','PG','PG-13','R','NC-17')                                  | YES  |     | G                 |                                               |
| special_features     | set('Trailers','Commentaries','Deleted Scenes','Behind the Scenes') | YES  |     | NULL              |                                               |
| last_update          | timestamp                                                           | NO   |     | CURRENT_TIMESTAMP | DEFAULT_GENERATED on update CURRENT_TIMESTAMP |
| series_name          | varchar(20)                                                         | YES  |     | NULL              |                                               |
| series_number        | int unsigned                                                        | YES  |     | NULL              |                                               |
| prequel_id           | int unsigned                                                        | YES  |     | NULL              |                                               |
+----------------------+---------------------------------------------------------------------+------+-----+-------------------+-----------------------------------------------+
16 rows in set (0.21 sec)

After adding the three new columns, I inserted eight rows for the original Harry Potter films. You can use the following script in the MySQL client (mysql) to add the columns and insert the data to test the preceding queries:

-- Use sakila database. USE sakila; -- Add a prequel_id column to the sakila.film table. ALTER TABLE film ADD (series_name varchar(20)), ADD (series_number int unsigned), ADD (prequel_id int unsigned); -- Set primary to foreign key local variable. SET @sv_film_id = 0; -- Insert Harry Potter films in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Sorcerer''s Stone' ,'A film about a young boy who on his eleventh birthday discovers, he is the orphaned boy of two powerful wizards and has unique magical powers.' , 2001 , 1 , NULL , 3 , 0.99 , 152 , 19.99 ,'PG' ,'Trailers' ,'2001-11-04' ,'Harry Potter' , 1 , NULL ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 2nd film in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Chamber of Secrets' ,'A film where Harry returning to Hogwarts, still famous and a hero, when strange things start to happen ... people are turning to stone and no-one knows what, or who, is doing it.' , 2002 , 1 , NULL , 3 , 0.99 , 160 , 19.99 ,'PG' ,'Trailers' ,'2002-11-15' ,'Harry Potter' , 2 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 3rd film in sakila.film table with classic values clause. 
INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Prisoner of Azkaban' ,'A film where Harry, Ron, and Hermione return for their third year at Hogwarts and are forced to face escaped prisoner, Sirius Black.' , 2004 , 1 , NULL , 3 , 0.99 , 141 , 19.99 ,'PG' ,'Trailers' ,'2004-06-04' ,'Harry Potter' , 3 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 4th film in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Goblet of Fire' ,'A film where where Harry Potter''s name emerges from the Goblet of Fire, and he becomes a competitor in a grueling battle for glory among three wizarding schools - the Triwizard Tournament.' , 2005 , 1 , NULL , 3 , 0.99 , 157 , 19.99 ,'PG' ,'Trailers' ,'2005-11-18' ,'Harry Potter' , 4 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 5th film in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Order of the Phoenix' ,'A film where Lord Voldemort has returned, but the Ministry of Magic is doing everything it can to keep the wizarding world from knowing the truth.' 
, 2007 , 1 , NULL , 3 , 0.99 , 138 , 19.99 ,'PG-13' ,'Trailers' ,'2007-07-12' ,'Harry Potter' , 5 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 6th film in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Half Blood Prince' ,'A film where Voldemort is tightening his grip on Hogwarts and it is no longer the safe haven it once was. Harry and Dumbledore work to find the key to unlock the Dark Lord''s defenses.' , 2009 , 1 , NULL , 3 , 0.99 , 153 , 19.99 ,'PG' ,'Trailers' ,'2009-07-15' ,'Harry Potter' , 6 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 7th film in sakila.film table with classic values clause. INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Deathly Hallows: Part 1' ,'A film where Harry, Ron and Hermione set out on their perilous mission to track down and destroy the Horcruxes - the keys to Voldemort''s immortality.' , 2010 , 1 , NULL , 3 , 0.99 , 146 , 19.99 ,'PG-13' ,'Trailers' ,'2010-11-19' ,'Harry Potter' , 7 , @sv_film_id ); -- Assign the last generated primary key value to the local variable. SET @sv_film_id := last_insert_id(); -- Insert 8th film in sakila.film table with classic values clause. 
INSERT INTO film ( title , description , release_year , language_id , original_language_id , rental_duration , rental_rate , length , replacement_cost , rating , special_features , last_update , series_name , series_number , prequel_id ) VALUES ('Harry Potter and the Deathly Hallows: Part 2' ,'A film where Harry, Ron and Hermione set out on their perilous mission to track down and destroy the Horcruxes - the keys to Voldemort''s immortality.' , 2011 , 1 , NULL , 3 , 0.99 , 130 , 19.99 ,'PG-13' ,'Trailers' ,'2011-07-15' ,'Harry Potter' , 8 , @sv_film_id ); |

You can put the following commands into a SQL script file to revert the sakila.film table to its base configuration:

DELETE FROM film WHERE film_id > 1000;
ALTER TABLE film DROP COLUMN series_name;
ALTER TABLE film DROP COLUMN series_number;
ALTER TABLE film DROP COLUMN prequel_id;
ALTER TABLE film AUTO_INCREMENT = 1000;

As always, I hope this helps those looking for how to solve a new problem.

Fix VMware Networking

Occasionally, my students lose their network connection when copying their virtual machines. This article shows you how to rebuild your Internet connection.

The first step requires you to identify the subnet that VMware uses on your host operating system, which is typically Windows OS or Mac OS X. You can find that by running the following search from a Mac OS X Terminal session or Windows OS Command session.

If you’re on Mac OS X, launch a Terminal session and then use the sudo command to open a shell as the root super user, like this:

sudo sh

As the root super user on Mac OS X, you run the netstat command like this:

sh-3.2# netstat -a | grep 1.ntp | grep -v grep
udp4       0      0  192.168.147.1.ntp      *.*

VMware uses the same subnet for the gateway, with one difference. The gateway is node 2:

192.168.147.2
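The following is a small sketch of that rule in shell. It assumes the host-side address is the value netstat reported and that the gateway sits at node 2, as described here; vmware_gateway is a hypothetical helper name, not a VMware utility.

```shell
#!/bin/sh
# Derive the VMware gateway address from the host-side address that
# netstat reports, e.g. "192.168.147.1.ntp" or plain "192.168.147.1".
# vmware_gateway is a hypothetical helper name, not a VMware tool.
vmware_gateway() {
  addr=${1%.ntp}                 # drop a trailing ".ntp" suffix, if any
  printf '%s.2\n' "${addr%.*}"   # replace the last octet with 2
}

vmware_gateway "192.168.147.1.ntp"
```

With the address from the netstat example, the function prints 192.168.147.2.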

The alternate syntax to find VMware’s subnet requires you to use an Administrator account on Windows, like this:

C:\> netstat -a | findstr /C:.ntp

After you determine the subnet, you need to ensure VMware is configured correctly. Navigate to the menu and choose Virtual Machine and then Settings from the dropdown menu. The software shows you the following:

Then, click on the Network Adapter under the Removable Devices, and you see the following screen:

You need to make sure that you’re using Internet Sharing, or Share with my Mac. If you’re not using it, select it now.

Launch the hosted Linux OS and open a Terminal session. Inside the Terminal, you should find the machine’s hardware (MAC) address as the root user with the ifconfig utility. The technique follows:

[student@localhost ~]$ sudo sh
[sudo] password for student:
sh-4.2# ifconfig
eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        ether 00:0c:29:70:77:64  txqueuelen 1000  (Ethernet)
        RX packets 34  bytes 4190 (4.0 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
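If you would rather not pick the hardware address out of the listing by eye, the following sketch pulls it out with awk. It assumes the ifconfig format shown here, where the address follows the word ether (older ifconfig versions label it HWaddr instead); first_mac is a hypothetical helper name.

```shell
#!/bin/sh
# Print the hardware (MAC) address of the first Ethernet interface found
# in ifconfig-style output read from standard input.
# first_mac is a hypothetical helper name.
first_mac() {
  awk '$1 == "ether" { print $2; exit }'
}

# Example with a fragment of the ifconfig listing; on a live system you
# would run: ifconfig | first_mac
first_mac <<'EOF'
eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        ether 00:0c:29:70:77:64  txqueuelen 1000  (Ethernet)
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        loop  txqueuelen 0  (Local Loopback)
EOF
```

For the sample input, the function prints 00:0c:29:70:77:64, which is the value the configuration files need.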

Next, you need to edit some files. The examples assume the VMware Network Gateway is 192.168.147.2 and the machine’s hardware address is “00:0c:29:70:77:64”. The first file you need to edit is the /etc/resolv.conf file, and it should look like this:

domain localdomain
search localdomain
nameserver 192.168.147.2

The second file you need to edit is the /etc/sysconfig/network file. It should look like this:

# Created by anaconda
NETWORKING=yes
HOSTNAME=localhost.localdomain
GATEWAY=192.168.147.2

The third file you need to edit is the /etc/sysconfig/network-scripts/ifcfg-eth0 file. It should look like this:

DEVICE=eth0
HWADDR=00:0c:29:70:77:64
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=dhcp
TYPE=Ethernet
DNS1=192.168.147.2
USERCTL=no
PEERDNS=yes
IPV6INIT=no
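If your gateway or hardware address differs from mine, the following sketch generates the three files from those two values. It writes them into a staging directory so you can review them before copying them into /etc as root. The render_network_files name, its argument order, and the network-staging directory are assumptions for illustration.

```shell
#!/bin/sh
# Write the three network files into a staging directory so they can be
# reviewed before being copied into /etc as root.
# render_network_files and its argument order are hypothetical.
render_network_files() {
  gateway=$1   # VMware gateway, e.g. 192.168.147.2
  hwaddr=$2    # hardware (MAC) address reported by ifconfig
  outdir=$3    # staging directory; nothing under /etc is touched

  mkdir -p "$outdir/sysconfig/network-scripts" || return 1

  cat > "$outdir/resolv.conf" <<EOF
domain localdomain
search localdomain
nameserver $gateway
EOF

  cat > "$outdir/sysconfig/network" <<EOF
NETWORKING=yes
HOSTNAME=localhost.localdomain
GATEWAY=$gateway
EOF

  cat > "$outdir/sysconfig/network-scripts/ifcfg-eth0" <<EOF
DEVICE=eth0
HWADDR=$hwaddr
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=dhcp
TYPE=Ethernet
DNS1=$gateway
USERCTL=no
PEERDNS=yes
IPV6INIT=no
EOF
}

render_network_files 192.168.147.2 00:0c:29:70:77:64 ./network-staging
```

The staging layout mirrors the paths under /etc, so the reviewed files can be copied into place one at a time.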

The last step requires that you reboot the machine or run the /etc/rc.d/init.d/network script to restart the network. I hope this helps those trying to restore their VMware hosted operating system’s network connection.