Python Objects

I promised to give my students a full example of how to write and execute a Python object. There were two motivations for this post. The first was my students trying to understand the basics, and the second was somebody else saying Python couldn’t deliver objects. Hopefully, this code is simple enough for both audiences. Earlier, I gave them this other tutorial on writing and mimicking overloaded Python functions.

This defines a Ball object type and a FilledBall object subtype of Ball. It incorporates the following elements:

- A special __init__ function, which is the Python equivalent of a C++ constructor.

- A special __str__ function, which represents a class object instance as a string. It is like the toString() method in the Java programming language.

- A bounce instance function, which means it acts on any instance of the Ball object type or FilledBall object subtype.

- A getDirection instance function, which calls the __format local object function. The call is intended to mimic a private function call, like those in other object-oriented programming languages.

- A private name __format function (Private name mangling: When an identifier that textually occurs in a class definition begins with two or more underscore characters and does not end in two or more underscores, it is considered a private name of that class.)
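The name mangling rule quoted above is easier to see in a small experiment. The following is a minimal sketch, assuming a trimmed-down Ball class that keeps only the direction attribute; it shows that Python stores __format under the mangled name _Ball__format, which is why the function is only pseudo-private:

```python
class Ball:
    def __init__(self, direction="down"):
        self.direction = direction

    def getDirection(self):
        # Inside the class body, self.__format is compiled as self._Ball__format.
        return self.__format(self.direction)

    # Pseudo-private function: stored on the class as _Ball__format.
    def __format(self, msg):
        return "[" + msg + "]"


ball = Ball()

# The public function reaches the private one through the mangled name.
print(ball.getDirection())

# Outside the class no mangling occurs, so the plain name is not found.
print(hasattr(ball, "__format"))

# The mangled name is still reachable, so this is a convention, not protection.
print(ball._Ball__format("up"))
```

The last call works, which is the reason the function is better described as pseudo-private than private.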

You can test this code by setting the $PYTHONPATH (Unix or Linux) or %PYTHONPATH% (Windows) environment variable to the present working directory, which should hold all the code. In Unix or Linux, you set it like this:

export PYTHONPATH=.

Then, you create the Ball.py file with this syntax:

    # Creates a Ball object type and FilledBall object subtype.
    class Ball:
        # User-defined constructor with optional parameters.
        def __init__(self, color = None, radius = None, direction = None):
            # Assign a default color value when the parameter is null.
            if color is None:
                self.color = "Blue"
            else:
                self.color = color.lower()

            # Assign a default radius value when the parameter is null.
            if radius is None:
                self.radius = 1
            else:
                self.radius = radius

            # Assign a default direction value when the parameter is null.
            if direction is None:
                self.direction = "down"
            else:
                self.direction = direction.lower()

            # Set direction switch values.
            self.directions = ("down","up")

        # User-defined standard function when printing an object type.
        def __str__(self):
            # Build a default descriptive message of the object.
            msg = "It's a " + self.color + " " + str(self.radius) + '"' + " ball"

            # Return the message variable.
            return msg

        # Define a bounce function.
        def bounce(self, direction = None):
            # Set direction on bounce.
            if direction is not None:
                self.direction = direction
            else:
                # Switch directions.
                if self.directions[0] == self.direction:
                    self.direction = self.directions[1]
                elif self.directions[1] == self.direction:
                    self.direction = self.directions[0]

        # Define a getDirection function.
        def getDirection(self):
            # Return current direction of ball.
            return self.__format(self.direction)

        # User-defined pseudo-private function, which is available
        # to instances of the Ball object and any of its subtypes.
        def __format(self, msg):
            return "[" + msg + "]"

    # This is the object subtype, which takes the parent class as an
    # argument.
    class FilledBall(Ball):
        def __init__(self, filler = None):
            # Instantiate the parent class and then any incremental
            # parameter values.
            Ball.__init__(self,"Red",2)

            # Add a default value or the constructor filler value.
            if filler is None:
                self.filler = "Air".lower()
            else:
                self.filler = filler

        # User-defined standard function when printing an object type, which
        # uses generalized invocation.
        def __str__(self):
            # Build a default descriptive message of the object.
            msg = Ball.__str__(self) + str(" filled with " + self.filler)

            # Return the message variable.
            return msg

Next, let’s test instantiating the Ball object type with the following code:

    #!/usr/bin/python

    # Import the Ball module into its own namespace.
    import Ball

    # Assign an instantiated class to a local variable.
    myBall = Ball.Ball()

    # Check whether the local variable holds a valid Ball instance.
    if myBall is not None:
        print(myBall, "instance.")
    else:
        print("No Ball instance.")

    # Loop through 10 times changing bounce direction.
    for i in range(10):
        # Find direction of ball.
        print(myBall.getDirection())

        # Bounce the ball.
        myBall.bounce()

Next, let’s test instantiating the FilledBall object subtype with the following code:

    #!/usr/bin/python

    # Import the Ball module into its own namespace.
    import Ball

    # Assign an instantiated class to a local variable.
    myBall = Ball.FilledBall()

    # Check whether the local variable holds a valid FilledBall instance.
    if myBall is not None:
        print(myBall, "instance.")
    else:
        print("No Ball instance.")

    # Loop through 10 times changing bounce direction.
    for i in range(10):
        # Find direction of ball.
        print(myBall.getDirection())

        # Bounce the ball.
        myBall.bounce()
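The FilledBall subtype calls its parent explicitly with Ball.__init__(self, ...) and Ball.__str__(self). A common alternative in Python 3 is the built-in super() function. The following is only a sketch of that alternative, assuming simplified versions of the two classes with plain default values rather than the None checks used above:

```python
class Ball:
    def __init__(self, color="blue", radius=1):
        self.color = color
        self.radius = radius

    def __str__(self):
        return "It's a " + self.color + " " + str(self.radius) + '" ball'


class FilledBall(Ball):
    def __init__(self, filler="air"):
        # super() resolves the parent class without naming it explicitly.
        super().__init__("red", 2)
        self.filler = filler

    def __str__(self):
        # Reuse the parent's message and append the subtype's detail.
        return super().__str__() + " filled with " + self.filler


print(FilledBall())
```

The behavior matches the explicit parent calls. The difference is that the subtype no longer repeats the parent's name, which helps if you later rename the parent class.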

As always, I hope this helps those looking to learn and extend their knowledge.

User/Group Setups

The following are samples of creating, changing, and removing users and groups in Linux. These are the command-line options in the event you don’t have access to the GUI tools.

Managing Users:

Adding a user:

The prototype is:

    # useradd [-u uid] [-g initial_group] [-G group[,...]] \
    > [-d home_directory] [-s shell] [-c comment] \
    > [-m [-k skeleton_directory]] [-f inactive_time] \
    > [-e expire_date] -n username

A sample implementation of the prototype is:

    # useradd -u 502 -g dba -G users,root \
    > -d /u02/oracle -s /bin/tcsh -c "Oracle Account" \
    > -f 7 -e 12/31/03 -n jdoe
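If you script user creation from Python, it helps to build the argument list before handing it to the shell. The following is a minimal sketch under stated assumptions: build_useradd is a hypothetical helper name, it covers only a few of the flags from the prototype above (-u, -g, -G, -d, -s, and -c), and it only assembles and prints the command. It does not run useradd.

```python
import shlex


def build_useradd(username, uid=None, group=None, groups=(), home=None,
                  shell=None, comment=None):
    """Return the useradd argument list; nothing is executed."""
    argv = ["useradd"]
    if uid is not None:
        argv += ["-u", str(uid)]
    if group:
        argv += ["-g", group]
    if groups:
        # Supplementary groups are passed as one comma-separated value.
        argv += ["-G", ",".join(groups)]
    if home:
        argv += ["-d", home]
    if shell:
        argv += ["-s", shell]
    if comment:
        argv += ["-c", comment]
    argv.append(username)
    return argv


argv = build_useradd("jdoe", uid=502, group="dba", groups=("users",),
                     home="/u02/oracle", shell="/bin/tcsh",
                     comment="Oracle Account")

# shlex.join quotes the comment so the line can be pasted into a shell.
print(shlex.join(argv))
```

Keeping the arguments in a list avoids quoting mistakes with values like the comment, which contains a space.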

Modifying a user:

The prototype is:

    # usermod [-u uid] [-g initial_group] [-G group[,...]] \
    > [-d home_directory] [-s shell] [-c comment] \
    > [-l new_username] [-f inactive_time] [-e expire_date] \
    > username

A sample implementation of the prototype is:

    # usermod -u 502 -g dba -G users,root \
    > -d /u02/oracle -s /bin/bash -c "Senior DBA" \
    > -l sdba -f 7 -e 12/31/03 jdoe

Removing a user:

The prototype is:

# userdel [-r] username

A sample implementation of the prototype is:

# userdel -r jdoe

Managing Groups:

Adding a group:

The prototype is:

# groupadd [-g gid] [-rf] groupname

A sample implementation of the prototype is:

# groupadd -g 500 dba

Modifying a group:

The prototype is:

# groupmod [-g gid] [-n new_group_name] groupname

A sample implementation of the prototype is:

# groupmod -g 500 -n dba oinstall

Deleting a group:

The prototype is:

# groupdel groupname

A sample implementation of the prototype is:

# groupdel dba

Installing a GUI Manager for Users and Groups:

If you’re the root user or enjoy sudoer privileges, you can install the following GUI package for these tasks:

yum install -y system-config-users

You can verify the GUI user management tool is present with the following command:

which system-config-users

It should return this:

/bin/system-config-users

You can run the GUI user management tool from the root user account or any sudoer account. The following shows how to launch the GUI User Manager from a sudoer account:

sudo system-config-users

As always, I hope this helps those trying to figure out the proper syntax.

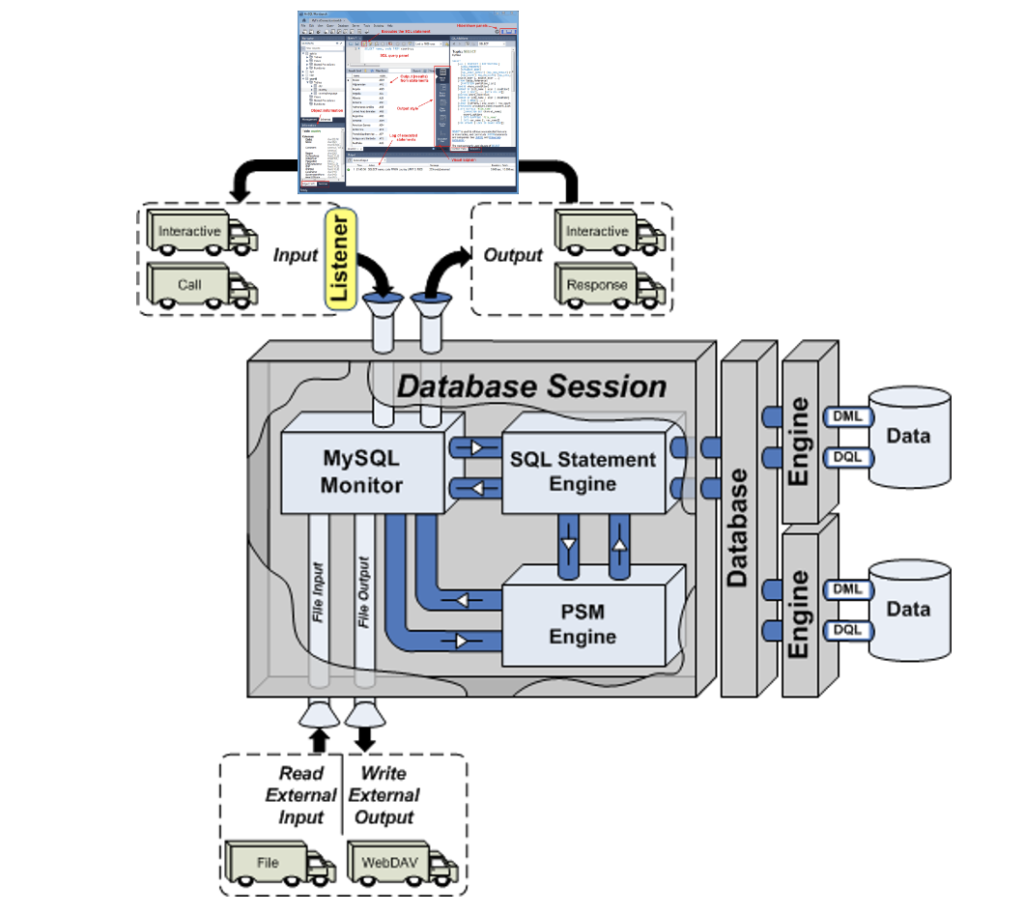

MySQL Workbench Fits

My students wanted an illustration of where MySQL Workbench fits within the MySQL database. So, I overlaid it in this drawing from my old book on comparative SQL syntax for Oracle and MySQL. Anybody else have a cool alternative illustration?

The idea is that the lightning bolt transmits an interactive call, while reading a script file follows the call paradigm of submitting a script.

More or less, MySQL processes a static query in the panel, much as the following Python program processes a dynamic query (the statement assigned to the query variable and run by cursor.execute()) with parameters sent when calling the Python script.

    #!/usr/bin/python
    # ------------------------------------------------------------
    #  Name: mysql-query3.py
    #  Date: 20 Aug 2019
    # ------------------------------------------------------------
    #  Purpose:
    #  -------
    #    The program shows you how to provide arguments, convert
    #    from a list to individual variables of the date type.
    #
    #    You can call the program:
    #
    #    ./mysql-query3.py 2001-01-01 2003-12-31
    #
    # ------------------------------------------------------------

    # Import the library.
    import sys
    import mysql.connector
    from datetime import datetime
    from datetime import date
    from mysql.connector import errorcode

    # Capture argument list.
    fullCmdArguments = sys.argv

    # Assignable variables.
    start_date = ""
    end_date = ""

    # Assign argument list to variable.
    argumentList = fullCmdArguments[1:]

    # Check and process argument list.
    # ============================================================
    #  If there are less than two arguments provide default values.
    #  Else enumerate and convert strings to dates.
    # ============================================================
    if (len(argumentList) < 2):
        # Set a default start date.
        if (isinstance(start_date,str)):
            start_date = date(1980, 1, 1)

        # Set the default end date.
        if (isinstance(end_date,str)):
            end_date = datetime.date(datetime.today())
    else:
        # Enumerate through the argument list where beginDate precedes endDate as strings.
        try:
            for i, s in enumerate(argumentList):
                if (i == 0):
                    start_date = datetime.date(datetime.fromisoformat(s))
                elif (i == 1):
                    end_date = datetime.date(datetime.fromisoformat(s))
        except ValueError:
            print("One of the first two arguments is not a valid date (YYYY-MM-DD).")
            # Stop here rather than query with unconverted values.
            sys.exit(1)

    # Attempt the query.
    # ============================================================
    #  Use a try-catch block to manage the connection.
    # ============================================================
    try:
        # Open connection.
        cnx = mysql.connector.connect(user='student', password='student',
                                      host='127.0.0.1',
                                      database='studentdb')
        # Create cursor.
        cursor = cnx.cursor()

        # Set the query statement.
        query = ("SELECT CASE "
                 " WHEN item_subtitle IS NULL THEN CONCAT('''',item_title,'''') "
                 " ELSE CONCAT('''',item_title,': ',item_subtitle,'''') "
                 " END AS title, "
                 "release_date "
                 "FROM item "
                 "WHERE release_date BETWEEN %s AND %s "
                 "ORDER BY item_title")

        # Execute cursor.
        cursor.execute(query, (start_date, end_date))

        # Display the rows returned by the query.
        for (title, release_date) in cursor:
            print("{}, {:%d-%b-%Y}".format(title, release_date))

        # Close cursor.
        cursor.close()

    # ------------------------------------------------------------
    # Handle exception and close connection.
    except mysql.connector.Error as e:
        if e.errno == errorcode.ER_ACCESS_DENIED_ERROR:
            print("Something is wrong with your user name or password")
        elif e.errno == errorcode.ER_BAD_DB_ERROR:
            print("Database does not exist")
        else:
            print("Error code:", e.errno)        # error number
            print("SQLSTATE value:", e.sqlstate) # SQLSTATE value
            print("Error message:", e.msg)       # error message

    # Close the connection when the try block completes.
    else:
        cnx.close()

You could call this type of script from the Linux CLI (Command-Line Interface), like this:

./mysql-query3.py '2003-01-01' '2003-12-31' 2>/dev/null
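The script above converts the command-line arguments by hand. The standard library's argparse module can do the conversion and supply the defaults in one place. The following is a sketch only, with one assumption that differs from the script: a single argument is accepted as the start date, rather than falling back to both defaults. The parse_dates function name is mine, not part of the original program.

```python
import argparse
from datetime import date


def parse_dates(argv):
    """Return (start_date, end_date) as date objects from an argument list."""
    parser = argparse.ArgumentParser(description="Query items by release date.")

    # nargs="?" makes each positional argument optional; type converts the
    # string and makes argparse report an error for an invalid date.
    parser.add_argument("start_date", nargs="?", type=date.fromisoformat,
                        default=date(1980, 1, 1))
    parser.add_argument("end_date", nargs="?", type=date.fromisoformat,
                        default=date.today())

    args = parser.parse_args(argv)
    return args.start_date, args.end_date


start_date, end_date = parse_dates(["2001-01-01", "2003-12-31"])
print(start_date, end_date)
```

In a real script you would call parse_dates(sys.argv[1:]) and pass the two values to cursor.execute() as the bind parameters.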

As always, I hope this helps those looking to understand things.

MySQL Posts Summary

Here’s a quick catalog for my students of PowerShell, JavaScript, and Python examples connecting to MySQL:

- MySQL Powershell Connection with .Net Library Example

- MySQL Powershell Connection with ODBC DSN Example

- MySQL Powershell with CSV File Write Example

- MySQL Powershell with Dialog for Dynamic Connection Inputs

- MySQL Node.js Introduction without Bind Variables

- MySQL Express.js Introduction with Bind Variables – Inclusive of Alternate Syntax

- MySQL Node.js Server-side Scripting Example – Inclusive of Regular Expressions and Parameter Validation

- MySQL Node.js Server-side Clarification of JavaScript Streams

- MySQL Python Connector – Working Example with Python 2 & 3

- MySQL Python with CTE Examples

- MySQL How to use Python to Read a CSV and Write it to a Table

- MySQL How to use Python to cleanup JSON Presentation

As always, I hope this helps those looking for a code sample.

Python Functions

It seems a number of my students had some confusion over how to write overloaded Python functions. So, I prepared this little tutorial using Python 3.

The first basic1.py example file is a standalone Python file that:

- Defines a hello() world function.

- Calls the local hello() world function.

    #!/usr/bin/python

    # Define a hello() function.
    def hello():
        print("Hello World!")

    # Call the hello() function.
    hello()

You can test the basic1.py script as follows:

./basic1.py

It prints:

Hello World!

The second basic2.py example file is also a standalone Python file that:

- Attempts to define overloaded hello() world functions. One version takes no arguments and the other takes one argument.

- Attempts to call the overloaded local hello() world function without any arguments and with one argument.

    #!/usr/bin/python

    # Define the hello() function without any arguments.
    def hello():
        print("Hello World!")

    # Define the hello() function with one argument.
    def hello(whom):
        print("Hello", whom)

    # Call the overloaded hello() functions.
    hello()
    hello("Henry")

You can test the basic2.py script as follows:

./basic2.py

It successfully defines the hello() function and then replaces it with the hello(whom) function. So, it raises the following runtime error because the call to hello() finds the hello(whom) function and the call lacks the required argument.

    Traceback (most recent call last):
      File "/home/student/Code/python/funct/./basic2.py", line 12, in <module>
        hello()
    TypeError: hello() missing 1 required positional argument: 'whom'
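The replacement happens because a def statement simply binds a name to a function object, and a second def with the same name rebinds it. The following sketch makes that visible by keeping a reference to the first function before the second definition runs. The first variable is only there for illustration, and the functions return strings instead of printing so the result is easy to check:

```python
def hello():
    return "Hello World!"


# Keep a reference to the first function object.
first = hello


# This rebinds the name hello; it does not create an overload.
def hello(whom):
    return "Hello " + whom


# The name now points at the second function, not the first.
print(hello is first)

# The first function still exists, but only through the saved reference.
print(first())

# Calling hello() without an argument fails, as in basic2.py.
try:
    hello()
except TypeError as e:
    print("TypeError:", e)
```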

The third basic3.py example file is also a standalone Python file that:

- Defines a function that acts like an overloaded hello(whom=None) world function.

- Calls the local hello(whom=None) world function without any arguments and with one argument. It works because you do two things:

  - You assign a default null value to the whom parameter, which makes the parameter optional in the function’s signature.

  - You use an if-statement to manage the behavior of a null parameter. The None keyword defines a null value. Please note that the is reference comparison operator is the recommended way to evaluate whether a variable contains a null value.

    #!/usr/bin/python

    # Define the hello() function with an optional parameter; and
    # manage the inner workings with and without a parameter.
    def hello(whom = None):
        if whom is None:
            print("Hello World!")
        else:
            print("Hello", whom + "!")

    # Call the overloaded hello() functions.
    hello()
    hello("Henry")

You can test the basic3.py script as follows:

./basic3.py

It prints:

    Hello World!
    Hello Henry!

At this point, we need to qualify how you can position a Python library file in a development directory. Development directories aren’t typically in the standard library locations, which means you need to define the directories in the $PYTHONPATH environment variable.

There’s a convenient trick that lets you set the $PYTHONPATH value so that you can use it across multiple test environments. It requires you to create an src directory for your library source code inside the directory where you develop code that will use library functions.

After creating the src directory, you can set the $PYTHONPATH environment variable with a relative src directory in the following syntax:

export PYTHONPATH=$PYTHONPATH:./src:.

It will now let Python look for libraries in the src subdirectory or the present working directory.
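You can check what Python actually searches by inspecting sys.path, which is where the $PYTHONPATH entries end up when the interpreter starts. The following sketch does roughly the same thing from inside a program, assuming a hypothetical src directory under the present working directory. The directory does not need to exist for the path entry to be added:

```python
import os
import sys

# Hypothetical layout: a src directory under the present working directory.
src_dir = os.path.join(os.getcwd(), "src")

# Add the directory to the module search path if it is not already there.
if src_dir not in sys.path:
    sys.path.insert(0, src_dir)

# Confirm that Python will now look in the src directory for libraries.
print(src_dir in sys.path)
```

Setting the environment variable is usually the better choice for test environments, because it keeps path handling out of your programs.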

Next, you deploy the following hello(whom=None) function in a lib.py file inside the src subdirectory.

    # Define the hello() function with an optional parameter; and
    # manage the inner workings with and without a parameter.
    def hello(whom = None):
        if whom is None:
            print("Hello World!")
        else:
            print("Hello", whom + "!")

In the parent directory of the src subdirectory create the basic4.py file, like:

    #!/usr/bin/python

    # Import the lib.py file as a lib package.
    import lib

    # Call the hello() function without arguments and
    # with one argument within the namespace of the lib
    # library.
    lib.hello()
    lib.hello("Henry")

An alternate way to write the basic4.py program imports a single namespace element (like a variable, function, or object) and places it in the local namespace of the program. You can then call the hello() function without the lib prefix:

    #!/usr/bin/python

    # Define the hello namespace element from the lib
    # library in the current program.
    from lib import hello

    # Call the hello() function without arguments and
    # with one argument from the local namespace.
    hello()
    hello("Henry")

The hello() function only prints messages. You can add a return statement to return a value from the hello() function. The modified library returns a string rather than printing a string, as follows:

    # Define the hello() function with an optional parameter; and
    # manage the inner workings with and without a parameter.
    def hello(whom = None):
        if whom is None:
            return "Hello World!"
        else:
            return "Hello " + whom + "!"

    # Define the goodbye() function with an optional parameter; and
    # manage the inner workings with and without a parameter.
    def goodbye(whom = None):
        if whom is None:
            return "Goodbye World!"
        else:
            return "Goodbye " + whom + "!"

You would then make the following changes to the basic5.py program that calls the lib.py library file. You could also call the goodbye() function inside the imported lib scope. However, you wouldn’t be able to call the goodbye() function if you had imported only the hello() function from the lib package into the local namespace.

    #!/usr/bin/python

    # Import the lib.py file as a lib package.
    import lib

    # Call the hello() function without arguments and
    # with one argument within the namespace of the lib
    # library.
    print(lib.hello())
    print(lib.hello("Henry"))
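The point about importing only one function is easy to test without the lib.py file. The following sketch uses the standard math module as a stand-in for the lib library, which is my substitution for illustration. It imports only sqrt and then shows that another function from the same module is not in the local namespace:

```python
# Import a single namespace element into the local namespace.
from math import sqrt

# The imported function works without a module prefix.
print(sqrt(16.0))

# Any other function from the module was not imported, so the
# unqualified name raises a NameError.
missing = False
try:
    floor(2.5)
except NameError as e:
    missing = True
    print("NameError:", e)
```

The same thing happens with goodbye() when a program only runs from lib import hello.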

As always, I hope this helps those looking to understand and use functions in Python.

PostgreSQL on Ubuntu

Fresh install of Ubuntu on my MacBook Pro i7 because Apple said the OS X was no longer upgradable. Time to install and configure PostgreSQL Server. These are the steps to install PostgreSQL on the Ubuntu Desktop.

Installation

- Update the Ubuntu OS by checking for, inspecting, and upgrading any available updates with the following commands:

    sudo apt update
    sudo apt list
    sudo apt upgrade

- Install the PostgreSQL Server packages with this command:

sudo apt install postgresql postgresql-contrib

- Connect as the postgres user with the following command:

sudo -i -u postgres

Then, you can connect to PostgreSQL with this command:

psql

It displays your connection as the postgres superuser. Then, you can use the show data_directory; command to find the data directory:

    psql (14.8 (Ubuntu 14.8-0ubuntu0.22.04.1))
    Type "help" for help.

    postgres=# show data_directory;
           data_directory
    -----------------------------
     /var/lib/postgresql/14/main
    (1 row)

    postgres=# \q

- At this point, you have a few operating system (OS) items to set up before configuring a PostgreSQL sandboxed videodb database and student user.

- Assume the role of the root superuser on Ubuntu with this command:

sudo sh

As the root user, navigate to /etc/postgresql/14/main directory and edit the pg_hba.conf file. Add lines for the postgres and student users, as shown below:

    # TYPE  DATABASE        USER            ADDRESS                 METHOD

    # "local" is for Unix domain socket connections only
    local   all             all                                     peer
    local   all             postgres                                peer
    local   all             student                                 peer
    # IPv4 local connections:
    host    all             all             127.0.0.1/32            scram-sha-256
    # IPv6 local connections:
    host    all             all             ::1/128                 scram-sha-256
    # Allow replication connections from localhost, by a user with the
    # replication privilege.
    local   replication     all                                     scram-sha-256
    host    replication     all             127.0.0.1/32            scram-sha-256
    host    replication     all             ::1/128                 scram-sha-256

- As the root user, navigate to the /var/lib/postgresql/14 directory, and make the video_db directory with the following command:

mkdir video_db

- Change the video_db ownership and group to the respective postgres user and primary group:

chown postgres:postgres video_db

- Change the video_db permissions to read, write, and execute for only the owner (the postgres user) with this syntax:

chmod 700 video_db

- Connect to the postgres account and perform the following commands:

- Connect as the postgres user with the following command:

sudo -i -u postgres

- After connecting as the postgres superuser, you can create a video_db tablespace with the following syntax:

CREATE TABLESPACE video_db OWNER postgres LOCATION '/var/lib/postgresql/14/video_db';

This will return the following:

CREATE TABLESPACE

You can query whether you successfully created the video_db tablespace with the following:

SELECT * FROM pg_tablespace;

It should return the following:

      oid  |  spcname   | spcowner | spcacl | spcoptions
    -------+------------+----------+--------+------------
      1663 | pg_default |       10 |        |
      1664 | pg_global  |       10 |        |
     16389 | video_db   |       10 |        |
    (3 rows)

You need to know the PostgreSQL default collation before you create a new database. You can write the following query to determine the default collation:

postgres=# SELECT datname, datcollate FROM pg_database WHERE datname = 'postgres';

It should return something like this:

     datname  | datcollate
    ----------+-------------
     postgres | en_US.UTF-8
    (1 row)

The datcollate value of the postgres database needs to be the same value that you use for the LC_COLLATE and LC_CTYPE parameters when you create a database. You can create a videodb database with the following syntax, provided you’ve made appropriate substitutions for the LC_COLLATE and LC_CTYPE values below:

    CREATE DATABASE videodb
      WITH OWNER = postgres
      ENCODING = 'UTF8'
      TABLESPACE = video_db
      LC_COLLATE = 'en_US.UTF-8'
      LC_CTYPE = 'en_US.UTF-8'
      CONNECTION LIMIT = -1;

You can verify the creation of the videodb with the following command:

postgres=# \l

It should show you a display like the following:

                                                      List of databases
       Name    |  Owner   | Encoding |   Collate   |    Ctype    | ICU Locale | Locale Provider |   Access privileges
    -----------+----------+----------+-------------+-------------+------------+-----------------+-----------------------
     postgres  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            |
     template0 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            | =c/postgres          +
               |          |          |             |             |            |                 | postgres=CTc/postgres
     template1 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            | =c/postgres          +
               |          |          |             |             |            |                 | postgres=CTc/postgres
     videodb   | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            |
    (4 rows)

Then, you can assign a comment to the database with the following syntax:

COMMENT ON DATABASE videodb IS 'Video Store Database';

- Create a Role, Grant, and User:

In this section you create a dba role, grant privileges on the videodb database to that role, create a student user with the role, and change the ownership of the videodb database to the student user. There are four steps in this section.

- The first step creates a dba role:

CREATE ROLE dba WITH SUPERUSER;

- The second step grants all privileges on the videodb database to both the postgres superuser and the dba role:

GRANT TEMPORARY, CONNECT ON DATABASE videodb TO PUBLIC; GRANT ALL PRIVILEGES ON DATABASE videodb TO postgres; GRANT ALL PRIVILEGES ON DATABASE videodb TO dba;

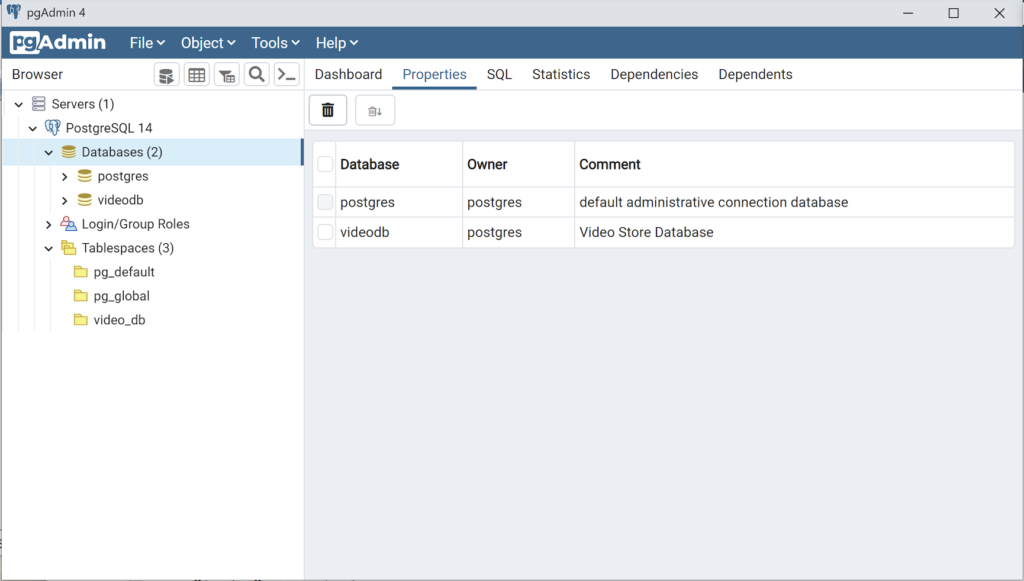

Any work in pgAdmin4 requires a grant on the videodb database to the postgres superuser. The grant enables visibility of the videodb database in the pgAdmin4 console as shown in the following image.

- The third step creates a student user:

CREATE USER student WITH ROLE dba ENCRYPTED PASSWORD 'student';

- The fourth step changes the ownership of the videodb database to the student user:

ALTER DATABASE videodb OWNER TO student;

You can verify the change of ownership for the videodb from the postgres user to student user with the following command:

postgres=# \l

It should show you a display like the following:

                                                      List of databases
       Name    |  Owner   | Encoding |   Collate   |    Ctype    | ICU Locale | Locale Provider |   Access privileges
    -----------+----------+----------+-------------+-------------+------------+-----------------+-----------------------
     postgres  | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            |
     template0 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            | =c/postgres          +
               |          |          |             |             |            |                 | postgres=CTc/postgres
     template1 | postgres | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            | =c/postgres          +
               |          |          |             |             |            |                 | postgres=CTc/postgres
     videodb   | student  | UTF8     | en_US.UTF-8 | en_US.UTF-8 |            | libc            | =Tc/student          +
               |          |          |             |             |            |                 | student=CTc/student  +
               |          |          |             |             |            |                 | dba=CTc/student
    (4 rows)
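The pg_hba.conf records edited earlier in this section are whitespace-separated fields, and a local record has no ADDRESS column. If you want to review the file from a script, the following sketch splits the records into their fields. It rests on two assumptions: parse_hba is a hypothetical helper name, and it handles only simple records like the ones shown above, not quoted values or include directives.

```python
def parse_hba(text):
    """Split pg_hba.conf text into records, skipping comments and blanks."""
    records = []
    for line in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if fields[0] == "local":
            # Unix domain socket records have no ADDRESS column.
            conn_type, database, user, method = fields[:4]
            address = None
        else:
            conn_type, database, user, address, method = fields[:5]
        records.append((conn_type, database, user, address, method))
    return records


sample = """
# TYPE  DATABASE  USER     ADDRESS       METHOD
local   all       student                peer
host    all       all      127.0.0.1/32  scram-sha-256
"""

for record in parse_hba(sample):
    print(record)
```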

Installation of PGAdmin4

These are the steps to install pgAdmin4. They include some preconditions.

You need to install the curl utility as a precondition.

sudo apt install curl

Install the public key for the repository (if not done previously):

curl -fsSL https://www.pgadmin.org/static/packages_pgadmin_org.pub | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/pgadmin.gpg

MySQL on Ubuntu

Fresh install of Ubuntu on my MacBook Pro i7 because Apple said the OS X was no longer upgradable. Time to install and configure MySQL Server. These are the steps to install MySQL on the Ubuntu Desktop.

Installation

- Update the Ubuntu OS by checking for, inspecting, and upgrading any available updates with the following commands:

    sudo apt update
    sudo apt list
    sudo apt upgrade

- Check for available MySQL Server packages with this command:

apt-cache search binaries | grep -i mysql

It should return:

    mysql-server - MySQL database server binaries and system database setup
    mysql-server-8.0 - MySQL database server binaries and system database setup
    mysql-server-core-8.0 - MySQL database server binaries
    default-mysql-server - MySQL database server binaries and system database setup (metapackage)
    default-mysql-server-core - MySQL database server binaries (metapackage)
    mariadb-server-10.6 - MariaDB database core server binaries
    mariadb-server-core-10.6 - MariaDB database core server files

- Check for more details on the MySQL packages with this command:

apt info -a mysql-server-8.0

- Install MySQL Server packages with this command:

sudo apt install mysql-server-8.0

- Start the MySQL Server service with this command:

sudo systemctl start mysql.service

- Before you can run the mysql_secure_installation script, you must set the root password. If you skip this step, the mysql_secure_installation script will enter an infinite loop and lock your terminal session. Log in to the mysql monitor with the following command:

sudo mysql

Enter a password with the following command (password is an insecure example):

ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY 'C4nGet1n!';

Quit the mysql monitor session:

quit;

- Run the mysql_secure_installation script with this command:

sudo mysql_secure_installation

Here’s the typical output from running the mysql_secure_installation script:

    Securing the MySQL server deployment.

    Enter password for user root:

    VALIDATE PASSWORD COMPONENT can be used to test passwords
    and improve security. It checks the strength of password
    and allows the users to set only those passwords which are
    secure enough. Would you like to setup VALIDATE PASSWORD component?

    Press y|Y for Yes, any other key for No: Y

    There are three levels of password validation policy:

    LOW    Length >= 8
    MEDIUM Length >= 8, numeric, mixed case, and special characters
    STRONG Length >= 8, numeric, mixed case, special characters and dictionary file

    Please enter 0 = LOW, 1 = MEDIUM and 2 = STRONG: 2
    Using existing password for root.

    Estimated strength of the password: 100
    Change the password for root ? ((Press y|Y for Yes, any other key for No) : N

     ... skipping.
    By default, a MySQL installation has an anonymous user,
    allowing anyone to log into MySQL without having to have
    a user account created for them. This is intended only for
    testing, and to make the installation go a bit smoother.
    You should remove them before moving into a production
    environment.

    Remove anonymous users? (Press y|Y for Yes, any other key for No) : Y
    Success.

    Normally, root should only be allowed to connect from
    'localhost'. This ensures that someone cannot guess at
    the root password from the network.

    Disallow root login remotely? (Press y|Y for Yes, any other key for No) : Y
    Success.

    By default, MySQL comes with a database named 'test' that
    anyone can access. This is also intended only for testing,
    and should be removed before moving into a production
    environment.

    Remove test database and access to it? (Press y|Y for Yes, any other key for No) : Y
     - Dropping test database...
    Success.

     - Removing privileges on test database...
    Success.

    Reloading the privilege tables will ensure that all changes
    made so far will take effect immediately.

    Reload privilege tables now? (Press y|Y for Yes, any other key for No) : Y
    Success.

    All done!
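The MEDIUM policy in the output above asks for a length of at least 8 with numeric, mixed case, and special characters. If you want to pre-check a candidate password in a script before you send it to MySQL, the following sketch approximates those checks. It is not MySQL's implementation: the meets_medium_policy function name is mine, and the definition of a special character (Python's string.punctuation) is an assumption.

```python
import string


def meets_medium_policy(password, min_length=8):
    """Approximate the MEDIUM checks: length, digit, mixed case, special."""
    return (
        len(password) >= min_length
        and any(c.isdigit() for c in password)
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c in string.punctuation for c in password)
    )


# The sample root password from this post passes the approximate check.
print(meets_medium_policy("C4nGet1n!"))

# A short, lowercase-only password does not.
print(meets_medium_policy("student"))
```

The server remains the authority on what it accepts, so treat a passing result as a hint rather than a guarantee.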

Configuration

The next step is configuration. It requires setting up the sample sakila and studentdb databases. The syntax has changed from prior releases. Here are the four new steps:

- Grant the root user the privilege to grant to others, which root does not have by default. You use the following syntax as the MySQL root user:

mysql> GRANT ALL ON *.* TO 'root'@'localhost';

- Download the sakila database, which you can download from this site. Click on the sakila database’s TGZ download.

When you download and extract the sakila archive, it creates a sakila-db folder in the /home/student/Downloads directory. Copy the sakila-db folder into the /home/student/Data/sakila directory. Then, change to the /home/student/Data/sakila/sakila-db directory, connect to mysql as the root user, and run the following commands:

    mysql> SOURCE /home/student/Data/sakila/sakila-db/sakila-schema.sql
    mysql> SOURCE /home/student/Data/sakila/sakila-db/sakila-data.sql

- Create the studentdb database with the following command as the MySQL root user:

mysql> CREATE DATABASE studentdb;

- Create the user with a plain English password and grant the student user full privileges on the sakila and studentdb databases:

    mysql> CREATE USER 'student'@'localhost' IDENTIFIED WITH mysql_native_password BY 'Stud3nt!';
    mysql> GRANT ALL ON studentdb.* TO 'student'@'localhost';
    mysql> GRANT ALL ON sakila.* TO 'student'@'localhost';

You can now connect to a sandboxed sakila database with the student user’s credentials, like:

mysql -ustudent -p -Dsakila

or, you can now connect to a sandboxed studentdb database with the student user’s credentials, like:

mysql -ustudent -p -Dstudentdb

MySQL Workbench Installation

sudo snap install mysql-workbench-community

You have now configured the MySQL Server 8.0.

Ubuntu Desktop 22.04

I finally got around to installing Ubuntu Desktop, Version 22.04, on my MacBook Pro 2014 since OS X stopped allowing upgrades on the device in 2021. I replaced it in 2021 with a new MacBook Pro with an i9 Intel chip. The Ubuntu documentation gave clear instructions on how to create a bootable USB drive before replacing the Mac OS software.

Unfortunately, networking was not well covered. It left me with two questions:

- How do you connect Ubuntu Desktop 22.04 to the network?

You need to use an RJ45 network cable (in this case also an RJ45 to Thunderbolt adapter) and reboot the OS. It will automatically configure your DHCP connection.

- How do you configure WiFi for Ubuntu Desktop 22.04?

You need to download and install a library, which is covered below.

After the Ubuntu Desktop installation, I noticed it didn’t provide any opportunity to update the software or configure the network. It also was not connected to the network. I connected the MacBook Pro to a physical Internet cable and rebooted the Ubuntu OS. It recognized the wired network. Then, I upgraded the installed libraries, which is almost always the best choice.

At this point, I noticed that the libraries to enable a WiFi connection were not installed. So, I installed the missing Wifi libraries with this command:

sudo apt-get install dkms bcmwl-kernel-source

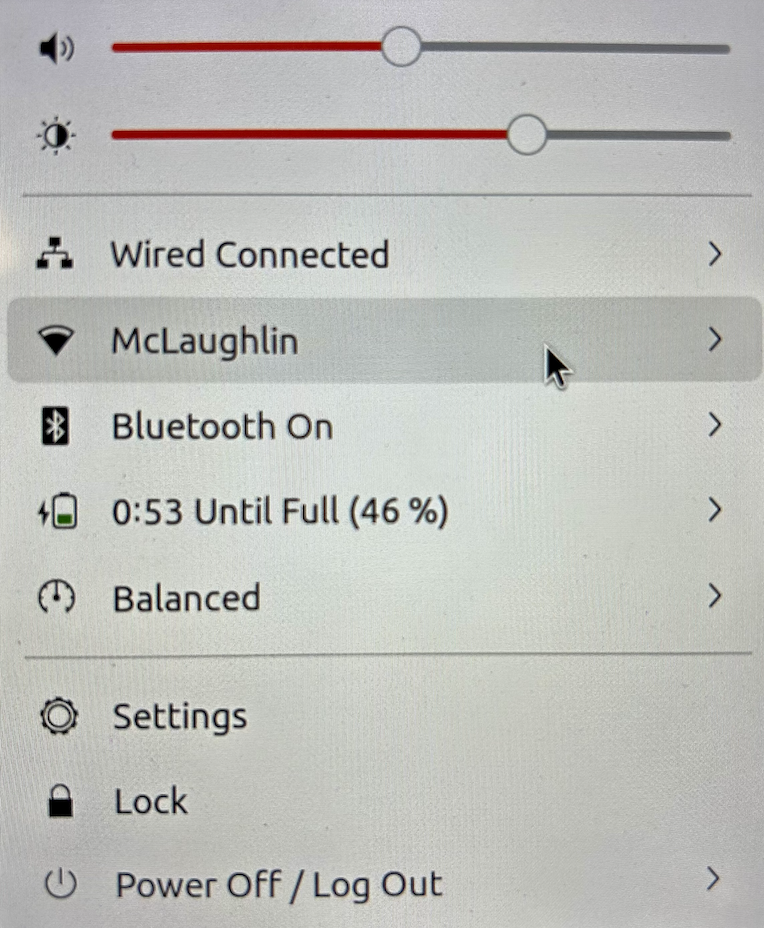

After you’ve installed the bcmwl-kernel-source libraries, navigate to the top right where you’ll find a small network icon. Click on the network icon and you’ll see the following dialog. Click on your designated Wifi, enter the password and you’ll have a Wifi connection.

As always, I hope this note helps those trying to solve a real world problem.

A tkprof Korn Shell

Reviewing old files, I thought posting my tkprof.ksh would be helpful. So, here’s the script that assumes you’re using Oracle e-Business Suite (Demo database, hence the APPS/APPS connection); and if I get a chance this summer I’ll convert it to Bash shell.

#!/bin/ksh
# -------------------------------------------------------------------------
# Author:  Michael McLaughlin
# Name:    tkprof.ksh
# Purpose: The program takes the following arguments:
#          1. A directory
#          2. A search string
#          3. A target directory
#          It assumes raw trace files have an extension of ".trc".
#          The output file name follows the pattern assigned to the
#          fn variable below, which includes a file counter because
#          it is possible for multiple trace files to be written
#          during the same minute.
# -------------------------------------------------------------------------

# Function to find minimum field delimiter.
function min
{
  # Find the whitespace that precedes the file date.
  until [[ $(ls -al $i | cut -c$minv-$minv) == " " ]]; do
    let minv=minv+1
  done
}

# Function to find maximum field delimiter.
function max
{
  # Find the whitespace that succeeds the file date.
  until [[ $(ls -al $i | cut -c$maxv-$maxv) == " " ]]; do
    let maxv=maxv+1
  done
}

# Debugging enabled by unremarking the "set -x"
# set -x

# Print header information
print =================================================================
print Running [tkprof.ksh] script ...

# Evaluate whether an argument is provided and if no argument
# is provided, then substitute the present working directory.
if [[ $# == 0 ]]; then
  dir=${PWD}
  str="*"
  des=${PWD}
elif [[ $# == 1 ]]; then
  dir=${1}
  str="*"
  des=${1}
elif [[ $# == 2 ]]; then
  dir=${1}
  str=${2}
  des=${1}
elif [[ $# == 3 ]]; then
  dir=${1}
  str=${2}
  des=${3}
fi

# Evaluate whether the source and target arguments are directories.
if [[ -d ${dir} ]] && [[ -d ${des} ]]; then

  # Print what directory and search string are targets.
  print =================================================================
  print Run in tkprof from [${dir}] directory ...
  print The files contain a string of [${str}] ...
  print =================================================================

  # Evaluate whether the argument is the present working
  # directory and if not change directory to that target
  # directory so file type evaluation will work.
  if [[ ${dir} != ${PWD} ]]; then
    cd ${dir}
  fi

  # Set file counter.
  let fcnt=0

  # Process each trace file that contains the search string.
  for i in $(grep -li "${str}" *.trc); do

    # Evaluate whether file is an ordinary file.
    if [[ -f ${i} ]]; then

      # Set default values each iteration.
      let minv=40
      let maxv=53

      # Increment counter.
      let fcnt=fcnt+1

      # Call functions to reset min and max values where necessary.
      min ${i}
      max ${i}

      # Parse date stamp from trace file without multiple IO calls.
      # Assumption that the file is from the current year.
      date=$(ls -al ${i} | cut -c${minv}-${maxv})
      mon=$(echo ${date} | cut -c1-3)
      yr=$(date | cut -c25-28)

      # Check whether the day is less than 10 to pad for reduced whitespace.
      if (( $(echo ${date} | cut -c5-6) < 10 )); then
        day=0$(echo ${date}| cut -c5-5)
        hr=$(echo ${date} | cut -c7-8)
        min=$(echo ${date} | cut -c10-11)
      else
        day=$(echo ${date} | cut -c5-6)
        hr=$(echo ${date} | cut -c8-9)
        min=$(echo ${date} | cut -c11-12)
      fi

      fn=file${fcnt}_${day}-${mon}-${yr}_${hr}:${min}:${day}
      print Old [$i] and new [$des/$fn]
      tkprof ${i} ${des}/${fn}.prf explain=APPS/APPS sort='(prsela,exeela,fchela)'

      # Print a separator after each file.
      print =================================================================
    fi
  done
else
  # Print message that a directory argument was not provided.
  print You failed to provide a single valid directory argument.
fi
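The script derives the file date by cutting fixed columns out of the ls -al output, which depends on the listing layout. As a rough Python sketch of the same naming step, you can read the modification time from the file itself. The profile_name function is a hypothetical name, the demo date is made up, and unlike the script it uses the file's own year rather than assuming the current year.

```python
import os
import tempfile
import time


def profile_name(trace_path, counter):
    """Build an output name from the trace file's modification time."""
    stamp = time.localtime(os.path.getmtime(trace_path))
    # Mirrors the script's file<n>_DD-Mon-YYYY_HH:MI:DD layout,
    # including the trailing day field.
    return "file{}_{}".format(
        counter, time.strftime("%d-%b-%Y_%H:%M:%d", stamp)
    )


# Demo: stamp a temporary .trc file with a known (made-up) local time.
with tempfile.NamedTemporaryFile(suffix=".trc", delete=False) as handle:
    demo_path = handle.name
known = time.mktime((2023, 6, 15, 12, 30, 0, 0, 0, -1))
os.utime(demo_path, (known, known))
print(profile_name(demo_path, 1))
os.remove(demo_path)
```

The month abbreviation comes from the active locale, so the names match the script's output only where ls prints English month names.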

I hope this helps those looking for a solution.

Listener for APEX

Unless dbca builds the listener.ora file for us, we often leave out some component. For example, when you run the following listener control program command, the status it returns indicates an incorrectly configured listener.ora file.

lsnrctl status

It returns the following, which displays an endpoint for the XDB Server (I’m using Oracle Database 11g XE because it’s pre-containerized and has a small testing footprint):

LSNRCTL for Linux: Version 11.2.0.2.0 - Production on 24-MAR-2023 00:59:06

Copyright (c) 1991, 2011, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=EXTPROC_FOR_XE)))
STATUS of the LISTENER
------------------------
Alias                     LISTENER
Version                   TNSLSNR for Linux: Version 11.2.0.2.0 - Production
Start Date                21-MAR-2023 21:17:37
Uptime                    2 days 3 hr. 41 min. 29 sec
Trace Level               off
Security                  ON: Local OS Authentication
SNMP                      OFF
Default Service           XE
Listener Parameter File   /u01/app/oracle/product/11.2.0/xe/network/admin/listener.ora
Listener Log File         /u01/app/oracle/diag/tnslsnr/localhost/listener/alert/log.xml
Listening Endpoints Summary...
  (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC_FOR_XE)))
  (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=localhost)(PORT=1521)))
  (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=localhost)(PORT=8080))(Presentation=HTTP)(Session=RAW))
Services Summary...
Service "PLSExtProc" has 1 instance(s).
  Instance "PLSExtProc", status UNKNOWN, has 1 handler(s) for this service...
Service "XE" has 1 instance(s).
  Instance "XE", status READY, has 1 handler(s) for this service...
Service "XEXDB" has 1 instance(s).
  Instance "XE", status READY, has 1 handler(s) for this service...
The command completed successfully

The listener.ora file is missing the second SID_DESC entry in the SID_LIST_LISTENER value, which is the one for CLRExtProc. A complete listener.ora file for Oracle Database XE should look as follows:

# listener.ora Network Configuration FILE:

SID_LIST_LISTENER =
  (SID_LIST =
    (SID_DESC =
      (SID_NAME = PLSExtProc)
      (ORACLE_HOME = /u01/app/oracle/product/11.2.0/xe)
      (PROGRAM = extproc)
    )
    (SID_DESC =
      (SID_NAME = CLRExtProc)
      (ORACLE_HOME = /u01/app/oracle/product/11.2.0/xe)
      (PROGRAM = extproc)
    )
  )

LISTENER =
  (DESCRIPTION_LIST =
    (DESCRIPTION =
      (ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC_FOR_XE))
      (ADDRESS = (PROTOCOL = TCP)(HOST = localhost.localdomain)(PORT = 1521))
    )
  )

DEFAULT_SERVICE_LISTENER = (XE)

With this listener.ora file, the Oracle listener control utility will return the following correct status, which hides the XDB Server’s endpoint:

LSNRCTL for Linux: Version 11.2.0.2.0 - Production on 24-MAR-2023 02:38:57

Copyright (c) 1991, 2011, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=EXTPROC_FOR_XE)))
STATUS of the LISTENER
------------------------
Alias                     LISTENER
Version                   TNSLSNR for Linux: Version 11.2.0.2.0 - Production
Start Date                24-MAR-2023 02:38:15
Uptime                    0 days 0 hr. 0 min. 42 sec
Trace Level               off
Security                  ON: Local OS Authentication
SNMP                      OFF
Default Service           XE
Listener Parameter File   /u01/app/oracle/product/11.2.0/xe/network/admin/listener.ora
Listener Log File         /u01/app/oracle/product/11.2.0/xe/log/diag/tnslsnr/localhost/listener/alert/log.xml
Listening Endpoints Summary...
  (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC_FOR_XE)))
  (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=localhost)(PORT=1521)))
Services Summary...
Service "CLRExtProc" has 1 instance(s).
  Instance "CLRExtProc", status UNKNOWN, has 1 handler(s) for this service...
Service "PLSExtProc" has 1 instance(s).
  Instance "PLSExtProc", status UNKNOWN, has 1 handler(s) for this service...
The command completed successfully
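A quick way to check a listener.ora file for the missing entry is to scan it for SID_NAME values. This is only a sketch: the listed_sids and missing_sids functions are hypothetical names, the sample text is a cut-down file with one SID_DESC entry, and the regular expression is a simple text match rather than a real parser (it would also match SID_NAME inside a comment).

```python
import re


def listed_sids(listener_ora_text):
    """Return the SID_NAME values found in a listener.ora file's text."""
    return re.findall(r"SID_NAME\s*=\s*(\w+)", listener_ora_text)


def missing_sids(listener_ora_text, required=("PLSExtProc", "CLRExtProc")):
    """Return the required SID names that the text does not define."""
    found = set(listed_sids(listener_ora_text))
    return [sid for sid in required if sid not in found]


# Sample with only the PLSExtProc entry, like the incomplete file.
sample = """
SID_LIST_LISTENER =
  (SID_LIST =
    (SID_DESC =
      (SID_NAME = PLSExtProc)
      (ORACLE_HOME = /u01/app/oracle/product/11.2.0/xe)
      (PROGRAM = extproc)
    )
  )
"""

print(missing_sids(sample))  # prints ['CLRExtProc']
```

To check a real file, read its contents into a string and pass that string to missing_sids instead of the sample.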

It seems a number of examples on the web leave the CLRExtProc entry out of the SID_LIST_LISTENER value in the listener.ora file. As always, I hope this helps those looking for a complete solution rather than generic instructions without a concrete example.